Human Factors Breakdown: C-17 Crash at Elmendorf

Last week, we looked at a fatal C-17 accident at Elmendorf in 2010. I now want to focus on the analysis of the situation leading up to the stall. It’s clear that the flight crew did not react to the warning signs as they attempted their manoeuvres at low altitude without reaching their target airspeed. What isn’t immediately clear is why no one reacted.

The investigation was quickly able to show that the maintenance on the aircraft was in order and there was no evidence of mechanical, structural or electrical failure that could have caused the loss of control. There was no evidence of medical issues or any signs of incapacitation for any of the crew. Every crew member was experienced and well respected, none of them had lifestyle factors that could have been relevant, and there was no evidence to suggest that any of the crew were fatigued or inadequately rested.

So the first obvious question is why did no one on the flight react to the stall warner when it activated? The commander did not make any attempt to increase airspeed or initiate recovery. The first officer did not call out that he’d retracted the slats. The safety officer did not react or seem to expect anyone else to react. The reason for this was simple and sad: The commander of the aircraft didn’t believe that stall warnings were relevant and had told the crew to ignore them.

This was standard procedure for his flights: the commander routinely gave the instruction to ignore stall warnings during aerial demonstrations. He said in training that stall warnings were an “anomaly,” inaccurate and transitory, and that they would stop when the turn was completed; he believed that the aircraft would not be adversely affected. He had flown numerous aerial demonstrations in the C-17 with the stall warnings active and without incident.

There’s a phrase we’ve talked about before, normalisation of deviance, where someone takes a shortcut or disregards a procedure, nothing goes wrong, and so they continue to do so until no one much notices any more. Over time, safety and security requirements erode as people become accustomed to this behaviour and stop seeing it as deviant, even though their actions can end up far outside their own personal boundaries for safety.

As Mendel said in the comments, the routine had been modified by the commander in order to create a better show. But those modifications were never approved and, more importantly, they would never have been approved if anyone had been paying attention. Each of the commander’s changes pushed the aircraft closer to its operational limits. The changes to the profile slowly but surely eroded the safety buffer built into the routine.

This can be seen a bit more clearly by going over the individual factors as defined by the final report.

Causal Factors

The first causal factor was straightforward procedural error, starting with the fact that the commander had replaced the approved aerial demonstration procedure with his own techniques in order to increase the impact. He chose to climb steeper, level out sooner and turn steeper than the manoeuvre called for.

Energy management is the most critical factor in aerobatics and every single change that the commander made affected the amount of energy available. If the aircraft does not maintain sufficient speed and altitude, it cannot sustain controlled flight.

On that final flight, the commander climbed away from the runway at a pitch angle of 40° instead of focusing on minimum climb-out speed. As a result, the C-17 only reached 107 knots in the climb, instead of the target of 133 knots. He then levelled out at 850 feet instead of continuing to the minimum altitude of 1,500 feet. He maintained full right rudder and control stick pressure for the 80/260 reversal turn, increasing the bank angle from 45° to 60°. All of these changes reduced the amount of energy available to the aircraft.
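To put rough numbers on how these changes ate into the energy margin, here is a minimal sketch using two simplified textbook relations. These ratios are not taken from the report: it assumes a level, coordinated turn (load factor n = 1/cos(bank), with stall speed scaling with the square root of n) and treats kinetic energy as scaling with the square of airspeed. It ignores the lower altitude, thrust, and the flap and slat retraction, and it says nothing about the C-17’s actual stall speed; the function names are hypothetical.

```python
import math


def stall_speed_factor(bank_deg: float) -> float:
    """Multiplier on the wings-level stall speed in a level, coordinated turn.

    Simplifying assumption: load factor n = 1 / cos(bank), and stall speed
    scales with sqrt(n). Only valid for bank angles below 90 degrees.
    """
    if not 0 <= bank_deg < 90:
        raise ValueError("bank angle must be in [0, 90) degrees")
    n = 1.0 / math.cos(math.radians(bank_deg))
    return math.sqrt(n)


def kinetic_energy_ratio(actual_kt: float, target_kt: float) -> float:
    """Fraction of the target kinetic energy actually available (KE scales with v^2)."""
    return (actual_kt / target_kt) ** 2


# Bank angles from the article: 45 degrees recommended, 60 degrees flown.
for bank in (45, 60):
    print(f"{bank} deg bank: stall speed x{stall_speed_factor(bank):.2f}")

# Climb speeds from the article: 107 knots reached versus the 133-knot target.
print(f"107 kt vs 133 kt target: {kinetic_energy_ratio(107, 133):.0%} of target kinetic energy")
```

Run as-is, this prints a stall-speed multiplier of 1.19 at 45° and 1.41 at 60°, and shows that 107 knots carries only about 65% of the kinetic energy of 133 knots. Under these assumptions, the steeper bank raised the stall speed at the same moment the slower climb had left less speed in hand.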

The manoeuvre was meant to demonstrate the aircraft capabilities but the commander instead pushed the C-17 to its maximum performance at the threshold of a stall, the very definition of a low energy state.

The second procedural error is the obvious one: the commander never implemented proper stall recovery procedures.

The operating manual gives the following steps for C-17 stall recovery:

- apply forward stick pressure

- apply maximum available thrust

- return or maintain a level flight attitude.

Large rudder inputs, it says, should be avoided.

Instead the commander continued to execute his 260° turn. He consistently maintained control stick pressure and he did not return to a level flight attitude, first continuing the turn and then attempting to turn in the other direction. Throughout, full rudder was applied, first right rudder and then, in the deep stall, full left rudder.

The commander’s reaction to the stall is actually a very good example of what the investigators defined as the second causal factor: overaggressive.

Overaggressive is a factor when an individual or crew is excessive in the manner in which they conduct a mission.

The pilot of the flight was the commander and responsible for all aspects. He planned, briefed and flew the sortie with the intent of pushing the C-17 to maximum performance. His choice of bank angles, altitudes, timing and rudder usage all contributed to the aircraft entering a deep stall from which he could not recover.

The investigators found that the commander had regularly planned and flown the 80/260 manoeuvre using 60° of bank with full rudder, instead of the 45° bank angle recommended for the manoeuvre, in order to tighten the turn and keep the display as close to the crowd as possible.

On that day, he’d planned an initial climb-out of 1,000 to 1,500 feet with a 35-40° nose high attitude. Normally, that climb-out would be flown based on minimum climb-out speed, with the nose-high attitude of about 25-35°.

The commander had become overaggressive, excessively pushing the parameters of the flight beyond recommendations and accepted limits. In other words, the crash was caused by the commander deliberately planning and executing the flight without following the procedure for the manoeuvre.

Contributing Factors

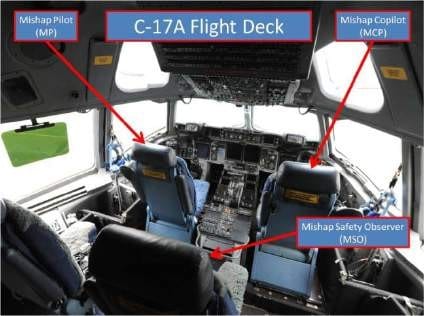

The contributing factors centre on the lack of reaction once things started going wrong, and on the human factors affecting the flight crew during the sixty-second flight. This section is interesting because it describes a number of different types of human factors, which helps us to better understand what happened in the cockpit.

Caution/Warning Ignored is when a caution or warning is perceived and understood by the individual but ignored, leading to an unsafe situation.

Challenge and Reply is when communications did not include supportive feedback or acknowledgement to ensure that personnel correctly understood announcements or directives.

When the stall warner sounded, the Pilot Monitoring called out “Temperature, altitude, lookin’ good.” Clearly he was monitoring the systems; however, he did not consider the stall warning worth commenting on. At the same time, the commander did not adjust his control inputs after the stall warner sounded, for example by releasing pressure on the control stick or reducing the bank angle. Neither the Pilot Monitoring nor the Safety Officer made any comment regarding recovery from the impending stall until after the aircraft was stalling.

These are the actions in the cockpit that contributed to the fatal crash, but the investigation also looked into the history of the crew to try to understand why the stall warning was ignored and why the personnel did not consider it to be a problem.

Two training issues were brought to light. The commander routinely instructed flight crew that stall warnings could be ignored during demonstrations, effectively that the warnings did not apply to them.

The second issue is that the commander had instructed the Pilot Monitoring to retract flaps and slats automatically, without a challenge or reply. On that day, the Pilot Monitoring retracted the flaps and slats silently, as he’d been trained to do. However, the C-17 was low and slow after the climb-out, and retracting the flaps and slats affected the angle of attack, bringing the aircraft closer to the stall. We can’t tell whether the commander and the safety officer knew that the configuration of the aircraft had changed.

Channelized Attention is when the individual is focusing all conscious attention on a limited number of environmental cues to the exclusion of others of a subjectively equal or higher or more immediate priority, leading to an unsafe situation.

The commander showed two clear instances of channelized attention. The first was that he continued his aggressive turn, with a priority of keeping the aircraft close to the show centre (that is, to where the crowd would best be able to see the C-17) despite the low energy state which triggered the stall warner. The second is his response after the aircraft began to stall: he moved the control stick to the left but continued to hold the stick back and applied full left rudder, which meant that the manoeuvre to the left did not help and he failed to reduce the angle of attack.

Overconfidence doesn’t need defining, other than that misplaced confidence can be in your own skills but also in your colleagues or your aircraft.

The commander showed overconfidence both in his own skills and in the capabilities of the C-17. He taught that stall warnings were an “anomaly,” inaccurate and transitory, and to be expected during aggressive aerial demonstration manoeuvres. He was “not concerned” about stalling during the manoeuvres, because he believed that the stall warnings would stop when the turn was completed. He had flown numerous demonstrations with the stall warnings active and without incident.

Although this isn’t cited in the report, I think it is fair to say that the crew also showed overconfidence in the commander, accepting that he knew better than the textbooks, and as a result they too became overconfident in the C-17.

Misplaced Motivation is when an individual or unit replaces the primary goal of a mission with a personal goal.

The commander wanted to put on a good airshow, which doesn’t sound misplaced until he started to plan a compressed profile, using unsafe techniques, in order to impress the crowd. The point of the profile was to demonstrate the performance of the C-17, not its maximum performance. However, the commander’s desire to put on a good show for the spectators led him to push the C-17 harder and harder, until eventually the flight went beyond limits.

Expectancy is when the individual expects to perceive a certain reality and those expectations are strong enough to create a false perception of the expectation.

The commander had consistently planned, practised and flown aerial demonstrations with the stall warning sounding during the 80/260 manoeuvre. He taught demonstration pilots that the stall warning was transient and could be ignored. So when the stall warner activated during the mishap flight, the crew responded as trained, ignoring the audio and tactile signs that the energy state of the aircraft was dangerously low. Everyone clearly believed that there was no actual risk of the aircraft stalling.

Procedural Guidance/Publications is when written directions, checklists or other published guidance are inadequate, misleading or inappropriate in a way that creates an unsafe situation.

Air Force Policy Directive (AFPD) 11-2, Aircraft Rules and Procedures, para. 1 states:

“The Air Force establishes rules and procedures that meet global interoperability requirements for the full range of aircraft operations. Adherence to prescribed rules and procedures is mandatory for all personnel involved in aircraft operations.” (Emphasis added.)

Two years before the accident, in the spring of 2008, the commander underwent “aerial demonstration upgrade training” and was recommended as a safety observer.

There, the instructor taught the crews that after take-off, they should start lowering the nose at 1,000 feet while continuing to climb to 1,500 feet above ground level. The instructor also taught crews to make the initial 80° turn at a speed 15 knots above flap retract speed. He included the use of rudder in his curriculum but also taught that there was no requirement to use rudder in the C-17 for that manoeuvre. He taught that the profile as described in Air Force Instruction 11-246 for Air Force Aircraft Demonstrations should be considered PROCEDURE not GUIDELINES.

The commander of the fatal flight had clearly been trained in all these things, but over time he lost sight of the requirements. They were not reinforced by the documentation, which could be read as guidelines rather than procedure, giving him the opportunity to modify and vary the routines.

The mishap pilot violated regulatory provisions and multiple flight manual procedures, placing the aircraft outside established flight parameters at an attitude and altitude where recovery was not possible.

Furthermore, the mishap co-pilot and mishap safety observer did not realize the developing dangerous situation and failed to make appropriate inputs.

Command Climate

I’m pleased that the investigation didn’t stop there but carried on to look at the environment which enabled the pilot to plan and perform what was a deliberately unsafe air show routine.

This had more to do with what we discussed in the first post, in which a highly experienced commander carries such authority that those around him don’t think to question him.

The commander had a stellar reputation, known as an excellent pilot, extremely precise and knowledgeable. He was highly respected by the squadron leadership for his aviation skills and his instructor abilities.

As a result, he was able to work independently. Over time, the US Air Force chain of command allowed him to modify the routine with little or no oversight because they trusted his judgement.

They also allowed him to change the checklist for demonstration flights without going through the process of having those changes approved. In fact, it was shown that in the 3rd Wing, checklists were often modified and put into use without being approved, directly in violation of Air Force regulations. The practice had become so widespread that no one gave it any further thought.

The Operations Group Commander responsible for supervising the aerial demonstration program was very interested in the C-17 programme. He had booked a flight with the commander to evaluate his performance but had to cancel when a last-minute emergency came up. It was never rescheduled. Another Operations Group Commander intended to observe the demonstration flights, but ended up busy with other things when the C-17 flights took place.

No one saw this as a big deal. His superiors simply assumed that the commander was complying with regulation and did not make any extra effort to review his techniques and performances. No one realised that as a result, the commander was operating with no checks and balances at all.

I’m sure the commander never made a decision to fly an unsafe airshow. Over time, though, the demonstration had evolved into a series of barely-safe manoeuvres, eroding the margins allowing for safe flight.

The commander’s overconfidence then led him to continue the turn during a low-altitude stall, with a crew who had been trained by the commander that the stall warning was not meaningful. As instructed, they did nothing to respond to the constant aural warnings, instead confirming that everything was “looking good” as the aircraft flew low and slow into the second turn. Neither the co-pilot nor the safety observer saw any cause for concern until the final seconds of the flight. Their reaction, or rather lack of it, happened because the commander was repeatedly and consistently allowed to fly unsafe aerial routines. It was normal.

Probable Cause

The board president found clear and convincing evidence that the cause of the mishap was pilot error. The pilot violated regulatory provisions and multiple flight manual procedures, placing the aircraft outside established flight parameters at an attitude and altitude where recovery was not possible. Furthermore, the copilot and safety observer did not realize the developing dangerous situation and failed to make appropriate inputs. In addition to multiple procedural errors, the board president found sufficient evidence that the crew on the flight deck ignored cautions and warnings and failed to respond to various challenge and reply items. The board also found channelized attention, overconfidence, expectancy, misplaced motivation, procedural guidance, and program oversight substantially contributed to the mishap.

Thanks to Mendel, who found a copy of the report on Wikimedia Commons: 2010 Alaska USAF C-17 Crash Report

This is a clear case of normalisation of deviance, when people become so accustomed to cutting corners and pushing limits, they stop even noticing the deviant behaviour. Within the group, each individual’s ability to make decisions about risk becomes compromised as risk-taking behaviour becomes normal. Eventually, the risks taken are well beyond what any individual would have considered reasonable at the start.

To put it simply, it was just a matter of time until something went wrong.

The report talks in detail about causes, but has no recommendations to prevent a recurrence. The first thing that occurs to me is that pilots should be reviewed by people who don’t know them, and will mark off for bad procedure rather than letting the pilot get away with something because they’re known to be skilled; that would be inconvenient, involving bringing reviewers from some distance, but it might be useful in general.

I don’t remember seeing the phrase “normalization of deviance” used before, but I’ve probably missed it in other reports since I read rather than study; the behavior shows up elsewhere, but I was most struck by the resemblance to https://fearoflanding.com/accidents/accident-reports/how-to-drop-a-gulfstream-iv-into-a-ravine-habitual-noncompliance/, in which the checklist processing had become more and more perfunctory. The people responsible for that crash were part of a relatively small operation; this incident makes clear that even a large hierarchy can get too loose.

And I wonder whether any of the people reviewed (or AFAICT trained) by this trimmer will themselves get reviewed more rigorously, to make sure that his bad habits don’t spread.

To be fair, military reports have a different format and do not include recommendations. I think it’s safe to assume that there was a widespread effect as a result of this crash and the report which showed that everyone from the ground up was culpable (modifying checklists without authorisation being commonplace in that squadron, for example). A situation like this builds up very slowly over time, so it’s incredibly hard to detect.

I tried to write up the Challenger crash, which is where the term normalization of deviance was originally coined. It’s again a case of small changes in requirements and cultural shifts building up over years until the risks taken went well beyond what any individual would have said was reasonable at the start. It’s an incredibly insidious problem.

“The Challenger Launch Decision” by Diane Vaughan is the book that first popularized the idea of “normalization of deviance.” I’m reading it now and it is very illuminating.

Seems pretty straightforward, the pilot pushed it too far, and the copilot and safety observer were complicit. How is it they went along with the pilot? Are they trained to handle pilots who can’t or won’t follow procedures correctly? As Chip posted, what is being done to prevent this sort of thing from happening again? To me it’s clearly a training issue, the crew need to take responsibility and remove pilots who are unfit to fly.

They are trained in cockpit resource management but it is very hard to overcome the authority of someone that you know to be a better aviator than you are, who also holds the respect of command and is clearly an expert. In this case, he was also their instructor — and over time, when nothing went wrong, it would become easier and easier to disregard issues.

Sylvia’s summing-up needs no further comment actually; it is excellent.

It also subtly suggests the culture in an air force, which is different from airlines.

I once failed an evaluation for air force training.

I disengaged from a simulated dogfight because I could feel the approaching stall in the controls.

The other applicant was much more aggressive and got at my tail.

He passed.

I “lacked the will to kill”.

Thanks for the great report, Sylvia! I’m currently in (EASA) ATPL ground school and the HPL subject actually deals extensively with the factors and crew attitudes mentioned in this article. A lesson from crashes like this one and too many others, I suppose.

Hi Philippe and nice to see you here! This one is pretty spectacular, to be honest, but human factors in aviation do seem to provide endless opportunities for analysis and studies.

An excellent and very comprehensive review Sylvia! It still leaves me wondering however. As I have said earlier, in any conventional aircraft, applying opposite aileron when stalling heavily banked merely seals one’s fate. How can this pilot (AND the Fairchild B52 incident pilot) not have known or practised this? Any suggestions from those more experienced than me? To NOT apply opposite aileron is counterintuitive but in these circumstances only training and/or practising the manoeuvre will ensure the correct control response. Where is the evidence of appropriate training?

An excellent report Sylvia. I’m still wondering however!

In any conventional aircraft, it is vital that opposite aileron is NOT applied when steeply banked and on the point of stalling! It will merely seal your fate!

As I said earlier, “the commander moved the control stick in the opposite direction, full left.” Where is the evidence of basic training? Why did he not know better?

Philippa may get something out of this. All major flight training institutes include human factors: study of sequences of errors that lead to crashes.

But do bear in mind, Philippa, that flying a regular mission is very different from display flying, and the apparently total acceptance of ignoring anything even resembling proper cockpit procedures is appalling in this case. Major airlines in particular are very intolerant of any deviation, even the slightest, from cockpit procedures. That is for a very good reason, and it will all be brought to the fore during your training, Philippa.

I have been trained on the Aerospatiale Corvette by factory test pilots. One may think that these people just get up in a new type of aircraft, see how it goes and hope for the best. And yes, they do bring the aircraft into situations where it will be totally outside the envelope.

But they accept the risk BUT reduce it by very meticulous preparation. Everything they do in the air has been worked out in the finest detail together with engineers before they try it out.

The C17 crew also “tried it out”, but without fully understanding the potential consequences. Test pilots, on the other hand, do not deviate from the planned manoeuvre but instead stick with it to eliminate risk insofar as possible, and then write their reports.

These pilots also have engineering degrees.

I know because there was no simulator for the Corvette, all our training was done in the air. And even though that meant that we were shown e.g. Mach buffet IN THE AIRCRAFT, I am absolutely certain that we were not put in any kind of danger.

Opposite rudder and aileron? Yes, I have done that intentionally.

In a Tiger Moth, practicing spinning.

Not a good idea in a large jet at low altitude.

PS Philippa, join this blog. I retired long ago and have done all sorts of things in airplanes, including stupid ones.

Sylvia has an excellent blog that can be entertaining, but also can enhance your understanding of aviation in what I hope will be a long and safe career.

Nobody seems to see my point!

The pilot of this C17 and that of the Fairchild B52, at a point where there was probably already no hope of recovery, nevertheless applied opposite aileron instead of neutral aileron. This is the action of someone who is ignorant of the fatal consequences of such action! This alone is indicative of seriously deficient training even if it wouldn’t have changed the outcome! Such a pilot should never have been in command of a display aircraft!

See https://en.m.wikipedia.org/wiki/PARE_(aviation) Even if not all directly applicable to a C17, NEUTRAL AILERON IS ESSENTIAL TO RECOVER FROM A BANKED STALL/SPIN ON ANY AIRCRAFT! Applying opposite aileron puts the inside wing into a deep stall thus exacerbating the stall condition!

Perhaps I should explain my point more fully!

If one is piloting ANY conventional aircraft (including a C17) in a steep right bank and approaching a stall, where one would normally apply left aileron to recover, then in an incipient stall the application of left aileron will fully stall the inside wing. This WILL result in the aircraft continuing to bank right. The ignorant or untrained pilot will then apply MORE left aileron, thus exacerbating the problem. This is what this pilot did (and the Fairchild B52 pilot). ONLY neutral aileron and left rudder should be applied.

This is why I say these pilots were inadequately trained for displays (or anything else for that matter). All pilots should practice this at least in a simulator!

Steve, I think the point was not that he didn’t know how to recover from a stall but that up until the very end, he didn’t believe he was in one. So he didn’t want to stop the display and go into level flight, which he had of course been trained to do (if his training was that lacking, then that would have come up in the investigation).

Sylvia, I take your point, but if I have understood correctly, one of his last actions was to apply full left aileron. Why would he continue to do this if the aircraft did not respond as he would have expected? Sorry, I still believe he did not understand the situation he was in or how to get out of it! Had he realised, he would have hit the ground with neutral aileron applied. If he didn’t realise he was stalling until he hit the ground, that in itself says something about his training or the lack thereof! Anyway, I have said enough!

In both incidents, being fixated on what you expect to happen (that is, the plane completing the manoeuvre despite the stall warning) would be a contributory cause. If the pilot thinks they’re not stalled, ailerons would work. And in both incidents, due to the low height above ground, if the pilot thought they were stalled, they’d know the plane was doomed anyhow. So all you can do in that situation is hope you get lucky one more time.

The real lesson here is how hard it is to rein in that sort of behaviour when the reckless airman is considered a good pilot. The C-17 commander was a respected instructor; the B-52 commander was in charge of stan/eval, setting the standards! It’s tough to question the judgment of someone you consider to be a better pilot than you are.

Sorry, can’t resist one last observation! You list the following:-

“The operating manual gives the following steps for C-17 stall recovery:

apply forward stick pressure

apply maximum available thrust

return or maintain a level flight attitude.

Large rudder inputs, it says, should be avoided.”

This procedure does not explain how level flight is to be attained. It says avoid large rudder inputs but what about avoiding incorrect aileron inputs – much more serious!

I feel as if I am the only one on the planet who appreciates the seriousness of the problem of which I speak!

This was drummed into me from day 1 of stall training: level ailerons only and lively footwork.

This reminds me of a comment from the ValuJet crash report, which I believe you wrote about years ago – “Things can go wrong, but rarely do – and people draw the wrong conclusions”.

To me, beyond the poor supervision, the commander had taken the C-17 to the edge of its performance envelope enough he thought he was plenty safe – but in fact he was at the ragged edge, the “death corner” I’ve heard it called, where he was neutrally stable and a hair’s breadth from going too far.

This accident is yet another demonstration of the need to follow established procedures and performance limits as given for anyone who wasn’t involved in design and flight testing and who doesn’t know why the given limits are there.

While there are endless examples of pilots pushing their airplane too hard and stalling out, another big-plane-at-airshow example is the 1995 RAF Nimrod crash at Toronto’s air show.

Language from the report could have come from the same template:

“As the aircraft passed 950 ft, engine power was reduced to almost flight idle, following which the speed reduced rapidly to 122 knots, below the 150 knots recommended and taught for that stage of the display. The aircraft was rolled to 70° of port bank, shortly afterwards reducing to 45°, and the nose lowered to 5° below the horizon. During this turn the airspeed increased slightly and the G-loading increased to 1.6G. However, the combination of the low airspeed and the G-loading led the aircraft to stall, whereupon the port wing dropped to 85° of bank and the nose dropped to 18° below the horizon. Full starboard aileron and full engine power were applied in an attempt to recover the aircraft but, by this stage, there was insufficient height to recover and the aircraft hit the water.

The Inquiry determined that the captain made an error of judgement in modifying one of the display manoeuvres to the extent that he stalled the aircraft at a height and attitude from which recovery was impossible. The Inquiry considered that contributory factors could have included deficiencies in the flight deck crew’s training and in the method of supervision which could have allowed the captain to develop an unsafe technique without full appreciation of the consequences.”

I almost killed myself on my first solo practice of the climbing turn full power stall for my private pilot licence. I learned in a Cessna 152. I was very young and weighed only 130 lbs. The plane was only fueled for an hour of flight. That plane was light. I was told to practice at 3500 ft. I started a full power left climbing turn. The plane, being so light, would not stall, almost hovering like a helicopter. I could not keep the ball centered. The plane started to skid left toward the low wing. The fuselage created turbulence over the high right wing. The high wing stalled. The plane snap-rolled into a right spin. The engine red-lined immediately. The ground was coming up fast. My instructor had told me that if I ever got into a spin, I should idle the throttle and take my hands and feet off the controls. I did. The plane got out of the spin on its own, and was now in a very steep right turn dive. I applied left rudder. The plane was now in a straight deep dive. I pulled back on the yoke. The plane came out of the dive and returned to level flight while every rivet in the wings squeaked. I worried that the wings would pull off, but they held. I was at 500 ft when I was level. I had dropped 3000 ft just like that. I flew back to the airfield, examined the plane for cracks, found none, returned the keys at the front desk, and drove home. That episode taught me an important lesson: there are bold pilots, and there are old pilots, but there are no bold old pilots. I am now 80 yrs old. Thank you all for excellent comments on this tragic accident.

That must have been very frightening! Did you continue on to get your PPL? I think that might kill the love of flying out of most students!

Yes, I went on to get my PPL. I flew in national competition in Denmark and won second place in 1968. Then I immigrated to the US. Now I only fly occasionally as co-pilot with a retired fighter pilot friend. I read all crash analyses and learn from them. I lost a pilot friend who crashed his plane in July 2017. I believe that most airplane crashes are due to pilot-induced stalls. Even the fatal Snowbird crash in Canada a few days ago was in reality due to a stall, because the pilot pulled way up after he lost engine power, then turned. He ran out of airspeed at the top of his climbing turn, stalled his high wing, went into a right-hand spin, immediately recovered from the spin, but was then in a steep dive too close to the ground, so his last option was a late ejection. Tragically, his co-pilot died. Had he instead chosen to level off before he ran out of airspeed, then glide with minimal turns to a belly landing on the best available ground, both pilots would most likely have survived. It is unfair of me to second-guess the pilot’s choice, but I flew gliders in Denmark as a youngster, and I learned that you can glide any plane to a belly landing and most likely survive. In my book, the safest option at power loss is to immediately push the yoke forward, then glide straight with minimal turns to a belly landing, even if the terrain is unfriendly. Too often pilots try to turn back to the airport, then stall in a low-altitude turn with fatal results. If you lose power at high altitude, first set up your glide, then decide on a spot to belly land if needed, then try to restart the engine. The key is to plan your emergency responses in advance, before you take off. I apologize in advance to readers who think I have no business judging a pilot’s choice in a crisis.

When I bought my aeroplane, a Sky Ranger 3-axis microlight, I hangared it at a small Highlands airstrip surrounded by pine trees. I was particularly exercised by the thought of what to do in the event of an engine failure on climb-out. After some research I found the following suggestion on the net: at engine failure, immediately pull the stick straight back and apply full rudder and full flap. The aircraft will rear up and turn on its axis 180 degrees until it is heading earthwards, thanks to its initial momentum. Almost a stall turn. I put this into practice on nine occasions, starting at 4000 ft, and on the last three occasions got down to a simulated EFATO (engine failure after take-off) at 400 ft. I’m comfortable that I could have done this even at 300 ft without any risk of a spin. The only downsides are that one might have to sideslip to lose enough height, and it would only work with a light tailwind unless you have a very long runway. One would also have to know there are no other aircraft following you up. I still wonder if this might have saved the aircraft and pax in the Hudson River incident!

Sounds to me like an aerobatic manoeuvre that is not suitable for regular private pilots, and not suitable for planes not rated for aerobatics. That would also exclude the A320 that ditched safely in the Hudson. I would clear a path through the trees at the end of the runway, and sell that timber.

Yes, others have said that, but it puts absolutely no undue stress on the airframe whatsoever and gave me a lot of comfort knowing I could be certain of getting back down alive! A lot of pilots have died landing in trees, even though it’s generally a better option than trying to fly a circuit with a dead engine! And you can’t chop down trees that don’t belong to you!

Good write-up, but there was a LOT more to this story that wasn’t in the mishap report. The six C-17s at Elmendorf are from two squadrons: the 517th, which is active duty, and the 249th, which is Air National Guard. The two “Associate Units” “share” the planes, and it was a new and trendy thing circa 2008. The demo program belonged to the 3rd Operations Group (active duty), but they gave it to the Guard after the planes arrived in 2007. The mishap pilot was the Guard guy, and he was training an active-duty pilot to take over the program (I THINK he was in the observer seat), so in addition to being a practice sortie, this was also a TRAINING mission. Hence at least one of the other pilots was seeing this for the first time and considered the warnings and cautions “normal.” The lack of leadership oversight, while mentioned, doesn’t explain the reason for the disconnect. The demo program was an active-duty program that the Guard took on as a favour (I guess); the 3rd OG wasn’t looking because it wasn’t theirs, and the Guard apparently treated it as an additional duty, like Snack-o. The most interesting thing IMHO is that while the mishap pilot was at fault, this was a leadership failure at squadron and group levels, yet not one commander received any public (or, to my knowledge, private) rebuke or punishment. I believe the omission of the AD/Guard angle and the lack of punishment were intended to avoid tarnishing the reputation of the Associate Unit concept.

My neighbor is an active C-17 pilot and told me about this accident. He attributed the accident to the crew’s flat and unhealthy “power gradient”; i.e., the presence of equally ranked pilots, with the Pilot Flying convincing his equal that his way was the only way.

This causes the other pilot to shut down his own instincts and replace them with dogmatic trust in the Pilot Flying. It’s about the worst kind of resignation: to give up your situational awareness and tunnel-vision into doing only your little part of the job.

I succumbed to an equal power gradient gone wrong a year ago, and it cost a pilot his job and pushed him into a new career that makes him miserable.