What Is Going On With The Boeing 737 Max 8?

The news is dominated right now by the grounding of the Boeing 737 Max 8 after a second fatal crash of a passenger jet, which could be related to the onboard systems. There’s a LOT of questionable information out there and many of the explanations presume a certain level of aviation knowledge. I’d like to cover some of the basic issues to the best of my abilities and to invite you to comment below, either with questions or with answers, to help unravel this mess into its key parts.

First of all, let’s take a brief look at the two crashes.

Lion Air flight 610 crashed on the 29th of October 2018 shortly after departing Jakarta for a passenger flight to Pangkal Pinang with eight crew and 181 passengers on board. The preliminary report was released a month later and I summarised the sequence of events here. The key points at the moment are that the aircraft suffered from a faulty Angle of Attack (AoA) sensor and the erroneous AoA data triggered a system known as MCAS, the Maneuvering Characteristics Augmentation System, to command a nose-down stabilizer trim. We’ll get into this more in a moment, but the important point here is that the AoA sensor supplying bad air data and triggering the MCAS to pitch the aircraft down has already been established. Exactly what else went wrong during that flight is not yet clear and the investigation is still ongoing.

Ethiopian Airlines flight 302 crashed on the 10th of March 2019 shortly after departing Addis Ababa for a passenger flight to Nairobi with eight crew and 149 passengers on board. There were immediate concerns that the flight shared similarities with the Lion Air flight 610 crash five months earlier.

In both cases, the aircraft was a Boeing 737 MAX 8, a type which has only been in operation since May 2017. Both fatal crashes took place shortly after take-off in clear weather during daylight hours: conditions in which, for most aircraft faults, it should be easy to fly manually back to the airport. Both aircraft seemed to struggle during the climb and never reached their assigned altitude.

In both cases, the crew reported a problem to ATC and requested an immediate return to the departure airport. Both of the aircraft had pitch control issues: Lion Air flight 610 descended and climbed repeatedly before crashing, and preliminary data shows that Ethiopian Airlines flight 302 may have done the same.

The Lion Air aircraft had a faulty AoA sensor which was causing bad data and the Flight Data Recorder (FDR) showed false airspeed indications; the Ethiopian crew reported unreliable airspeed indications shortly before the crash. In both instances, the crew should have been able to manually fly the plane back to the airport (and in both instances they stated their intention to return) but were unable to understand the issue or unable to regain control of the aircraft. Both crashes were ‘high energy’ which means that the aircraft was flying towards the ground at high speed.

I’d like to focus on the first factor, the Boeing 737 MAX 8, to understand how we got to where we are.

The Boeing 737 was originally designed in the 1960s as a short-range twin-engine lower-cost alternative to the mid-sized 707 and 727 airliners. The 737-100 entered the market targeting airlines with short-haul routes from 50 to 1,000 miles, seating just 50-60 passengers. Over time, the 737 design was revised to allow for an increased range and passenger capacity, including larger engines and a longer (“stretched”) fuselage, while remaining economical. These designs, up to the -500 series, are now known as the Classic 737.

In 1991, Boeing shifted their development to the 737 NG (Next Generation) to compete with the Airbus A320. The wing was redesigned, with the area increased by 25%, the engines were upgraded, and the range was increased to over 3,000 nautical miles, which meant that the 737 was now a transcontinental aircraft. The 737 NG includes the -600, -700 and -800* series.

*And the -900, of course. My mistake!

In 2011, Boeing announced a new 737 version using the CFM International LEAP-X engine and offering various aerodynamic improvements and modifications. This variant, the 737 MAX, made its first flight in January 2016, and the first delivery, a MAX 8, followed in 2017. A feature of the Boeing 737 MAX aircraft is the Maneuvering Characteristics Augmentation System (MCAS), which is intended to prevent stalls.

Automation to prevent a stall is not a new concept. Airbus introduced computational modes, known as flight control laws, in the Airbus A320 in 1988 to determine the operational mode of the aircraft and ensure that aircraft limits were not exceeded (see also Air France flight 296). Boeing followed suit with the Boeing 777 in 1995. However, in this particular case, the MCAS is not just there to assist the pilot but is required by the 737 MAX design. The engines are larger (and more fuel efficient) and needed to be moved slightly forward and higher up to keep them out of the way of the landing gear. The stretched aircraft with the repositioned engines and extended nose gear had much better fuel efficiency, but the modifications also changed the handling characteristics. Specifically, the new 737 MAX showed a tendency to pitch up, and so the MCAS ensured that if the Angle of Attack was too high, the nose would pitch down gently, supporting the pilots in avoiding a stall. What’s new about this scenario is that we have a commercial aircraft whose hardware design requires a software fix to keep the aircraft stabilised.

The Boeing 737 Technical Site maintained by Chris Brady perhaps explains this better:

MCAS was introduced to counteract the pitch up effect of the LEAP-1B engines at high AoA. The engines were both larger and relocated slightly up and forward from the previous NG CFM56-7 engines to accommodate their larger diameter. This new location and size of the nacelle causes it to produce lift at high AoA; as the nacelle is ahead of the CofG this causes a pitch-up effect which could in turn further increase the AoA and send the aircraft closer towards the stall. MCAS was therefore introduced to give an automatic nose down stabilizer input during steep turns with elevated load factors (high AoA) and during flaps up flight at airspeeds approaching stall.
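A simplified way to write down the effect described in that quote, using my own shorthand rather than anything from Brady's site: if the nacelles generate extra lift $L_{\text{nacelle}}$ that grows with angle of attack $\alpha$, and that lift acts a distance $d$ ahead of the CofG, the additional nose-up pitching moment is

$$
\Delta M_{\text{nose-up}} = L_{\text{nacelle}}(\alpha)\, d, \qquad \frac{dL_{\text{nacelle}}}{d\alpha} > 0
$$

All else being equal, a higher $\alpha$ produces a larger nose-up moment, which in turn raises $\alpha$ further. That positive feedback is what the MCAS nose-down stabiliser input is meant to counteract.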

The key thing to understand is that if the angle of attack becomes too high (the critical angle of attack is exceeded) then the aircraft is at risk of stalling, which means that the wing no longer produces enough lift to continue flight. In any aircraft, the pilot’s default response to an impending stall is to pitch the nose down and add thrust. In both Airbus and Boeing aircraft, there are automatic systems which do this for the pilot in order to ensure that the aircraft does not enter a dangerous stall.

For the MCAS to activate, the following must be true:

- The Angle of Attack (AoA) as read by the sensor exceeds a specific parameter (specifically, the sensor shows a high AoA which means that the aircraft is approaching a stall condition)

- The flaps are fully retracted (which means that the aircraft is not/is no longer in take-off or landing configuration)

- The aircraft is being flown manually (the autopilot is not engaged)

If these three things are true, then the MCAS will trim the aircraft stabiliser nose down for a maximum of 2.5°. The nose down stabiliser trim movement will last up to 10 seconds. It can also be interrupted by the flight crew by using the electric stabiliser trim switches, which means that if a pilot manually changes the trim of the stabiliser, MCAS will stop attempting to control it.
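The three activation conditions and the single-activation limit can be written as a small predicate. This is a minimal Python sketch for illustration, not Boeing's code: the names are hypothetical, the 15° trigger threshold is an assumed placeholder (the real value isn't given here), and the 10-second duration of the trim movement is not modelled.

```python
from dataclasses import dataclass

# Hypothetical value: the actual AoA trigger threshold is not given in this article.
AOA_THRESHOLD_DEG = 15.0
MCAS_INCREMENT_DEG = 2.5  # maximum nose-down stabiliser trim per activation (from the text)


@dataclass
class FlightState:
    aoa_deg: float           # AoA as read by the sensor feeding MCAS
    flaps_retracted: bool
    autopilot_engaged: bool


def mcas_should_activate(state: FlightState) -> bool:
    """All three listed conditions must be true at the same time."""
    return (
        state.aoa_deg > AOA_THRESHOLD_DEG
        and state.flaps_retracted
        and not state.autopilot_engaged
    )


def mcas_trim_command(state: FlightState) -> float:
    """Nose-down stabiliser trim (degrees) requested by a single activation."""
    return MCAS_INCREMENT_DEG if mcas_should_activate(state) else 0.0


# A faulty sensor reporting a high AoA during a normal, hand-flown, flaps-up climb:
print(mcas_trim_command(FlightState(aoa_deg=22.0, flaps_retracted=True, autopilot_engaged=False)))
```

Note that nothing in the predicate asks whether the AoA reading is plausible: a single bad sensor value satisfies the first condition just as well as a real approach to the stall.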

The MCAS will not continue to increment the stabiliser trim under normal operation. However, if the MCAS has been reset (for example, by a manual electric trim command) and the AoA is still high after five seconds without further pilot trim input, it will activate again. The MCAS can and will continue to pitch the nose down in increments of up to 2.5° for as long as the high AoA reading persists. In this way, it is possible for the stabilizer to reach the nose-down limit, pitching the aircraft down as steeply as it can.

In the case of erroneous AoA data, as has been confirmed in the Lion Air flight, the STAB TRIM CUTOUT switches must be moved to CUTOUT. If the pilots continue to only use the electric stabiliser trim switches to counteract the unwanted trim inputs, they are simply resetting the MCAS, which will activate again in five seconds if the false AoA data continues to show that the aircraft is in danger of stalling.
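To see why repeatedly using the electric trim switches is not enough, here is a toy Python model of the reset cycle described above. It is a sketch under loud assumptions: the 10° nose-down stop and the crew's 1.5° of counter-trim per cycle are invented round numbers, not 737 MAX figures, and manual trim-wheel inputs after cutout are not modelled.

```python
from typing import List, Optional

# Hypothetical value: the real stabiliser nose-down limit is not given in this article.
NOSE_DOWN_LIMIT_DEG = 10.0
MCAS_INCREMENT_DEG = 2.5  # per activation, from the text


def simulate(cycles: int, pilot_counter_deg: float,
             cutout_at: Optional[int] = None) -> List[float]:
    """Return the nose-down trim reached at the end of each MCAS activation.

    Each cycle, MCAS trims nose down by up to 2.5 deg (clamped at the stop).
    The crew then trims back up with the electric trim switches by
    pilot_counter_deg, which resets MCAS so that it fires again about
    5 seconds later while the false AoA reading persists.
    From cycle `cutout_at` onward the STAB TRIM CUTOUT switches are at
    CUTOUT, so MCAS can no longer move the stabiliser.
    """
    trim = 0.0
    peaks = []
    for cycle in range(cycles):
        if cutout_at is not None and cycle >= cutout_at:
            peaks.append(trim)  # MCAS disconnected: trim stays where it is
            continue
        trim = min(trim + MCAS_INCREMENT_DEG, NOSE_DOWN_LIMIT_DEG)
        peaks.append(trim)
        trim = max(trim - pilot_counter_deg, 0.0)  # crew's electric counter-trim
    return peaks


print(simulate(9, 1.5))
print(simulate(9, 1.5, cutout_at=2))
```

With these assumed numbers, `simulate(9, 1.5)` climbs 2.5, 3.5, 4.5 and so on, reaching the 10.0 stop on the ninth activation, because each counter-trim is smaller than the MCAS input it is answering. With the cutout switches used after two cycles, the trim peaks at 3.5 and then holds at 2.0.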

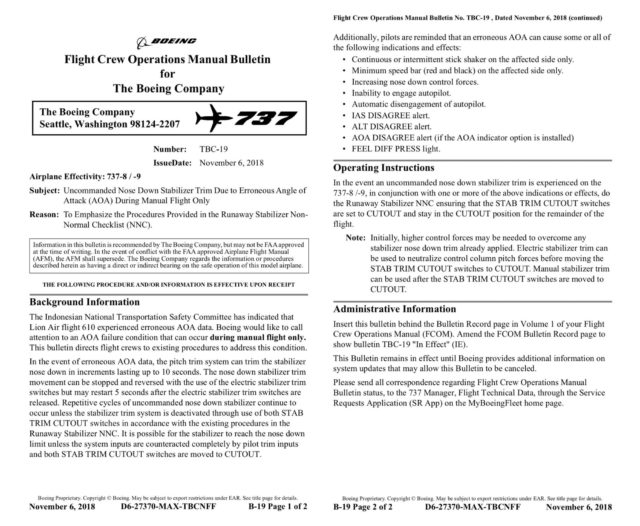

This information was released as an urgent bulletin to all 737-8 and 737-9 pilots after the Lion Air crash.

And that’s a core political issue here: although the procedure was not new, pilots of the 737 MAX aircraft did not know about the Maneuvering Characteristics Augmentation System or that it was a required aspect for the certification of the 737 MAX-8. This was a decision taken by Boeing on the basis of not inundating pilots with unnecessary technical details, as they saw MCAS as being an “under the hood” issue that they didn’t need to understand for safe operation of the aircraft.

Now many pilots feel very strongly that the system should have been covered in training and the operations manual and this is an ongoing discussion. However, the truth is that under normal operation, the MCAS is not doing anything particularly unexpected.

Even under extreme circumstances, for example if a faulty AoA sensor is showing a high AoA when the aircraft is climbing normally, it should be relatively easy to deal with, especially in clear conditions in sight of the ground. The aircraft begins to pitch down, the MCAS input is countermanded by the flight crew and the STAB TRIM CUTOUT switches are moved to CUTOUT in order to disable further interference. Theoretically, these reactions needed no additional training as they were already listed in the aircraft’s Runaway Stabilizer Non-Normal Checklist.

However, it’s clear that in the case of the Lion Air flight 610 crash, things were not as straightforward as they should have been. The AoA sensor was faulty and this triggered the MCAS to initiate a nose-down attitude on the aircraft. As far as we can see, the MCAS repeatedly trimmed the aircraft nose-down and the flight crew were not able to regain control of the aircraft. It is still not clear how and why it went so far wrong.

Last week’s Ethiopian crash shares some of the same traits: bad air data and an apparent struggle to control the pitch of the aircraft. In this case, the flight crew would have known about the Lion Air crash and understood exactly how the MCAS trim inputs could be disabled. And yet, again, the flight crew lost control of the aircraft and crashed into the ground.

As of the 14th of March, the Boeing 737 MAX 8 and MAX 9 aircraft have been grounded, which in itself is an unexpected result at this stage of the investigations. Many aviation authorities around the world grounded the aircraft before the two accidents had been definitively linked and gave no justification for the grounding, in a move that has been referred to as primarily a response to social media. However, that initial response has since been justified as yesterday, the FAA announced that there is new evidence which does link the two crashes, resulting in an emergency order of prohibition against the operation of Boeing Company Model 737-8 and 737-9 by US certificated operators.

The former NTSB chairman, Christopher Hart, spoke to CNBC about this decision.

This is uncharted territory. We have never before in the US had a grounding for any reason other than a catastrophic mechanical failure that the pilot could not fix in the moment. The two groundings that I’m thinking of were the DC-10 in Chicago when the engines separated from the wing in 1979 and the Boeing 787 in 2013 when they were having lithium ion battery fires. Those are events that are disabling to the airplane, there’s nothing the pilot can do about it in the moment, and those were obvious reasons for grounding.

The point he is making is that this is a failure the pilot should be able to fix in the moment: there have been multiple instances where the Boeing 737 MAX automation failed and the flight crew responded to the problem correctly and landed the aircraft without further issue, including the Lion Air flight into Jakarta before the flight 610 crash. However, there is no real data on how often this has happened, and it is hard to believe that a competent flight crew in clear conditions would not be able to disable the trim and return to the airport. Until we have a better understanding of what happened in both of these crashes, it is very difficult to judge whether grounding the aircraft was necessary.

The current situation obviously has the attention of airlines and aviation authorities around the world so I would expect updates on both crash investigations in the near future. Hopefully the reading of the cockpit voice recorder and the flight data recorder can help to shed some light on the Ethiopian crash quickly.

Generally the comments are a good place for robust discussion but I want to remind everyone that this is something of a political football and an emotional situation for many people and so perhaps a bit of care is needed when discussing the rights and wrongs of the airline, the flight crew and the aviation authorities.

“we have a commercial aircraft whose hardware design requires a software fix to keep the aircraft stabilised”

Isn’t that where it all started going wrong?

RIP to all.

That’s a key question, isn’t it… But there are a few aspects here, including: if the AoA sensors disagree, is that logged on the FDR? Because if so, then shouldn’t it be highlighted in the cockpit as well?
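The cross-check being asked about is simple to express in software. A minimal Python sketch, assuming two sensors sampled once per second; the 10° difference and 10-second persistence thresholds are illustrative assumptions, not figures from this article.

```python
from typing import Sequence

# Illustrative thresholds (assumptions, not taken from this article).
DISAGREE_DEG = 10.0
DISAGREE_SECONDS = 10.0


def aoa_disagree(left: Sequence[float], right: Sequence[float],
                 sample_period_s: float = 1.0) -> bool:
    """Return True once the left and right AoA readings have differed by more
    than DISAGREE_DEG continuously for at least DISAGREE_SECONDS."""
    run_s = 0.0
    for left_deg, right_deg in zip(left, right):
        if abs(left_deg - right_deg) > DISAGREE_DEG:
            run_s += sample_period_s
            if run_s >= DISAGREE_SECONDS:
                return True
        else:
            run_s = 0.0  # readings agree again: restart the timer
    return False


# One vane stuck about 20 degrees high for 15 seconds:
print(aoa_disagree([5.0] * 15, [25.0] * 15))
```

The persistence requirement keeps a brief gust or transient from raising the alert; the same boolean could just as easily be shown to the crew as written to a recorder.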

I’m slightly uncomfortable with the idea that a system like MCAS is required for the aircraft to be certified in the first place. And if the response to unexpected behaviour of the system is to pull a circuit breaker to disable it, where does that then leave you, manually flying your unstable airframe with part of its necessary software disabled? Not only that, I would expect issues with such a system that require it to be disabled to be few and far between, yet in the space of a few months there are at least four cases that I’m aware of (assuming ET302 turns out to be MCAS-related). It feels horribly like someone has dropped the ball on this.

I think this is Paul’s point as well. On the other hand, developing a new aircraft has its own risks and it would seem to be safer to stick to modifying one that has been tested inside and out with a minor change that we know works (automatically trimming down as a response to AoA). There’s clearly some sort of interaction happening that they had not considered or tested for and I think (I hope!) that the focus of both investigations will be on how the situation has arisen in the first place.

I’m not a pilot, rather a software engineer who has worked on a number of safety critical systems. As part of trying to do this right I’ve studied a wide range of control system failures, from the Therac-25 radiation therapy unit to Chernobyl. As with many air crashes, system design rather than operator error is often the critical element.

For a full understanding we’ll need the final report, but having looked at the interim report on the Lion Air crash https://reports.aviation-safety.net/2018/20181029-0_B38M_PK-LQP_PRELIMINARY.pdf it seems pretty clear that the MCAS system has some very unattractive characteristics.

If you look on p14 of the report, you can see the repeated automatic trim downs, followed by manual trim ups. This then apparently resets the 2.5deg limit on auto-trim, and allows another automatic trim down…

Because the pilots never disable the system (reasons not currently known), the repeated auto trim down accumulates enough trim down that after 7 minutes it exceeds the range which can be compensated for via up elevator. At that point the plane enters an uncontrolled dive.

Clearly the input design is poor (taking this kind of action on just one AoA sensor, rather than a vote on three separate channels), and an explicit audible or visual warning of excessive trim down would be helpful, but the central problem appears to be that while single trim adjustments have a 2.5deg limit, this limit is reset on any manual adjustment, and can then accumulate without limit.
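The fix suggested here, an overall limit that manual trim does not reset, might look something like this. It is a hypothetical Python sketch of the idea in this comment, not a description of how Boeing actually changed MCAS; the class and method names are my own.

```python
class LimitedMcas:
    """Hypothetical MCAS variant with a total authority limit.

    Manual trim inputs do NOT refresh the limit; only the AoA returning
    below the activation threshold does.
    """

    def __init__(self, total_limit_deg: float = 2.5) -> None:
        self.total_limit_deg = total_limit_deg
        self.used_deg = 0.0  # nose-down trim applied since AoA was last normal

    def activate(self, requested_deg: float = 2.5) -> float:
        """Return the nose-down trim actually applied for this activation."""
        remaining = max(self.total_limit_deg - self.used_deg, 0.0)
        applied = min(requested_deg, remaining)
        self.used_deg += applied
        return applied

    def manual_trim(self, deg: float) -> None:
        """Pilot trim input: deliberately leaves used_deg unchanged."""

    def aoa_back_to_normal(self) -> None:
        self.used_deg = 0.0


mcas = LimitedMcas()
print(mcas.activate())   # first activation gets the full 2.5
mcas.manual_trim(2.0)
print(mcas.activate())   # nothing left: the crew's trim did not reset the budget
```

With a sensor stuck high, `aoa_back_to_normal()` is never called, so this variant gets one 2.5° input and no more, rather than the step-by-step accumulation seen in the report.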

It is very hard to see why it can be ever justified to auto-trim to the hard stops, exceeding the elevator control. This kind of behaviour seems very suggestive of late changes without sufficient design consideration…

It would also be very interesting to know the point at which the MCAS function was added. Having two AoA sensors makes perfect sense if they were only originally intended to provide warning/info for the two flying seats. If they had originally been intended for control purposes, you would have expected 3 with voting. It suggests that attaching the MCAS to the AoA for control may have been a late addition, where they didn’t want to undertake the redesign required to include a third sensor…

Colin, this is really useful, thank you very much for that breakdown which explains the accumulated 2.5° adjustments.

I don’t know how to find out the point at which the MCAS function was added, other than “before it could be certified” but someone has looked into the AoA sensors and confirmed they have the same part number as for the 737 NG, so there’s not a change or higher failure mode there.

I’m pretty certain the “standard” part was used, but what it was used for might well have changed.

Originally it might have just been for warning generation, where two sensors, one for each seat would be fine. No real problem if one generates a stall warning and one doesn’t.

Once the automated MCAS behaviour was hooked in, the right thing to do would be add a third sensor, have all three sensors handled via independent channels, and then have a vote at the end to decide which two to trust. It looks plausible (I’m speculating) that the MCAS automatic trim was added late in the day, and rather than add additional AoA sensors, they just decided to tie into one.
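A minimal Python sketch of the three-channel vote described here. It assumes three independent AoA sensors (the aircraft as described has only two) and an illustrative 5° agreement tolerance. Taking the median is one common way to implement a 2-out-of-3 vote, since a single wild channel can never be the median.

```python
from typing import Optional, Sequence

# Illustrative tolerance (assumption): how closely two channels must agree.
AGREE_TOLERANCE_DEG = 5.0


def voted_aoa(readings: Sequence[float]) -> Optional[float]:
    """2-out-of-3 vote over three independent AoA channels.

    Returns the median reading if at least two channels agree within
    AGREE_TOLERANCE_DEG, otherwise None (no trustworthy value, so an
    automatic system relying on it should stay inactive).
    """
    if len(readings) != 3:
        raise ValueError("expected exactly three channels")
    low, mid, high = sorted(readings)
    if mid - low > AGREE_TOLERANCE_DEG and high - mid > AGREE_TOLERANCE_DEG:
        return None  # no two channels agree
    return mid


print(voted_aoa([4.0, 40.0, 5.0]))   # one vane failed high: it is outvoted
print(voted_aoa([0.0, 20.0, 40.0]))  # no majority: inhibit automatic trim
```

Returning `None` rather than a best guess is the design choice that matters: when the sensors cannot agree, the safe automatic action is no action.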

The consequence of an AoA sensor failure then goes from a spurious warning, to a situation where if the correct action is not quickly taken, the plane is heading for the ground… This is made much worse by the lack of an overall limit on the amount of trim down.

You might also question the decision to add the input via trim, when in reality the system is trying to override the pilot. If the input had been added via force on the stick/yoke, then normal input would be the MCAS trying to pull the stick forward (does this happen in other cases?), and “override” would simply be the pilot pulling back harder.

Instead, by adding input via repeated trim commands, I assume (pilots please tell me) what would normally be a very occasional action (manually altering trim) becomes a primary flight input, and to override it you have to totally disable the electronic trim, and then manually compensate for whatever trim has been added to date.

For an error path this seems dangerously complex. I’ve seen comments about existing procedures for “runaway trim”, but I assume this would typically be a one-off hard error, rather than the system repeatedly overriding pilot input?

Are there any other commercial aircraft that have relaxed stability? Is the inclusion of the MCAS extraordinary?

Jerry, if I’m understanding your question correctly, you mean are there other commercial aircraft which require this kind of software fix in order for the aircraft to be certified. As far as I know, the 737 MAX is the only one but I’m not sure how to find out for sure.

Ok, I am old and have only ever had a sailplane license. But I have read all of Sylvia’s books and many others on accident investigation. I am not at all an expert but I have picked up a few things. Should we not be punting basic airmanship? If a system fails, hit the big red button and hand fly the aircraft. Use backup instruments, if necessary. Analogue ones, with little circular dials. Independent of those controlled by software. Am I being naive in today’s aircraft environment? I think of the Air France accident where the pilots had no clue as to what was going on. More training on systems is clearly essential but maybe more on basic airmanship. Feel free to shoot me down!

In the Air France 447 accident, the pitots were blocked. The pilots thought they had airspeed when they were in a stall. An extra blocked pitot driving an analogue air speed indicator would have made no difference.

The problem is that we don’t know what was happening in the cockpit or what symptoms the flight crew were seeing. It’s not just that you have to hit a big red button; you have to work out *which* of the big red buttons will make things better and which might make things worse. If they could have gotten the autopilot on, for example, the MCAS would have been disabled…but you can’t put the autopilot on while you are pulling back on the controls trying to work out why your aircraft just pitched nose down. And until recently, there were no AoA sensors at all, so there’s no analogue equivalent that you can use. If you look at Aeroperu 603, which I’m sure is around here somewhere, you can see just how quickly things can become critical if you don’t do the right thing: if there’s faulty airspeed data, then making sense of what is true (in the dark, over the sea) is very difficult unless you *really* know your plane and all its quirks.

So yes, there is almost certainly an issue with training and airmanship in these two incidents, but it’s difficult to gauge how serious a lapse there was without understanding what appeared to them to be happening.

(in the recent case, I’m wondering about a flaps up landing, for example, but until more data is released, I can’t justify that other than the initial emergency call happened below 1,000 feet and if it was caused by the MCAS trimming, then the flaps must have been up)

I am struggling with this one. I have never flown the 737, let alone the Max.

Colin seems to be on the right track. Isn’t this telling: a software engineer (and no, I do not intend this to be in any way patronising or derogatory) can make some sense of this where people with flying experience cannot?

The 737, as Sylvia explained, started life as an aircraft in the era when the DC9, the Caravelle and the BAC 1-11 were still mainstream and the Trident at the pinnacle of advanced technology.

The Caravelle has made way for other more modern aircraft, and the current replacement is in fact the Airbus A320, a totally new concept.

The DC 9 ended its life as the Boeing 717.

The 1-11 and Trident have been withdrawn. I don’t know if any 1-11 are still around, probably not.

The 737 continued. I just wonder: has it been overdeveloped?

The amount of artificial gizmos that keep the type alive seems excessive.

Add to that the fact that in modern aircraft the systems need more and more attention and if something does go wrong that is not properly understood the crew do not have the basic flying skills. “Seat-of-the-pants” flying is becoming increasingly secondary to being systems managers. But in some cases the systems can overwhelm the pilots. Especially when they give conflicting information the crew can get into “overload” mode.

AoA information in my time was an additional source of information, not something that could take over control from the pilots when it was malfunctioning. That would defeat the purpose.

In the ’70s pilots called the DC7 “the best three-engined aircraft”. The engines were massive: radials with many banks of cylinders and a “turbo-compound” system that could feed power from the turbochargers back to the drive shaft. An over-developed piece of highly advanced engineering that, because of its complexity, was no longer as reliable as previous generations.

I just wonder if there is a parallel with the 737 max? A great piece of engineering that has been developed beyond reasonable limits?

Much of good software design is about handling error cases, but for safety critical software it is central.

With something like MCAS you are trying to “improve” on the pilot’s skill in a deliberately “transparent” a.k.a. “hidden” fashion.

To do this without being upfront in the manuals and training seems disingenuous, while not providing redundant sensor data, clear warnings, or sensible limits may well have cost the lives of hundreds of people.

I loved working on the cars of my childhood, where everything was mechanical and I stood a fair chance of fixing them. Today’s computer-controlled cars are, however, more reliable, more fuel efficient and, for most people, much easier to drive. The downside is that I have to have an interface pod and a piece of special software to figure out why the car goes into limp-home mode with an engine warning light on. I can cope with that, but for 99% of people it has closed off access to serious car maintenance. I even have to recode the system to fit a new radio!

I suspect most computerization to date has helped improve safety, but as we stray into second-guessing pilots, we as software engineers have to be much more careful and much less arrogant. I think the 1988 Airbus airshow crash may have started this trail of software accidents, but sadly there may be many more to come….

Colin,

I am not so certain about a few things you bring up here.

“Easier”? Maybe as long as nothing goes wrong. And yes, when the systems do their job, and the back-up gizmos give the correct information then indeed the job will be easy. Like, if an engine or system fails the pilots will be presented with the problems one by one, in order of urgency.

It seems to me that the MCAS system, however, has the potential of presenting the pilots with conflicting input and even can override the pilots.

This is not something that will be solved in a hurry. There are too many complexities involved.

The A320 incident in 1988 was not of the same order. One of my former co-pilots had been converted to the Airbus, and of course these pilots were given the entire scenario in order to understand what happened, and of course to prevent it from ever happening again.

The aircraft was in fact fully serviceable, it did exactly what it had been programmed to do. Certain parameters are part of the configuration, according with what Airbus refers to as “laws”. The electronics will compare what is happening and the pilots’ demands with the parameters. Anything that falls outside will be outside of the “law” and the aircraft’s electronics will set a protective envelope to ensure that everything will stay that way. Of course, the law will change in case of an abnormal or emergency situation.

Simply: The crew were experienced pilots but had only recently converted from the B737 to the A320. After all, it had only recently been introduced.

They were meant to do a fly-past with gear up and flaps retracted. If the pilots then pull the sidestick all the way back and bring the power to idle, the aircraft will assume a ridiculous-looking pitch-up attitude but will not descend below, I think, 1000 feet AGL, and the engine power will automatically increase to keep the aircraft flying at 1000 feet without stalling.

Only, the crew were not totally familiar with the airport. Airshows have very precise time slots. The aircraft came in a bit too quickly. In order to slow it down the crew lowered the wheels. I can’t remember if they extended flaps as well. But by doing so, they changed the “law”. Wheels down equated to permission to descend below 1000 feet. With the engines at idle or very low power, the high angle of attack meant high drag. By the time the crew realised their error, the engines first needed time to spool up, then more speed was needed to climb out of danger. By that time it was too late.

Insofar as I understood it, there was nothing wrong with the aircraft. It did what the crew programmed it to do.

But I admit that I am trying to parrot what an A320 pilot told me.

I did a few sightseeing flights with the Fokker 50. A similar protection was built into that type. We cruised at 1000 feet. If we descended below it, in “clean” configuration, a horn would sound. OK, the aircraft did not have systems that would take over but still…

By easier, I meant that some functions (better autopilots, automated landing systems for limited visibility, etc.) have improved the overall ease of flying, in a similar way that ABS braking and navigation systems have improved driving.

I 100% agree that where you move into much more difficult territory is when the computers start overriding pilots (such as here), rather than doing specific requested tasks.

As for the A320 crash, it was simply the first one that struck me as having computer control as a key element. That was just as a non-pilot layperson, and I 100% agree that this case is in many ways more serious.

I am a retired software engineer; I never worked on programs that controlled real-world mechanical devices, but everything colintd says makes sense — particularly the majority-of-three design, which is established enough that it should be easy to code properly. (IIRC the Apollo Lunar Lander actually had FIVE computers caucusing during the landing.) I also have far too many bitter experiences with quick “fixes” for problems to trust the work of any one software engineer; I would hope that Boeing developed this real-world-critical application with a committee containing both software engineers to propose designs and QA engineers to poke holes in them. Even better would be a committee of people who have a serious grounding in the world that the software will be applied in; I wonder whether Boeing encourages their software people to become pilots, or instead treats embedded software as so difficult/specialized that its writers and testers aren’t expected to have experience in the devices they’re developing for.

I do wonder about the certification process for the Max 8; patching a hardware problem with software strikes me not just as dodgy in principle but as something that aircraft certification personnel may not be qualified to judge — if they were even allowed to look at the code. I also wonder about whether the certifiers should have required that the permitted CG range be changed to reduce the problem (wrt the Chris Brady quote), but I don’t know whether it would have helped, whether it was possible, whether it would have reduced the efficiency — or even affected the handling — of the plane in more typical flight.

One factor I don’t see discussed here: I recall reports in the mundane press that the pilots of the Lion Air plane had previously reported pitch issues that did not result in repair orders (possibly on a previous flight that day). Did anyone else see such reports? It’s easy to say “Well, those were third-world airlines with shoddy maintenance standards” — but given today’s economic drives, I wonder how many first-world airlines would have taken a plane out of service based just on pilot reports, especially of something that seemed less critical. (i.e., if the oscillations had injured passengers the airline might have been more responsive — or it might just have grounded the pilots and assumed that the aircraft was good to fly).

“The 737 NG includes the -600, -700 and -800 series.”

There’s also the -900, isn’t there?

Er, yes. Oops? I think I just got tired of listing numbers by then. Thanks for that, I’ve fixed the main text.

Yes, all the numbers do get confusing. As a last one perhaps: I heard an expert assert that pilots converting to the “Max” did not get anything about the MCAS in their training. Is the “Max” just covered by the Boeing 737 type rating, requiring only some differences training? When Fokker introduced the Fokker 50, it was originally a new variant of the F27, called, if I remember well, the F27-50 or something like that. It was decided that there were substantial differences between the two: engines, systems, engine and propeller management and quite a few more. By officially changing the designation to “Fokker 50” it became a new type and required a separate type rating, which in turn meant full type-rating training. I don’t know if and how the progressive development of the 737 kept proper pace with training crews for the very substantial changes that have been introduced since the -100. Remember the Kegworth crash? The pilots were relatively new to the CFM engines and were fixated on a vibration indicator that was different and did not carry the same level of urgency as in the older model with low-bypass engines.

Anyway, it seems that the CVR proved that the pilots were busy trying to find a solution for the MCAS giving unwanted pitch-down inputs. Apparently they were leafing through checklists and manuals. The CVR of the Lion Air flight has not yet been found, so it is quite possible that these pilots too were desperately looking for a clue in the emergency checklist.

Yes, the 737max is grandfathered in on the old 737 rating, requiring only a differences training. That is basically the reason for the MCAS: the desire for Boeing to keep it flyable by 737-rated pilots, leveraging airline lock-in, and avoiding the years of engineering it would take to get a new rating for the plane, in the face of Airbus competition.

The 737max received bigger engines, mounted further forward to meet ground clearance restrictions. That gives them more leverage in a pitch-up situation: the air pushing on the engine nacelles from below adds more lift forward of the center of gravity than on previous models. This means that the point at which the plane keeps pitching up after the pilot lets go of the stick arrives earlier on the Max than on the NG, and the angle at which the plane keeps pitching up even with the yoke full forward is also lower: this happens more quickly after the stall warning.

So Boeing compromised: they added MCAS to automatically trim the plane against this extra lift, reasoning that this system means pilots don’t have to consider this aerodynamic difference, and therefore they can still use their 1960s 737 type rating on this modern plane, and the FAA consented. For the same reason, the AOA disagree alert is optional, because if it were mandatory, that would be admitting that the plane had changed too much from the original 737 to be certifiable on the old rating.

This compromise is the reason we’re seeing backlash against the FAA right now.

Very well put and explains nicely how this situation arose. Thanks for taking the time to write it up.

Look at the bulletin and the list of symptoms that can occur in this scenario, including “IAS disagree alert”. You write, “the Ethiopian crew reported unreliable airspeed indications shortly before the crash”. So, the stick shaker is rattling, you think your airplane is about to stall, you apply power to pick up speed, and miss the trim wheel ratcheting the nose down because the one alert that would help you, the “AOA disagree alert”, is not installed on your plane.
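To make the missing alert concrete, here is a minimal Python sketch of what a disagree check between the two AoA vanes might look like. It is an illustration only, not Boeing's implementation: the function name is hypothetical, the input is assumed to be one reading per second from each vane, and the 10-degree threshold and 10-sample persistence window are assumptions chosen for the example, not certified values.

```python
from typing import Sequence


def aoa_disagree(
    left_deg: Sequence[float],
    right_deg: Sequence[float],
    threshold_deg: float = 10.0,  # assumed disagreement threshold, illustrative only
    persist_samples: int = 10,    # assumed persistence window (e.g. 10 samples at 1 Hz)
) -> bool:
    """Hypothetical check: True once the left and right AoA readings differ by
    more than threshold_deg for persist_samples consecutive samples."""
    consecutive = 0
    for left, right in zip(left_deg, right_deg):
        if abs(left - right) > threshold_deg:
            consecutive += 1
            if consecutive >= persist_samples:
                return True
        else:
            # Any sample where the vanes agree resets the persistence counter.
            consecutive = 0
    return False


# A faulty left vane reading about 20 degrees high (made-up numbers).
right_vane = [5.0] * 15
left_vane = [25.0] * 15
print(aoa_disagree(left_vane, right_vane))  # True
```

The persistence window is the design choice worth noticing: it keeps a momentary glitch from raising an alert, at the cost of a short delay before a genuine sensor failure is flagged to the crew.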

On the Lion Air plane, crews diagnosed this problem correctly four times, but not the fifth time. If this were a safe procedure, its success rate should be 100%. It’s a hole in the cheese, it shouldn’t be there, and to say “the crew should catch it” means that when the crew is fatigued or mismanaged or anything else goes wrong, the holes line up and the failure occurs.
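The cheese argument can be put in rough numbers. Here is a minimal Python sketch, assuming each occurrence of the fault is an independent event that the crew catches with a fixed probability. That is a big simplification, since real crews, fatigue and conditions all vary, and using four catches out of five as the catch rate is only a crude estimate from a tiny sample. The point it illustrates is that anything short of a 100% catch rate turns into a near-certain miss once the fault recurs often enough.

```python
def chance_of_at_least_one_miss(catch_rate: float, encounters: int) -> float:
    """Probability that crews fail to catch the fault at least once in
    `encounters` independent occurrences, each caught with probability catch_rate."""
    if not 0.0 <= catch_rate <= 1.0:
        raise ValueError("catch_rate must be between 0 and 1")
    # Every encounter must be caught to avoid a miss: catch_rate ** encounters.
    return 1.0 - catch_rate ** encounters


# Four catches out of five, as quoted above, used as a crude per-encounter estimate.
for n in (1, 5, 20):
    print(n, round(chance_of_at_least_one_miss(0.8, n), 3))
```

Only a catch rate of exactly 1.0 keeps the result at zero no matter how many encounters there are, which is the commenter's point about the hole not belonging there at all.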

And we don’t know that the electrical trim cutout switch even worked. Many aging software systems accumulate cruft as they are patched and patched again. That no bugs surface in testing doesn’t mean there aren’t any. We assume that the cutout switches worked because in every case where the MCAS activated erroneously and the plane didn’t crash, they did work, but what evidence do we have that the crews who crashed had working switches?

There’s still a lot of detail missing, and I’m very hopeful that by looking at Lion Air and Ethiopian as a pair, we’ll be able to see more about which specific holes lined up to lead to this, given that, as you point out above, knowing whether you can trust the AoA sensors has gone from ‘additional data’ to ‘critical information’. I don’t think we’ll ever get to a point of no holes in the cheese, but the disagree alert and knowledge of MCAS could well stop them from lining up.

I watched a YouTube video where they put pilots in a 737 Max simulator and recreated the faulty data that sent it into runaway trim mode.

It was horrifying! The trim wheel spun so quickly and the plane started to point straight down. You can see why, in the heat of the moment, it could cause panic.

To design an aircraft with an undesirable flight characteristic, increased pitch-up with the application of power, and then to “correct” this in software flies in the face of logic. I’ve worked in aviation and in IT, and the way of doing things is very different between these two fields. In IT, it’s not uncommon for there to be revisions as software is improved and lessons are learned from real-world experience. However, when software can interpret, and in some cases override, the control inputs of a pilot, “lessons learned” can involve lethal accidents.

Let’s start with the A-320 accident in France. While the plane did indeed interpret the pilot’s actions and prepare to land, as I recall it ignored the pilot’s immediate commands and settled into the ground while the pilots tried in vain to prevent the crash. The plane “protected” itself from a stall but, because FCCs (flight control computers) are in no sense of the word conscious, it didn’t realize that there was a much greater danger: landing off-field. Had the pilots been allowed to trade airspeed for altitude, there might have been time for the engines to spool up and the crash might have been averted.

That particular crash had so many things wrong in the planning that it sickens me. Hot-dogging a low flyby with a plane full of passengers is a bad idea on many levels. Performing such a maneuver with a disabled child (hence, unable to evacuate) on board is unconscionable. Still, for my sensibilities, this comes down to one question: who is in command of the aircraft?

Being licensed both as a Private Pilot and an A&P, I am familiar with the principle that one has to be willing to put their hide on the line. If I’m not willing to fly in an aircraft I returned to flight status, that doesn’t say much for my confidence in my own work. As a pilot, even a private pilot, I will be the first to arrive at the scene of any accident, so my hide is on the line. But what about an aircraft where software can override pilot commands? Should the development team be required to participate in the risk that results from programming decisions which have the ability to override pilot input?

At this point in the discussion, almost inevitably, the argument will be made that these systems are safer overall than non-automated systems, but I feel this misses the point. When it comes to my personal safety, I want human consciousness involved and not some algorithm which takes its input from a set of data predetermined by the programming team.

I cite QF72, where an Airbus made an uncommanded control input which injured a number of passengers and rendered the sidestick useless. The machine made an error and summed two responses, resulting in an excessive control movement. People were injured, among them a flight attendant who was badly hurt. For me, this comes back to the same question: who is flying the airplane?

So, when Boeing made the decision to modify the 737 airframe and use the MCAS system as a hedge against pitch-up when engine power was added, they took a major step into the middle of this issue. In my personal opinion, it was a step in the wrong direction. There are well over 300 lost lives which I will cite as testament to this conclusion. Boeing is not perfect, but they have an impressive track record and have outlasted numerous domestic competitors in the transport field. The 737 Max may put some serious dents in their armor, however, depending upon how this plays out over time. I have little doubt that the 737 Max will return to service, but the story doesn’t end there.

If there is one more crash where MCAS is implicated, it’s likely that it’s all over for that product. Orders will be cancelled and we could very well end up with the situation of the DC-10, where people refuse to fly on a type which has a reputation for being unsafe. OTOH, if Boeing were to choose to develop an entirely new airframe to replace the 737, they would effectively shut down sales in that segment until the new plane was ready to market.

The aviation industry, as a whole, faces a very real and intractable problem. Automation has definitely made things better in many ways, but it has also created an ethical dilemma with regard to the ultimate control of an aircraft. Who has the final word with regard to the flight controls: the FCC or the Pilot Flying? What should be done when the pilot commands a climb but the autothrottles decide to go to flight idle because they think the plane should land?

I just hope that the leaders of this industry are taking these matters very seriously.


The Max story may be thought of as sabotage carried out by concepts, by an understanding of human thought that surpasses our experience.

How could Boeing put a system on their Max that violates everything they had learned about building airplanes since the beginning of Boeing? It seems that every principle for safe flight is violated by MCAS. Then the CEO is telling us it’s safe when it obviously isn’t.

Pilot abuse by computer, death by software, and it’s not limited to Boeing. I’m inclined to say: late Boeing.