NTSB Summary: Assumptions Can Be Fatal

Yesterday the NTSB released a Safety Recommendation Report regarding the recent Boeing 737 MAX crashes and subsequent grounding of the aircraft.

I am particularly interested in this report because of two in-depth articles that came out recently. The New York Times published William Langewiesche’s What Really Brought Down the Boeing 737 Max. As an interesting bit of context, Langewiesche’s father wrote Stick and Rudder, considered one of the core books on piloting (and yes, I have a copy and I love it). As you might guess, the article swiftly absolves Boeing and instead blames a loss of basic airmanship among today’s pilots, which it attributes to automation.

Meanwhile, the New Republic released Maureen Tkacik’s How Boeing’s Managerial Revolution Created the 737 MAX Disaster. This piece focuses on Boeing’s management culture and the drive to please shareholders at the expense of its engineers’ advice.

Now honestly, both articles are interesting and both included a lot of detail and background that was new to me. But both of them also infuriated me with bad assumptions and highly questionable conclusions. Although the articles are, in many ways, polar opposites (one blaming airmanship and the other blaming the corporate world), I felt strongly that both authors had an axe to grind and glossed over details in order to present their cases. As always, it is very tempting to find someone to blame, but the reality of this situation is more complex than that.

I would recommend reading both articles, not just the one you expect to agree with, and seeing what you think. That didn’t seem like enough of an opinion to build a post around, so I hadn’t been planning to write about the articles.

However, I’m also seeing a lot of misinformation in discussions of these articles, where it is clear that people have not understood, or have forgotten, the basic details of the crashes, both in terms of the pilots’ actions and in terms of Boeing’s modifications to the 737 for the MAX. I’m grateful that the NTSB’s report gives me the opportunity to revisit the basics.

First of all, you can find all of the information released by the investigating bodies so far in the preliminary reports of the crashes.

Lion Air flight 610 crashed shortly after take-off on the 29th of October 2018, killing all passengers and crew. The preliminary report by the Komite Nasional Keselamatan Transportasi of the Republic of Indonesia is available here: https://reports.aviation-safety.net/2018/20181029-0_B38M_PK-LQP_PRELIMINARY.pdf.

Ethiopian Airlines flight 302 crashed shortly after take-off on the 10th of March, 2019, killing all passengers and crew. The preliminary report by the Ministry of Transport Aircraft Accident Investigation Bureau of the Federal Democratic Republic of Ethiopia is available here: http://www.ecaa.gov.et/Home/wp-content/uploads/2019/07/Preliminary-Report-B737-800MAX-ET-AVJ.pdf.

The NTSB is participating in both investigations as the Boeing 737 Max is designed and manufactured in the United States.

It is well worth the time to read both reports for all the details, but for the moment I just want to focus on the common ground of both accidents and how that relates to MCAS [Maneuvering Characteristics Augmentation System].

Lion Air flight 610 is particularly useful in this regard, as the aircraft showed the same fault on the flight before the crash, so we have an additional chance to look at the symptoms. On both flights, the digital flight data recorder recorded a difference of about 20° between the left and right AOA (angle of attack) sensors throughout the flight. In both cases, as the aircraft took off, the left control column stick shaker (that is, the captain’s side) activated. The left side indicated airspeed (IAS) and altitude (ALT) values were lower than those on the right side, leading to IAS DISAGREE and ALT DISAGREE alerts.

On the first flight, when the flight crew retracted the flaps, a ten-second automatic nose-down stabiliser trim input occurred. The crew countered it with nose-up electric trim. After the crew had repeatedly countered automatic nose-down stabiliser trim inputs with pilot-commanded nose-up electric trim, the captain moved the STAB TRIM CUTOUT switches to CUTOUT. When he returned the switches to NORMAL, the problem recurred. He moved the switches back to CUTOUT and the crew used manual trim until the end of the flight, at which point the captain reported the IAS DISAGREE and ALT DISAGREE alerts.

The following flight, with a different flight crew, had the same ten-second automatic nose-down stabiliser trim input after the flaps were retracted. The crew responded by using the stabiliser trim switches to apply nose-up electric trim. After the second automatic nose-down stabiliser trim input, the crew applied nose-up electric trim and extended the flaps. The flaps were retracted again and another automatic nose-down stabiliser trim input occurred, which the crew countered with nose-up electric trim. Over the next six minutes, more than twenty automatic nose-down stabiliser trim inputs occurred, with the crew countering each one with nose-up electric trim. The crew were unable to counter the final few nose-down inputs; they lost control and the aircraft crashed.

A few months later, Ethiopian Airlines flight 302 departed Addis Ababa Bole International Airport. As the aircraft took off, the left AOA sensor reading increased rapidly until it was 59° higher than the right AOA sensor. The left control column stick shaker activated. The left indicated airspeed and altitude values were lower than the corresponding values on the right side, again leading to IAS DISAGREE and ALT DISAGREE alerts, just as on the Lion Air flights. A MASTER CAUTION alert lit up. The captain, as Pilot Flying, did not use the autopilot but remained in manual flight.

After the flaps retracted, a nine-second automatic nose-down stabiliser trim input occurred. The captain partially countered the input by applying nose-up electric trim. About five seconds later, a second automatic nose-down stabiliser trim input occurred. The captain fully countered this input with nose-up electric trim, but the aircraft was not returned to a fully trimmed state. The flight crew then set the STAB TRIM CUTOUT switches to CUTOUT. However, the aircraft was still trimmed nose-down, which required the flight crew to keep applying nose-up force on the control column in order to maintain level flight. Later, two nose-up electric trim inputs by the pilots were recorded, consistent with the STAB TRIM CUTOUT switches having been set back to NORMAL. Five seconds later, another automatic nose-down stabiliser trim input occurred, the aircraft began pitching nose-down and the flight crew lost control.

The NTSB report starts with a recap of the flights and then introduces the aircraft.

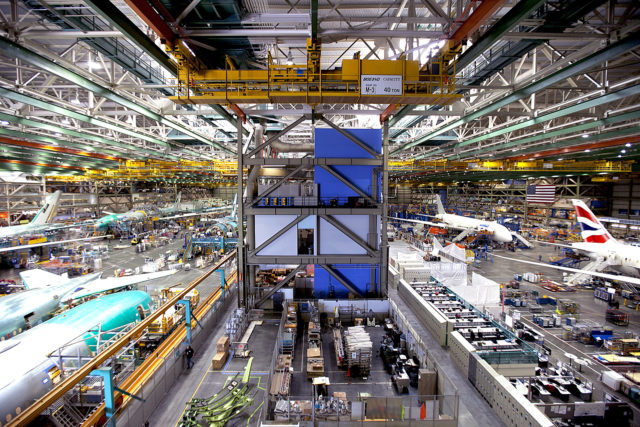

The 737 MAX 8 is a derivative of the 737-800 Next Generation (NG) model and is part of the 737 MAX family (737 MAX 7, 8, and 9). The 737 MAX incorporated the CFM LEAP-1B engine, which has a larger fan diameter and redesigned engine nacelle compared to engines installed on the 737 NG family. During the preliminary design stage of the 737 MAX, Boeing testing and analysis revealed that the addition of the LEAP-1B engine and associated nacelle changes produced an ANU [airplane nose-up] pitching moment when the airplane was operating at high AOA and mid-Mach numbers.

The point here is that the larger engines and redesigned nacelles (the housings around the engines) caused the aircraft, in certain circumstances, to pitch nose-up. Boeing responded by making some design changes to the aircraft, along with adding the now-famous MCAS stability augmentation function. MCAS was added as an extension of Boeing’s existing speed trim system, to improve the aircraft’s handling characteristics and reduce this tendency to pitch nose-up at high angles of attack.

At some point, the MCAS function was expanded to work at all speeds rather than just the original “mid-Mach numbers” where the problem occurred.

Now, one of the circumstances in which MCAS would activate is when all of the following were true:

- the aircraft was in manual flight (autopilot not on)

- flaps fully retracted

- aircraft’s AOA value (as measured by either AOA sensor) exceeded a specific threshold

MCAS would respond by trimming the stabiliser nose-down. Then, once the AOA value fell below the threshold, it would trim the stabiliser nose-up, back to its original position.

If the flight crew wished to stop or reverse the inputs, they could do so using their stabiliser trim switches. However, if the high AOA condition persisted (that is, an AOA sensor continued to exceed, or again exceeded, the threshold), MCAS would command another nose-down stabiliser trim input five seconds later.
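
To make that feedback loop concrete, here is a rough Python sketch of the activation logic as summarised above. It is not Boeing’s implementation: the threshold angle, the amount of trim per input and the names `Aircraft`, `mcas_moves_trim` and `simulate` are hypothetical placeholders. Only the three conditions, the roughly ten-second input, the five-second re-activation and the effect of CUTOUT come from the accounts above. The point it illustrates is that a sensor stuck high never lets the condition clear, so each counter-trim by the crew simply restarts the cycle.

```python
from dataclasses import dataclass

# Hypothetical placeholder values: the real MCAS parameters are not given here.
AOA_THRESHOLD_DEG = 15.0     # hypothetical activation threshold
MCAS_INPUT_S = 10            # one nose-down input, as in the Lion Air accounts
REACTIVATION_DELAY_S = 5     # re-commands after five seconds if high AOA persists
NOSE_DOWN_PER_INPUT = 2.5    # hypothetical trim units per MCAS input


@dataclass
class Aircraft:
    autopilot_engaged: bool
    flaps_retracted: bool
    aoa_left_deg: float
    aoa_right_deg: float
    stab_trim_cutout: bool = False  # CUTOUT stops electric (and so MCAS) trim


def mcas_moves_trim(ac: Aircraft) -> bool:
    """True if MCAS would command, and be able to apply, a nose-down input."""
    # Either sensor alone is enough, so one faulty vane can trigger it.
    high_aoa = max(ac.aoa_left_deg, ac.aoa_right_deg) > AOA_THRESHOLD_DEG
    return (
        not ac.autopilot_engaged
        and ac.flaps_retracted
        and high_aoa
        and not ac.stab_trim_cutout
    )


def simulate(ac: Aircraft, duration_s: int, crew_counter: float) -> tuple[int, float]:
    """Count MCAS inputs and the net stabiliser trim over duration_s seconds.

    crew_counter is the fraction of each nose-down input the crew cancels with
    nose-up electric trim (1.0 = fully countered). Negative trim = nose-down.
    Sensor readings are held constant, as with a stuck AOA vane.
    """
    t, inputs, net_trim = 0, 0, 0.0
    while t + MCAS_INPUT_S <= duration_s:
        if mcas_moves_trim(ac):
            inputs += 1
            net_trim -= NOSE_DOWN_PER_INPUT                 # MCAS trims nose-down
            net_trim += NOSE_DOWN_PER_INPUT * crew_counter  # crew trims back nose-up
            t += MCAS_INPUT_S + REACTIVATION_DELAY_S        # then MCAS waits 5 s
        else:
            t += 1
    return inputs, net_trim


if __name__ == "__main__":
    # Left vane reading about 20 degrees high, as on the Lion Air flights.
    faulty = Aircraft(autopilot_engaged=False, flaps_retracted=True,
                      aoa_left_deg=22.0, aoa_right_deg=2.0)
    print(simulate(faulty, 360, crew_counter=1.0))  # (24, 0.0)
    print(simulate(faulty, 360, crew_counter=0.8))  # (24, -12.0)
```

In this toy model, fully countering every input keeps the trim neutral but never stops the inputs, while partial counters let nose-down trim accumulate. Setting `stab_trim_cutout=True` drops the count to zero, which mirrors why CUTOUT stopped the inputs on the first Lion Air flight and why they came straight back when the switches were returned to NORMAL. What the sketch cannot show is the part the NTSB focuses on: the crew experiencing all of this alongside a stick shaker and two DISAGREE alerts.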

This is, of course, exactly the behaviour seen on both flights, with one key difference: an AOA sensor was faulty, and MCAS activated anyway on the strength of its erroneous data.

The NTSB quotes Title 14 of the Code of Federal Regulations, Part 25, in which the FAA requires that control systems, as well as stability augmentation and automatic and power-operated systems, alert the crew in specific ways. Alerts must:

- Provide the flightcrew with the information needed to:

  - Identify non-normal operation or airplane system conditions, and

  - Determine the appropriate actions, if any

- Be readily and easily detectable and intelligible by the flightcrew under all foreseeable operating conditions, including conditions where multiple alerts are provided.

This means that if conditions are not normal in some way, then it is up to the aircraft manufacturer to ensure that the flight crew have information about the problem and know what to do about it.

This isn’t just about warnings in the cockpit, where we have already learned that too many lights flashing and alarms sounding do more harm than good. Making sure that the crew know the current conditions and how they should react is also about training and standard procedures and checklists.

Now, Boeing did conduct a safety analysis for stabiliser trim control as it pertained to MCAS, which included a summary of hazard assessment findings. As part of that, they identified two hazards associated with uncommanded MCAS, which they classified as major. In this context, “major” means that there is a remote possibility of this hazard occurring and that it could result in reduced control capability or increased crew workload. The two more severe classifications are hazardous and catastrophic.

Uncommanded MCAS was only considered to be “major” because Boeing believed that pilots would easily recognise the uncommanded stabiliser trim inputs and could return to steady level flight using the control column or the stabiliser trim. If they had continuous nose-down stabiliser trim inputs, they would (said Boeing) recognise it as a stabiliser trim runaway and follow the runaway stabiliser procedure.

The procedure in case of a runaway stabiliser is to hold the control column firmly, disengage the autopilot and autothrottles, set STAB TRIM CUTOUT to CUTOUT and trim the aircraft manually.

As a part of Boeing’s safety analysis, they conducted assessments of pilots responding to the hazard in a flight simulator.

From the NTSB report:

To perform these simulator tests, Boeing induced a stabiliser trim input that would simulate the stabiliser moving at a rate and duration consistent with the MCAS function. Using this method to induce the hazard resulted in the following: motion of the stabiliser trim wheel, increased column forces, and indication that the airplane was moving nose down. Boeing indicated to the NTSB that this evaluation was focused on the pilot response to uncommanded MCAS operation, regardless of underlying cause. Thus, the specific failure modes that could lead to uncommanded MCAS activation (such as an erroneous high AOA input to the MCAS) were not simulated as part of these functional hazard assessment validation tests. As a result, additional flight deck effects (such as IAS DISAGREE and ALT DISAGREE alerts and stick shaker activation) resulting from the same underlying failure (for example, erroneous AOA) were not simulated and were not in the stabiliser trim safety assessment report reviewed by the NTSB.

That is to say, Boeing’s simulator tests never recreated the situation in which a single AOA sensor feeding bad data causes MCAS to command repeated nose-down stabiliser inputs. The hazards that they tested didn’t show the same symptoms (IAS and ALT DISAGREE alerts, stick shaker activation).

Instead, the simulator assessments were based on the following assumptions:

- Uncommanded system inputs are readily recognizable and can be counteracted by overriding the failure by movement of the flight controls “in the normal sense” by the flight crew and do not require specific procedures.

- Action to counter the failure shall not require exceptional piloting skill or strength.

- The pilot will take immediate action to reduce or eliminate increased control forces by re-trimming or changing configuration or flight conditions.

- Trained flight crew memory procedures shall be followed to address and eliminate or mitigate the failure.

The NTSB report challenges these assumptions, especially the idea that pilots would easily recognise the symptoms as those of a runaway trim. The symptoms that were tested may well have been quickly recognised by the pilots who were assessed, but those symptoms did not match the ones experienced by the Lion Air and Ethiopian Airlines flight crews. The three flights that have been analysed all had the same root cause: erroneous AOA sensor data on the left side caused MCAS to activate and command nose-down stabiliser trim inputs. All three flights had IAS and ALT DISAGREE alerts. And all three had uncommanded nose-down stabiliser trim inputs.

Multiple alerts and indications can increase pilots’ workload, and the combination of the alerts and indications did not trigger the accident pilots to immediately perform the runaway stabiliser procedure during the initial automatic AND [airplane nose-down] stabiliser trim input. In all three flights, the pilot responses differed and did not match the assumptions of pilot responses to unintended MCAS operation on which Boeing based its hazard classifications within the safety assessment and that the FAA approved and used to ensure the design safely accommodates failures. Although a number of factors, including system design, training, operation, and the pilots’ previous experiences, can affect a human’s ability to recognize and take immediate, appropriate corrective actions for failure conditions, industry experts generally recognize that an aircraft system should be designed such that the consequences of any human error are limited.

Boeing’s assumption that it was easy to tell what had gone wrong and that it was easy to follow the procedure to fix the problem was, at its core, wrong.

The NTSB goes on to say that FAA certification guidance allows manufacturers to assume that pilots will respond to failure conditions appropriately, but only on the basis that the manufacturer has shown that systems, controls, monitoring and warnings are designed to minimize crew errors. Although Boeing considered the possibility of uncommanded MCAS operation, it didn’t evaluate all of the alerts and indications that could accompany it. As a result, its safety analysis and pilot assessment never tested the situation that has now been documented, not once but three times, in which it was clearly not obvious to the flight crew what had gone wrong.

The NTSB is concerned that, if manufacturers assume correct pilot response without comprehensively examining all possible flight deck alerts and indications that may occur for system and component failures that contribute to a given hazard, the hazard classification and resulting system design (including alerts and indications), procedural, and/or training mitigations may not adequately consider and account for the potential for pilots to take actions that are inconsistent with manufacturer assumptions.

There’s a lot of good stuff here, including a parallel to Air France flight 447 in 2009.

The main point is that when there is a failure in a complex system, each interfacing system presents an alert or indication of how the failure affects it. In these three cases, the crews saw multiple alerts on the flight deck but did not have the tools to identify what this particular combination might mean or what they needed to do about it. Even the flight crew that successfully continued their flight and landed did not actually know or understand what the issue was.

After the first crash, the FAA issued an emergency Airworthiness Directive to revise the Boeing 737 MAX airplane flight manual (AFM). The revision expanded the runaway stabiliser procedure to include the effects and indications of erroneous AOA input, in order to help pilots recognise the situation. It also emphasised that under these conditions the flight crew needed to perform the existing AFM runaway stabiliser procedure rather than respond to the other alerts, and specifically that the pilots should set the STAB TRIM CUTOUT switches to CUTOUT and leave them there for the remainder of the flight.

This is what the NTSB argues Boeing did not provide. There should have been an analysis of all the alerts and indications so that pilots’ responses to the situation could be better understood. More importantly, the pilots needed clear guidance to recognise this particular combination of events so that they could respond correctly.

The NTSB is calling for additional studies of system interactions in order to redesign the flight deck interface, specifically so that pilots’ attention can be directed to the highest-priority action. It also somewhat quietly points out that the FAA had come to the same conclusion in 1996.

According to FAA research, “in some airplanes, the complexity and variety of ancillary warnings and alerts associated with major system failures can make it difficult for the flightcrew to discern the primary failure.” The researchers noted that better system failure diagnostic tools are needed to resolve this issue.
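
As a very rough illustration of what such a diagnostic tool might do, the Python sketch below matches the set of active alerts against a small rule table and reports the most specific match, so that one probable primary failure and one first action come to the top instead of several alerts competing for attention. The alert names, the rule table and the `diagnose` function are all hypothetical; this is not how any real flight deck works. The only combination taken from the reports is the one described above (stick shaker, IAS and ALT DISAGREE, uncommanded nose-down trim), and its response is the one the emergency Airworthiness Directive emphasised: run the runaway stabiliser procedure and leave STAB TRIM CUTOUT at CUTOUT.

```python
# Hypothetical alert names and rules, for illustration only.
RULES = [
    # (alerts that must all be active, probable primary failure, first action)
    (
        frozenset({"STICK_SHAKER", "IAS_DISAGREE", "ALT_DISAGREE", "NOSE_DOWN_TRIM"}),
        "Erroneous AOA input driving automatic stabiliser trim",
        "Runaway stabiliser procedure; leave STAB TRIM CUTOUT at CUTOUT",
    ),
    (
        frozenset({"NOSE_DOWN_TRIM"}),
        "Runaway stabiliser",
        "Runaway stabiliser procedure",
    ),
]


def diagnose(active_alerts: set[str]) -> tuple[str, str]:
    """Return (probable primary failure, first action) for the active alerts.

    The most specific rule wins: the one with the largest set of required
    alerts that are all currently active.
    """
    matches = [rule for rule in RULES if rule[0] <= active_alerts]
    if not matches:
        return ("No matching rule", "Work through the individual alerts")
    _, cause, action = max(matches, key=lambda rule: len(rule[0]))
    return cause, action


if __name__ == "__main__":
    ethiopian_like = {"STICK_SHAKER", "IAS_DISAGREE", "ALT_DISAGREE",
                      "NOSE_DOWN_TRIM", "MASTER_CAUTION"}
    print(diagnose(ethiopian_like))
    print(diagnose({"NOSE_DOWN_TRIM"}))  # ('Runaway stabiliser', 'Runaway stabiliser procedure')
```

The lookup itself is trivial. The hard part is building a rule table that covers every combination a single underlying fault can produce, which is exactly the analysis the NTSB says was missing.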

The resulting recommendations are all addressed to the Federal Aviation Administration. The first one is aimed at Boeing specifically.

Require that Boeing (1) ensure that system safety assessments for the 737 MAX in which it assumed immediate and appropriate pilot corrective actions in response to uncommanded flight control inputs, from systems such as the Maneuvering Characteristics Augmentation System, consider the effect of all possible flight deck alerts and indications on pilot recognition and response; and (2) incorporate design enhancements (including flight deck alerts and indications), pilot procedures, and/or training requirements, where needed, to minimize the potential for and safety impact of pilot actions that are inconsistent with manufacturer assumptions. (A-19-10)

However, the questionable assumption that pilots will recognise the core issue and deal with it, when multiple alerts are sounding, is an industry issue, not just a Boeing issue.

Require that for all other US type-certificated transport-category airplanes, manufacturers

1. ensure that system safety assessments for which they assumed immediate and appropriate pilot corrective actions in response to uncommanded flight control inputs consider the effect of all possible flight deck alerts and indications on pilot recognition and response; and

2. incorporate design enhancements (including flight deck alerts and indications), pilot procedures, and/or training requirements, where needed, to minimize the potential for and safety impact of pilot actions that are inconsistent with manufacturer assumptions.

(A-19-11)

The point being made here is that FAA guidance allows these assumptions to be made in certification analyses. Instead, the FAA should be providing clear direction about what kinds of assumptions may be made.

Presuming that the pilots will know what to do even if you never gave them a clear indication is not an option.

Notify other international regulators that certify transport-category airplane type designs (for example, the European Union Aviation Safety Agency, Transport Canada, the National Civil Aviation Agency-Brazil, the Civil Aviation Administration of China, and the Russian Federal Air Transport Agency) of Recommendation A-19-11 and encourage them to evaluate its relevance to their processes and address any changes, if applicable. (A-19-12)

Two further recommendations are that the FAA develop robust tools and methods for validating assumptions about pilots’ recognition of and response to failure conditions, and use those tools and methods to revise FAA regulations and guidelines.

They also recommend the development of new designs for aircraft system diagnostic tools which improve the prioritization and clarity of failure indications, using the input of industry and human factors experts.

And finally, once those tools and standards exist, the NTSB recommends that they be implemented in all transport-category aircraft, supporting the flight crew’s ability to respond quickly and decisively in cases like this, where individual systems each have their own indications.

As I said at the start, the situation is complicated and there are no simple answers. Could the pilots have done better? Yes. Should Boeing have assumed that pilots faced with the stick shaker, the IAS and ALT DISAGREE alerts and the nose pitching down would respond by moving the STAB TRIM CUTOUT switches to CUTOUT? No.

There’s a very old joke that the most common last words recorded on the Cockpit Voice Recorder are the captain complaining, “Now what the hell is it doing?” in reference to the aircraft systems.

Now it’s clear that this is a side effect of automation and our understanding of it, but I honestly feel that no amount of stick and rudder training can fix the problem of too many alarms and alerts in a critical situation. Time and time again, we’ve shown that multiple indications will overload pilots, causing confusion and fixation as the flight crew, knowing they may have only seconds to make the right decision, try to work out which issue to respond to first.

This report by the NTSB finally appears to be attempting to look at the bigger picture, rather than focusing on any individual part of a complex system. If you’d like to read the whole thing, you can download it here: Safety Recommendation Report

All said and done, I think that Sylvia covered all angles admirably.

No, I have not (yet) read the actual reports that she gave us the links to, but even without going through all that, it is evident that she made an excellent summary. And yes, I also have a copy of Stick and Rudder, one of the best books ever written on the subject. But this book deals exclusively with BASIC air(wo)manship and was written long before electronics became an integral part of flying, long before anyone had even heard of a “glass cockpit”.

To train a pilot from scratch is a long and expensive process. When I started my career, getting a job was like winning the jackpot in the Lotto. But recently there has been an increasing demand for pilots. How the demise of Thomas Cook, Air Berlin and other operators will affect the job market is another question (yes, even the grounding of the 737 Max has had a very negative effect on the demand for pilots). But in the recent past, job opportunities have grown exponentially. To fill the seats in the front office, the cockpit, there had to be a limit to the amount of training. The need to ensure that pilots could handle all those beautiful new gizmos made it a given that something had to be sacrificed. And that, in many if not most cases, may well have been attention to the basics of, yes, “Stick and Rudder”. And anyway, who would need all that when the aircraft would be equipped with a multitude of electronics that, as if by magic, would automatically compensate for the reduction in basic flying skills?

To a certain extent, this was indeed the case. But now we come to the, shall we say “interaction” between the demands of getting a new aircraft model on-line, pilots licenced to operate them, and the demands of hard-nosed business.

To me it still seems that Boeing cut a few corners. The “MAX” was not designed from scratch as a new type. Instead, Boeing elected to incorporate modifications so that it could keep the “737” designation. To an extent this makes sense. No doubt it shares major structural components, and crew training could in many cases be reduced to a transition course rather than a new type rating. The same probably applied to qualified maintenance staff. The cost reduction must have been tremendous. But while keeping the “737” type concept, it still required improvements and developments, some of them significant, like more powerful engines with a much larger diameter, which had to be positioned further forward. This threw the balance, and with it the behaviour of the resulting variant, out of kilter. MCAS was the solution: yet another gizmo that would solve it. Only, it didn’t. Add the complacency of the authorities and their cozy arrangement with Boeing, and the crashes were the inevitable result.

I was intrigued that the NTSB report doesn’t go into the type certification and training issues at all, but in the end, I think that they are only tangentially related to the cause. I suspect it was politically wise for the NTSB to stick to the clear aviation factors and not try to tie the crashes to more esoteric economic and political considerations.

Boeing knowingly ignored a potentially catastrophic situation and didn’t ground the planes because the shareholders wouldn’t have liked that. I hope the victims’ families launch a class action lawsuit and hit Boeing hard where it hurts the most, in the wallet. Of course, they are ‘too big to fail’, so there will be no real threat to their ability to do business; a few middle managers may do time, and then we get to see if anything actually changes.

A lot of what happened between the FAA and Boeing is really outside the NTSB’s remit, so it doesn’t really come through in this report.

To say that I’ve followed this situation with interest is a major understatement. The Max 8 is at the center of a critical issue, with regard to air safety and with regard to humankind’s relationship to automation.

The NY Times article, referenced above, makes the case that these crashes were due to pilots who were lacking in basic airmanship; from there, the argument goes in favor of more automation instead of demanding greater stick and rudder skills from pilots.

Complexity is the issue, at least IMHO. These pilots had a simple problem, basically a runaway trim which could be instantly remedied by throwing two switches, but they responded inappropriately to this problem as events compounded into an unrecoverable situation. When I mentally place myself in their situation, it’s easy to understand why this would happen. An unexplained flight control function was causing the problem, but they would have had no idea how, or why, this was happening. In the meantime, a stick shaker and other warnings were diverting their attention and compounding a relatively minor problem into an overwhelming situation. From the comfort of an armchair, it’s easy to criticize their actions, but my armchair isn’t bombarding me with warnings; the airplane was monopolizing their attention.

So, is the answer even more automation? I would suggest that automation is causing many of these problems. It’s one thing for an automated system to assist, but quite another when an automated system fights the pilot for control of the airplane. We’ve seen too many examples already: Toulouse; QF72, where a Qantas Airbus made an uncommanded control change which severely injured a flight attendant, injured a number of passengers and gave the captain PTSD; and now these 737 Max 8 tragedies. It’s not simply a Boeing problem or an Airbus problem, it’s a problem with the entire design philosophy of the industry.

My own flying has been modest; puddlejumpers and my most adventurous foray was a Citabria 150 HP. Nonetheless, I always believed that I was responsible for my aircraft and my instructor, one Ken Larson, made it very clear to me that autopilots, etc. were not to be engaged until I was at a safe altitude with plenty of time to recover if the autopilot decided to do something untoward. I have carried that with me ever since and, while I have never claimed to be flawless, I have always taken responsibility for the operation of any aircraft I was flying as PIC.

Beyond flying, I am also a licensed mechanic and have had the opportunity to work on aircraft and to actually see some of the systems I had relied upon. It’s a real advantage for a pilot to be able to visualize how a system actually operates.

OTOH, when systems are controlled by a flight control computer, comprehension of the interaction of these systems has to be programmed into the software. QF72 was a perfect example of that apparently breaking down, as two separate (automated) control corrections were summed, causing an abrupt control change.

Computers are fine; I administrate computer networks daily. But when it comes to controlling an aircraft, I’ll be my own Central Processing Unit, thank you very much.

I think the point of the NTSB report is that more or less automation isn’t the issue, it’s the interface which now needs an overhaul. As you say, the airplane was monopolising their attention on the basis that all information is equal. But we know that’s not true and simply throwing alerts at the pilot in hopes that they can quickly solve the puzzle is neither efficient nor intelligent design.

That said, the instances in which an automated system has “fought” the pilot for control (that’s kind of a loaded term, but I do see what you mean) are very rare. GA pilots like us rely on our own flying skills and maybe an autopilot, but we are also far, far more likely to crash. Taking responsibility for the autopilot and taking responsibility for the flight deck are the same thing; it’s just a question of level of experience and difficulty. The days when you could fly a passenger airliner carrying hundreds of people safely to a destination using only your own brain were also much more dangerous days to fly in.

I’m not saying I know what the answer is, just that I strongly feel that dismissing an avionics corporation as incompetent, or wishing airliners went back to cables and pulleys without automation, doesn’t seem likely to improve aviation safety; quite the opposite.

What I find troubling, whether in my car stereo or an airplane, is controls that don’t work as expected. For essential operations, a control should have one purpose, no matter what. For example, the on/off button on my car stereo switches input sources (radio, USB, auxiliary (sat radio)) if you press it briefly, but shuts off the radio if held down for several seconds. While this is hardly critical, in my mind it stands as poor design. If the phone rings, I want that radio off instantly.

Now, apply that to a transport aircraft. If the trim system changes its mode of operation with no conscious command from the flight crew, I would see that as poor design too, but the stakes are infinitely higher with the aircraft. Responding to runaway trim is nothing new. It’s taught to pilots of any aircraft with electric trim: usually you just pop a breaker or throw a switch. Narrow-body Boeings in the cable and pulley days had a trim brake. If the trim ran away in one direction, a sharp input on the yoke in the opposite direction would stop the trim long enough to deactivate the system. I used to work on these devices and know them to be simple and intuitive.

However, a flight control computer commanding trim changes is more than a runaway trim situation and I can understand how a pilot could become confused. I would consider this an automated system fighting the pilot for control. Because of a faulty AOA sensor, the FCC “assumed” that there was a problem and contradicted the pilot’s input. Assumed is, of course, the wrong word. Computers are not conscious and make decisions based upon whatever data they receive. These two crashes are a perfect example of how quickly things can go wrong, in this case with the failure of one AOA sensor.

I agree that automation has increased safety and we are, indeed, safer than we were a generation before. However, when a Flight Control Computer has the ability to override the PIC, a huge moral dilemma arises.

FAR Sec. 91.3 — Responsibility and authority of the pilot in command.

(a) The pilot in command of an aircraft is directly responsible for, and is the final authority as to, the operation of that aircraft.

(b) In an in-flight emergency requiring immediate action, the pilot in command may deviate from any rule of this part to the extent required to meet that emergency.

(c) Each pilot in command who deviates from a rule under paragraph (b) of this section shall, upon the request of the Administrator, send a written report of that deviation to the Administrator.

That’s as close to a stone tablet as it gets, in aviation. I may not have served as PIC in an Air Transport aircraft, but I take that very seriously. The autopilot maker isn’t responsible for the operation of my aircraft; I am. Our human senses are far more sophisticated than even the most powerful supercomputers. Our brains make these supercomputers look like toys. Yes, we can be overloaded and fatigued, and we can even make mistakes, but the same is true for the people making the programming decisions that go into these flight control computers. No computer, no matter how sophisticated, can even begin to approach consciousness or actual situational awareness. It’s very obvious that as the MCAS system forced these aircraft into a nose-down trim condition, it was unable to reason that the ground was getting closer.

I agree with much of what Langewiesche wrote. A greater level of basic airmanship may well have saved the day. I would like to think that I would have had the presence of mind to shut off the trim system and regain control of the aircraft, but that’s easy to say from a La-Z-Boy, without alarms and stick shakers demanding my attention. Nonetheless, I survived the handful of in-flight emergencies I have dealt with by relying upon the basics and, in no small part, on what I learned from Langewiesche’s father. While I agree with much of the NY Times article, he loses me at the end: “Boeing is aware of the decline, but until now — even after these two accidents — it has been reluctant to break with its traditional pilot-centric views. That needs to change, and someday it probably will; in the end Boeing will have no choice but to swallow its pride and follow the Airbus lead.” WTH?!?!?! He makes the case for greater airmanship, but then argues that the real answer is more automation? This makes no sense to me whatsoever.

The problem, as I see it, is that these systems, as good as they are, can overload the senses of any human, no matter how skilled. In the book No Man’s Land, about the QF72 incident, a lot of time is spent going through the checklists the FCC popped up. The aircraft had experienced two uncommanded pitch-down maneuvers and there were seriously injured people on board. That plane needed to get on the ground posthaste and, as long as it was in a condition to land safely, that should have been priority #1. Had another uncommanded pitch-down event happened, the tale could have had an even less happy ending. (As it was, lives were changed forever.)

To me, it still comes down to responsibility and the requirements of FAR 91.3. The pilot is the first at the scene of every crash, and that is as it should be. If the programming team inhibits the pilot from fulfilling their responsibilities as PIC, the programmers are in no direct peril. I don’t have a complete answer, but I don’t believe that a civilian aircraft should be so complex to fly that a human can no longer operate it unassisted. (Military aircraft assume a different level of risk.)

I refer to TWA 841, a 727 which went into an uncommanded roll to the right and entered a steep dive during which it likely broke the sound barrier. To regain control, the pilot extended the gear, something a flight control computer would never allow. While there was baseless speculation that disabled slats and 2 degrees of flaps, set for some speed advantage, caused the incident, the most likely explanation was a minor failure of the right outboard aileron, which caused the autopilot to roll left, followed by an inadvertent hard-over of the lower rudder, probably caused by a yaw damper malfunction. Had the pilot not been an excellent airman (he flew aerobatics on the side) and had he not been able to override standard procedure by dropping the gear, there would be a monument in some lonely field in Michigan and 89 people would have died needlessly. As it was, there were no serious injuries.

Automation is fine, but the humans have to remain in charge and remain the responsible parties.

Bring back the flight engineer, as a professional trained to diagnose and troubleshoot complex computer systems in real time and under pressure!

I just want to say that next week’s post was already written and got bumped when I saw the NTSB report, so it isn’t intentionally a response to this comment! But a flight engineer does play a part.

IMHO, an excellent idea. We need more brainpower in the cockpit.

In the lead-up to the Lion Air accident, you say the crew on the previous flight reported the IAS disagree alerts. My understanding is that that crew left the aircraft with MCAS disengaged, and there was nothing to alert the next crew to the possible consequences of re-engaging it. The first crew were required to leave a comprehensive report on every aspect of what had been an emergency situation until they resolved it. I don’t believe this was done, and this could be seen as grossly, even criminally, negligent. I don’t believe the incoming crew had a clue what to expect. Thanks, Sylvia, for your comprehensive discussion of these tragic events. I was impressed by the Langewiesche report and its conclusions. Airmanship first.

That’s something I would have expected the NTSB analysis to go into in more depth, but as they are not the investigating body in either case, they are limited to the information made available to them through the preliminary reports. But it certainly seems that the first crew did report the issue in some form and no one really understood what it meant.

We would all love to see what that first crew reported, by what means and to whom, and what was done about it if there was such a report. I guess we never will, though. Sadly, vested interests have made it all a bit murky.

Yesterday I eventually managed to watch the entire session from Tuesday’s Capitol Hill event and was quite impressed by how familiar various Senators were with the details. Most seem to have established where the problems lie and want to move on to legislation that addresses FAA regulation and oversight. I was particularly impressed by Senators Rosen, Tester, Cruz and Cantwell (who especially focused on the human-machine interface problems of now and the future).

One other important aspect that pleased me was hearing one Senator (I forget which) state that Boeing is far too important a company, both for jobs and for the US economy, for its management to falter (for whatever reason) and damage these things. I was getting fed up with the boot being on the wrong foot, i.e., Boeing telling the government how important they are as a company. I like that change in emphasis. We’ve seen too many very large companies here in the UK fail (Carillion, Thomas Cook, etc.) because of a lack of oversight, particularly by financial services regulators, auditors and the like (what WERE they doing?). I hope the US manages to rein in some of the more avaricious businessmen and companies before the same fate befalls a company as [formerly] illustrious as Boeing.