Lion Air flight 610: The Final Minutes

Last week, we started the sequence of events that happened inside the cockpit of Lion Air flight 610. The primary resource for this sequence of events is the final report released by the Komite Nasional Keselamatan Transportasi (KNKT). We left off with the cockpit still blaring with alarms and alerts.

The Maneuvering Characteristics Augmentation System (MCAS) had been triggered and was pushing the nose down while the captain, as the pilot flying, was interrupting the inputs and then correcting for them. He was also getting both overspeed and underspeed alerts, both of which needed immediate but opposite actions.

For the MCAS to activate, three things must be true:

- the aircraft’s AOA value (as measured by the AOA sensor) must exceed a specific threshold,

- the aircraft must be in manual flight (autopilot not engaged),

- the flaps must be fully retracted.
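The three conditions above amount to a simple logical AND. Here is a minimal sketch in Python of how that reads. The threshold value, the class and the function name are all invented for illustration; none of them come from the KNKT report or from Boeing documentation.

```python
from dataclasses import dataclass

# Hypothetical threshold, for illustration only; the real value is not given here.
AOA_THRESHOLD_DEG = 10.0


@dataclass
class AircraftState:
    aoa_deg: float            # angle of attack as reported by the AOA sensor
    autopilot_engaged: bool   # False means the aircraft is in manual flight
    flaps_retracted: bool     # True only when the flaps are fully up


def mcas_conditions_met(state: AircraftState,
                        threshold_deg: float = AOA_THRESHOLD_DEG) -> bool:
    """All three conditions must hold at once for activation."""
    return (
        state.aoa_deg > threshold_deg
        and not state.autopilot_engaged
        and state.flaps_retracted
    )
```

Note that the check only sees the value the sensor reports. A misaligned sensor reading high satisfies the first condition regardless of the true angle of attack, and extending the flaps makes the third condition false, which is consistent with what the captain had noticed.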

The captain did not know about MCAS or that a misaligned AOA sensor had been installed onto the aircraft. He had asked the first officer to perform the Airspeed Unreliable checklist and to ask for clearance to a holding point in order to buy time to troubleshoot. He had also spotted one important point: when the flaps were extended, the constant nose-down adjustments stopped.

As a result, he had extended the flaps fully as he tried to work out which, if any, of the instruments could be trusted. He did not explain any of this to the first officer, who appeared to be struggling with all but the simplest tasks.

The aircraft was climbing at a rate of 1,500 feet per minute with a pitch attitude of 3° nose up as the flaps position reached 5. On the captain’s display, the low-speed barber pole and the overspeed barber pole merged into one. The control column stick shaker activated again, warning of an impending stall, and continued to vibrate.

At the same time, the overspeed barber pole appeared on the first officer’s flight display, with the bottom of the pole at about 340 knots. The low-speed barber pole did not appear. Neither pilot spoke to the other about what they were seeing on their respective flight displays.

The captain shouted “memory item, memory item” but didn’t say which list, or why.

The automatic trim activated nose down again for one second and then again. The flaps were fully extended, so this was not the MCAS but likely a manual input, perhaps to reduce the climb in case the aircraft really was entering a stall.

The first officer responded with the memory items he had completed. “Feel differential already done, auto brake, engine start switches off, what’s the memory item here?”

Rather unhelpfully, the captain snapped a single word response. “Check!”

The automatic trim activated nose down for two bouts of two seconds, making for four slight nose-down movements in seven seconds. Then it activated nose down again twice more as the aircraft stopped the left turn, rolling level on a heading of 100°.

Three minutes had elapsed since the aircraft’s departure. Throughout the flight, the aircraft had been reacting in ways mostly incomprehensible to both flight crew. The core issue was the faulty sensor but the AOA DISAGREE warning had not illuminated: unknown to the crew, this alert was not functional on the aircraft. They were left to diagnose a dizzying array of symptoms on their own to a backdrop of alarms and alerts. At the time, no one had heard of MCAS or knew that the 737 MAX would automatically trim nose-down at higher angles of attack in ways that had not been seen in earlier models. The captain of the previous flight had worked out that the STAB TRIM CUT-OUT prevented the MCAS from trimming nose-down in response to the faulty AOA sensor. This captain, 31 years old with 6,028 hours (mostly on the Boeing 737), had noticed that retracting the flaps had added to the problem but overall, he struggled to make sense of the situation. He called out for support, but inconsistently and often incoherently: he didn’t seem to be able to work with his less experienced first officer for effective trouble-shooting. The repeated sounding of alarms and constant clacking of the stick shaker only added to the stress in the cockpit.

The first officer asked “Flight control?”

The captain’s voice, “yeah,” was followed by the sound of paper pages being turned as the captain trimmed the aircraft nose up for one second.

The first officer called out “Flight control low pressure” just before another warning sounded in the cockpit: an altitude alert tone. They had set the desired altitude to 5,000 feet but the actual altitude had deviated from this. The captain’s altitude showed 4,110 feet and the first officer’s showed at 4,360 feet.

The automatic trim activated nose down for one second. The aircraft began to turn to the left. The captain trimmed nose-up for one second. The first officer called out “feel differential pressure”. The captain responded that he should perform the checklist for Airspeed Unreliable, which the first officer acknowledged. The aircraft continued to climb at a rate of 1,600 feet/minute, with the captain’s altimeter showing 4,900 feet and the first officer’s altimeter showing 5,200 feet.

The first officer informed the captain that he was unable to find the Airspeed Unreliable checklist in the manual.

Another altitude alert tone sounded: they were continuing to climb at a rate of 460 feet per minute and the captain’s display showed 5,310 feet and the first officer’s 5,570 feet. The sound of pages turning filled the cockpit again.

Meanwhile, the air traffic controller added a tag to the aircraft’s radar display to say FLIGHT CONT TROB so that anyone looking at the radar target would be aware that the aircraft had reported flight control trouble. This shows how quickly everything was happening; writing this from the comfort of my own desk, it seems like a very long time since the first officer reported their issue to the controller, but in reality only one minute had passed.

The flight crew seemed close to being able to gain control of the situation. Then the flaps started retracting again from position 5 to position 1.

There was no discussion related to the flap position. There is no way of knowing who retracted the flaps. However, as the captain had extended the flaps without a word of explanation, it seems likely that the first officer took it upon himself to retract them.

The first officer repeated the controller’s instruction to fly heading 350° for the captain’s benefit and complained that there was no Airspeed Unreliable checklist.

The flaps began to travel from position 1 to fully retracted, again with no comment or discussion.

The conditions for the MCAS to automatically adjust the pitch nose down in response to the faulty AOA data were about to be fulfilled again.

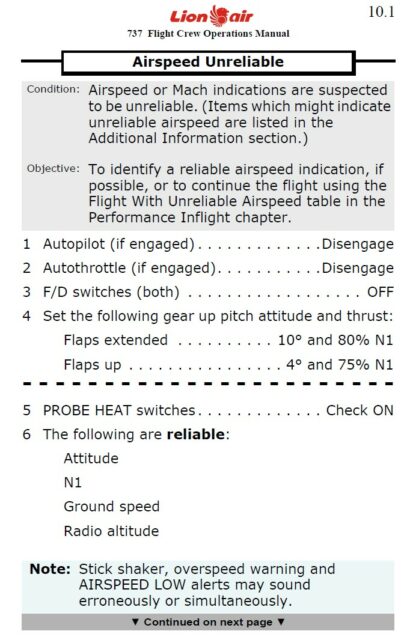

While the flaps were still retracting, the first officer said “ten point one.” In the Quick Reference Handbook, 10.1 is the page that the Airspeed Unreliable checklist is on. He’d found it.

The first item on the checklist is to disengage the autopilot, if engaged. It was not engaged but this would have emphasised that the crew should not use the autopilot in an attempt to deal with the issues they were facing.

As he began reading out the Airspeed Unreliable checklist, the MCAS activated the automatic trim nose-down for two seconds. The captain interrupted it by commanding nose up trim for 6 seconds. The pitch trim recorded 6.19 units.

Because the configuration of the aircraft will define what “in trim” means, those units can’t be used to understand if the aircraft is pitching up or down but it does allow us to have a relative understanding of how the trim changed during the flight. The units of pitch trim are constantly recorded by the FDR: I won’t list every pitch trim change, but it is useful to look at the units at key points, so we can get a feeling for how pitch trim, and thus the pitch, were changing during the flight.

A few seconds later, the aircraft trimmed nose-down again for seven seconds. The captain interrupted it again, commanding nose-up trim for six seconds. MCAS activated the trim to pitch nose down again for four seconds and the captain commanded nose up for four seconds. The aircraft trimmed nose down for three seconds and the pilot commanded nose-up trim for three seconds. The pitch trim was now 5.0 units.

The aircraft was still turning left and they blew straight past the 350° heading given by the controller. As they reached a heading of 015°, the controller called to tell them they needed to turn right for a heading of 050° and maintain 5,000 feet. The first officer, who was still reading aloud from the Airspeed Unreliable checklist, stopped to repeat the controller’s instructions.

MCAS activated the automatic trim nose-down for three seconds and the captain commanded nose-up for six seconds. The automatic trim activated nose-down for five seconds, interrupted again by the captain trimming nose-up for six seconds. At the same time, the controller instructed the flight crew, who were on a heading of 023°, to turn right, heading 070°, to avoid traffic.

The flight crew had not declared an emergency, so the controller had no way of knowing that in the chaos of the cockpit, the constant heading changes were adding to an already impossible workload.

The first officer, who up until now had been handling radio communication in his role as Pilot Monitoring, did not respond to the controller’s heading change nor did he acknowledge the traffic. He was focused on the Airspeed Unreliable checklist, reading aloud for the benefit of the captain that the flight path vector and the pitch limit indicator might be unreliable. The controller called twice more, finally receiving an acknowledgement from the first officer.

Someone commanded nose-up trim for seven seconds, almost certainly the captain.

MCAS activated again, trimming nose-down for about five seconds until it was interrupted by a nose-up trim for five seconds. The first officer continued to read aloud from the Airspeed Unreliable information until he was interrupted by the controller telling them to turn right to heading 090°, which the first officer acknowledged. At that point, the aircraft was on a heading of 038°.

The controller almost immediately called back to ask them to stop the turn and fly heading 070°, which the first officer acknowledged.

The captain commanded nose-up trim for five seconds. The MCAS activated again with five seconds of nose-down trim, which was interrupted by the captain again trimming nose up for six seconds. The MCAS trimmed nose down for four seconds and the captain trimmed nose up for four seconds. Nose down for four seconds, nose up for three. At this stage, the trimming nose-up and nose-down has become constant; the captain was entirely focused on fighting the MCAS attempts to bring the nose down.

Finally, the first officer finished reading the Airspeed Unreliable checklist and told the captain that he was going to move on to Performance Inflight. The MCAS trimmed nose-down for seven seconds, which the captain interrupted with seven seconds of nose-up trim. Throughout this, the captain was just about managing to keep the aircraft from descending; the aircraft’s pitch trim varied between 5.30 and 5.83 units.

The first officer called for the senior cabin crew member to come to the cockpit for an update. The controller called with traffic information. The captain trimmed the nose up for one second.

The cabin crew member came into the cockpit where the captain told her to please get the engineer from the cabin. The first officer repeated the instruction and the cabin crew member left.

MCAS trimmed nose-down for one second, the captain trimmed nose-up for three seconds. As soon as he stopped, the MCAS trimmed down for another four seconds and the captain trimmed nose-up for six. Trimming nose-up is the correct way to interrupt the MCAS automatic trimming, but as the AOA sensor was still feeding bad data in to the system, the MCAS was reacting anew to the situation every time the captain stopped adjusting the trim.

The cockpit door opened, presumably the engineer entering the cockpit. The captain said simply, “Look what happened.”

At the same time, the controller instructed the flight crew to turn left heading 050° and maintain 5,000 feet. The first officer acknowledged the call, although the aircraft had never made the turn to 070° and they were already at a heading of 045° when the controller made the request for 050°. The aircraft needed to turn right by a further 5° rather than left. The first officer was no longer monitoring and the constant battle for control of the trim distracted the captain from following the controller’s headings.

MCAS activated nose-down trim for four seconds, interrupted by the captain commanding nose-up for six seconds. The cabin crew member used the interphone to share with the others that there was a technical issue in the cockpit.

MCAS activated nose down for six seconds, interrupted by nose-up trim for five seconds. MCAS trimmed nose down for seven seconds, the captain trimmed nose up for three. The first officer, briefly recollecting that he was the Pilot Monitoring on this flight, called out that the landing gear was up and that they were at an altitude of 5,000 feet.

He had barely stopped speaking when the altitude alert sounded again. The captain’s altimeter showed 4,770 feet while the first officer’s showed 5,220 feet.

The captain trimmed up for six seconds, bringing the pitch trim up to 5.4 units.

As the aircraft’s altitude dropped on the radar screen, the controller asked if they were descending. The first officer repeated that they had a flight control problem and that they were flying the aircraft manually.

The MCAS pitched nose down for four seconds, interrupted by the captain trimming nose-up for seven.

The controller asked the flight crew to maintain heading 050° and to change frequency to arrivals, which the first officer acknowledged. The aircraft was at that stage on heading 059°.

At this point, the flight had been airborne for 9 minutes. It’s probably taken you longer than that to read the sequence of events.

The MCAS pitched nose-down for six seconds countered by the captain commanding nose up for three. Nose-down for six, nose-up for three. The first officer contacted arrivals and advised them that they were experiencing a flight control problem. The pitch trim was 4.5 units.

The arrivals controller told the flight crew to prepare for landing on runway 25 and to fly heading 070°. The first officer read back the instruction. Their current heading was 054°.

The MCAS trimmed nose down for two seconds and the captain trimmed nose up for nine. Nose-down for five, nose-up for three.

This was when the captain asked the first officer, again, to take over as Pilot Flying.

It is somewhat of a controversial decision for which there is no right answer. On the one hand, the captain is the most experienced pilot and thus should maintain the responsibility for the flight for the landing, rather than the potential confusion of changing pilots in the middle of an emergency. On the other hand, relinquishing control would allow the captain a better chance of trouble-shooting the situation, which the first officer was clearly not managing to do. By now, the captain must have felt that it was clear that they could maintain the flight by constantly interrupting and correcting the nose-down trimming.

However, he said none of this. The first officer commanded aircraft nose-up trim for three seconds and called out, “I have control.”

The captain took over communications, contacting arrivals to request that they continue via a specific waypoint, instead of direct, in order to avoid weather. The controller immediately approved this.

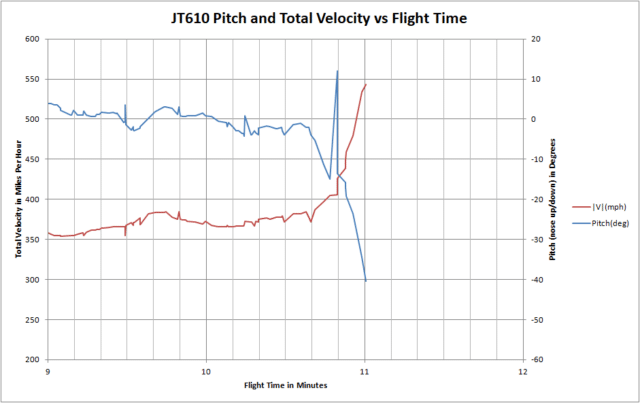

MCAS activated the nose-down trim for eight seconds. The pitch trim, which had varied between 5.8 and 4.5 under the captain’s control, was now at 3.4 units.

The first officer had no idea what was going on or even how to describe it. “Wah! It’s very—” He commanded nose-up trim for one second, bringing the pitch trim to 3.5 units.

The captain was still speaking to the controller, explaining that he could not determine their altitude as all of the aircraft instruments were indicating different altitudes. The captain was also becoming overloaded: he identified their flight as Lion Air six five zero, instead of 610.

“Lion Air 610, no restriction,” said the controller, by which he probably meant that he was not going to ask them to maintain a specific height.

MCAS activated the nose-down trim for about three seconds until it was interrupted by the first officer, who commanded nose-up trim for one second. The first officer’s column sensor recorded 65 pounds of back pressure, so he was pulling the nose up hard. Unlike the captain, he was not correcting the aircraft pitch using the trim; after interrupting the MCAS, he was trying to keep the now-out-of-trim aircraft level through sheer brute force. The pitch trim dropped to 2.9 units.

The first officer commanded nose-up trim for another four seconds, bringing the pitch trim up to 3.4 units. He had trimmed the aircraft but not enough, which meant he still needed to forcibly pull back on the column to keep the nose up.

The captain asked the arrivals controller to block 3,000 feet above and below them for traffic avoidance, effectively ensuring that they had a buffer, as they were struggling to control the aircraft and he did not know their true altitude.

Unfortunately, this wasn’t clear to the controller, who responded by asking the captain for their intended altitude.

MCAS activated the nose-down trim for eight seconds. The pitch trim dropped to 1.3 units and the 737 pitched down to -2°. They were descending at 1,920 feet per minute as the first officer’s column registered 82 pounds of back pressure. He pulled back even harder, fighting against the trim, and called out to the captain that the aircraft was flying down.

Fixation is a psychological condition where the pilot is unable to process new information. The pilot’s attention is “fixed” on a single source of data, usually in response to overload, and no longer perceives important data from other sources. Studies have shown that aural information is most commonly lost first. We can see this effect in the captain, who did not react at all to the first officer or make any move to arrest the aircraft’s descent. He responded instead to the arrivals controller’s question, repeating his previous request. “Five thousand feet.”

As the controller approved the request, the first officer shouted loudly that the aircraft was flying down. The captain finally responded. He said, “It’s OK.”

It was most assuredly not OK.

The first officer commanded nose-up trim for two seconds, setting the pitch trim to 1.3 units. The MCAS activated for four, dropping the pitch trim to 0.3 units. The first officer’s control column recorded 93 pounds of back pressure.

Although the first officer seemed to almost grasp the issue that the 737 MAX was trimmed for descent, he did not command any further nose-up trim. By now, the captain’s side altitude showed 3,200 feet and the first officer’s side showed 3,600 feet. They were no longer “flying down”, they were diving at a rate of over 10,000 feet per minute.

The Enhanced Ground Proximity Warning System sounded urgently. TERRAIN – TERRAIN! SINK RATE! The overspeed clacker sounded; a manual system which could not be fooled by the faulty AOA sensor.

The MCAS commanded one final nose-down trim but this one was interrupted by the Boeing 737 crashing into the water.

The arrivals controller called out to the aircraft and received no response. The controller called out again. The flight had disappeared from the radar screen. The arrivals controller and the Terminal East controller both tried four more times between them to contact the aircraft.

Then, unable to deny that Lion Air 610 must have crashed, the controller asked any aircraft available to hold over the last known position for a visual search.

About half an hour later, a tug-boat crew found floating debris about 33 nautical miles northwest of Jakarta.

Despite having gone over this repeatedly in the context of the datalogs and the software faults, adding the cockpit activity makes it much more harrowing to read about. You get a real sense of the chaos, and like a slow motion car crash, you know where things are going to end up. My thoughts remain with the pilots, crew and passengers…

Well said.

Sylvia, you wrote that “[t]he core issue was the faulty sensor but the AOA DISAGREE warning had not illuminated: unknown to the crew, this alert was not functional on the aircraft.” Do you think, if the AOA DISAGREE warning had been functional in the aircraft, that it would have given the flight crew the informational nudge needed to break them out of the task fixation and overload they were stuck in? Or would it have been one more warning in a sea of them? It seems to me that, whatever else was happening, the captain and first officer were not doing an effective job communicating what they were thinking. With better CRM, with better communication, it’s not hard to imagine that they might have combined their two incomplete perspectives and figured out what could save their aircraft. From the starting point they were in – incomplete information that wasn’t well communicated between them, an impossible task overload, task fixation, and an aircraft system about which they had no knowledge – it’s hard for me to identify how the sad tale of Lion Air 610 could have ended differently.

I’m not a pilot, but have studied this and other disasters in depth as I design safety critical systems.

To my mind, this crash represents an emerging new class of problems.

Historically you had “random” things such as mechanical/electrical failure, misleading instruments, miscommunication problems, pilot fatigue, etc. These are all largely amenable to “Swiss cheese” modelling, where a number of factors need to align (like holes in a stack of cheese slices). Adding more slices or reducing the number of holes can make a big difference.

You then have critical issues such as design failures, unknown processes such as metal fatigue, unknown loading of dangerous cargo, bombs, etc which can lead to catastrophic failure on their own. These are often simply unsurvivable, and you just have to try and eliminate them.

In this case, however, there was a deliberate decision to introduce an “active agent” into the system. It was intended to increase safety by preventing stall, but it was fed unreliable data, was given authority to run the trim right to the hard stops, and had limits on a single operation which were cleared after each pilot counteraction.

Critically, the pilots weren’t told it was there…

The result was that they were up against a system that was actively countering their recovery action, but were treating it as a passive fault which needed to be worked around. Without that understanding the decks were massively stacked against them.

The drivers for the introduction are relatively standard commercial and management pressures, but agency through a “ghost in the machine” is relatively new.

Thanks for your comment! I’m fascinated by aviation safety partly because, as a software/data engineer, I’ve both personal and professional interest in how technological systems fail. The thing about this accident which bewilders me from the standpoint of Boeing’s decision-making is not even that Boeing introduced that “ghost in the machine” – it’s that they were apparently blind to the fact that it had a single-point failure mode. I think it’s not intrinsically a bad thing to add an active safety system to an aircraft, even though not telling the pilots about it was a dreadful idea in hindsight. But – and it’s a big “but” – if you’re going to add an active safety system that takes flight control actions based on sensor inputs, that system ABSOLUTELY needs the ability to detect when it’s getting anomalous data inputs. And when it does, it ABSOLUTELY needs to let the humans know “I’m getting anomalous inputs, I don’t know how to interpret them, so the ball is in your court now.”

The MCAS system absolutely needed a way to recognize that it was getting anomalous AOA data from its sensors, and it should not have attempted to take control of the stab trim once it so recognized. Had it been able to light up an “MCAS AOA DISAGREE” fault or similar on the EICAS display, Lion Air 610 in all likelihood wouldn’t have crashed. But that would’ve required Boeing to disclose the existence of MCAS, and therein of course is the rub.
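As a rough illustration of the idea in this comment, here is a minimal Python sketch of an automation step that cross-checks its inputs before acting. It assumes two AOA readings are available to compare. The tolerance, the threshold, the function names and the message wording are all hypothetical and are not taken from any Boeing design.

```python
from typing import Optional, Tuple

MAX_DISAGREE_DEG = 5.0    # hypothetical tolerance between the two sensors
AOA_THRESHOLD_DEG = 10.0  # hypothetical activation threshold


def usable_aoa(left_deg: float, right_deg: float,
               max_disagree_deg: float = MAX_DISAGREE_DEG) -> Optional[float]:
    """Return an AOA value the automation may act on, or None if the sensors disagree."""
    if abs(left_deg - right_deg) > max_disagree_deg:
        return None
    return (left_deg + right_deg) / 2.0


def automation_step(left_deg: float, right_deg: float,
                    threshold_deg: float = AOA_THRESHOLD_DEG) -> Tuple[str, str]:
    """Return (action, crew_message) for one control cycle."""
    aoa = usable_aoa(left_deg, right_deg)
    if aoa is None:
        # Anomalous input: take no trim action and hand the problem to the humans.
        return ("INHIBIT", "AOA DISAGREE: automatic trim inhibited")
    if aoa > threshold_deg:
        return ("TRIM_NOSE_DOWN", "High AOA: automatic nose-down trim active")
    return ("NO_ACTION", "")
```

With one sensor reading some 20° higher than the other, `automation_step(21.0, 1.0)` returns the inhibit action and a message for the crew, rather than trimming on the bad value.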

If you ask me, there are some senior decision makers at Boeing that should have gone to jail over this. I know it cost the manufacturer a lot of money in penalties, damages, lost sales, and so forth. But it’s not nearly enough, in my view.

It has all the feel of a system added very late in the day after the nose up problems were discovered in flight testing. Rather than a proper fix, with redundant sensors, voting, alarms etc (which would probably have required simulator time and recertification, not least to learn how to disable the system), they just appear to have tacked on a minimal “fix”. Like so many quick patches, this in turn introduced a raft of follow-on problems, this time fatal…

ColinTD is correct that MCAS looks hasty and ill-considered, to be charitable. The problem with Tammy’s suggestion of criminal prosecution is contained in the romance-language version of “Inc.”; it translates as “Society of the Nameless”. A large corporation has plenty of room for bad ideas to build up steam without any one person taking responsibility when things go south, and even for euphemisms and lack of attention to warnings from knowledgeable people to paper over an issue. (There’s an old I-wish-it-were-a-joke about “This is a crock of s**t!” being transformed through several levels into ~“This strong product will buttress the company’s future.”) ISTM that this is the sort of mess that whistleblower-protection laws were made to deal with, but that still requires that somebody blow the whistle AND that somebody with independent power hear it.

This is indeed a harrowing account.

A few things stand out:

Apart from the technical failures, there seemed to have been a severe lack of cockpit crew coordination. This was exacerbated by an obvious lack of experience of the F/O. The captain became so engrossed in fighting the aircraft that he failed to properly manage the cockpit. His distribution of the tasks was chaotic, made worse by an increasing lack of communication between the two pilots.

The decision to hand control to the F/O was, in itself, not really a bad one. But he failed to brief the F/O on what his actions had been that had until that point been reasonably successful in keeping the aircraft under some sort of control.

It is easy to write this from the comfort of my desk chair, not so easy to make sense of conflicting alerts and inputs when in the air, trying to determine what caused them. Even worse if the cause is a system malfunction of which there is nothing, no information, available to the crew.

And so, sadly, the pilots became overwhelmed to the point that they could no longer cope. If only they had remembered that the selection of flaps stopped the MCAS pushing the aircraft into a dive from which it eventually could no longer recover. If only the captain had briefed the F/O, handing over control, to keep using the trim to counteract the MCAS. There are so many “if only’s” in this story. Starting with “If only Boeing would have…”

In my opinion Boeing bears the lion’s share (pun not intended) of the blame for this sad story. But the airline does not come out well, either. Maintenance? Cockpit crew management training? Adherence to procedures is such an important factor with major airlines, I wonder how good Lion Air’s crew training was. Reading this I am not getting a very high opinion of the airline.

But my thoughts go out to the families of all who lost their lives in this tragic event.

Let us hope that Boeing, and all manufacturers of aircraft and related and essential systems, as well as airworthiness authorities, will have learned the hard lessons from this, and the similar Ethiopian Airlines crash, and drawn the necessary conclusions.

There is a clear failure to understand human factors engineering here. It’s not just Boeing but also Airbus, the FAA and other regulatory bodies.

With a glass cockpit having huge screen real estate to display clear messages, instead the pilots get cryptic acronyms from the 1950s and 60s. Under extreme pressure their ability to process acronyms into words and then add the missing verbs becomes much more difficult.

This plane knew exactly what it was doing and why it was doing it, but totally failed to communicate any of that to the pilots. Instead they got a confusing jumble of multiple alarms which were essentially symptoms. At no point did the plane inform the pilots that MCAS had been activated.

To build on my last comment:

Is there any reason that the following could not be shown on the pilots display:

“Excessive AOA detected.

MCAS activated and trimming the plane down.”

Even though the pilots had never heard of MCAS, they would’ve immediately grasped the gist of the situation and been able to react correctly.

Two possible reasons:

Nobody thought of it — the system was supposed to be invisible. (Or somebody said “There should be a warning about this.” and their manager said it wasn’t necessary — possibly with a threatening side of “Isn’t your code good enough not to need warnings?”).

(cf Mendel last week) Boeing thought of it and decided against, on the grounds that there would be awkward questions if the message appeared.

Too many messages is also bad from a human factors perspective: it can lead to cognitive overload, or to people ignoring them altogether (which is why nobody reads EULAs).

What this aircraft needed was a working AOA disagree alert, set procedures to deal with that condition (#1: cut out stab trim), and a crew trained in that procedure. Or no MCAS and training in how to deal with high-AOA situations (the MCAS isn’t working any magic).

Failing that, a crew that communicates would have saved this aircraft, too: the captain assumed that the co-pilot had picked up on the trim problem, and the co-pilot assumed the captain had picked up on the flaps.

The problem is that this is really hard in high-stress situations, which is why a trained procedure is better.

What has been solved is the heads-down, paper-rustling search for the checklist: some aircraft simply show the appropriate checklist along with the error message on one of the cockpit displays, to be checked off item by item, putting both pilots on the same page without wasting time or focus. That’s good human factors engineering!

I absolutely agree an “AOA Disagree” warning was the minimum needed if MCAS was present.

In the absence of MCAS, given the nose up dangers, might you have needed an “AOA” Gauge to help detect the nose up under instrument conditions, plus that “AOA Disagree” light?

However, with MCAS present, an explicit “AUTO TRIM x DEG DOWN/UP” warning (if the number was say more than 0.5deg up/down), with an “AUTO TRIM DISABLE” switch to turn it off, might well have given a much more direct indication of the problem, and the solution.

Better still, the system needs to never apply more than a limited aggregate correction (as opposed to the actual behaviour of resetting that limit after each manual counteraction).
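The difference between an aggregate limit and a limit that resets can be sketched in a few lines of Python. This is a deliberately simplified model: the class, the units and the limit of 2.5 are hypothetical, and it only counts how much nose-down trim the automation is granted, not where the stabiliser actually ends up after the pilot trims back.

```python
class AggregateLimitedTrim:
    """Automation may apply at most `limit_units` of nose-down trim per budget."""

    def __init__(self, limit_units: float, reset_on_pilot_trim: bool = False):
        self.limit_units = limit_units
        self.reset_on_pilot_trim = reset_on_pilot_trim
        self.applied_units = 0.0  # nose-down trim granted since the last reset

    def request_nose_down(self, units: float) -> float:
        """Grant as much of the request as the remaining budget allows."""
        remaining = max(0.0, self.limit_units - self.applied_units)
        granted = min(units, remaining)
        self.applied_units += granted
        return granted

    def pilot_counter_trim(self) -> None:
        """Called whenever the pilot trims against the automation."""
        if self.reset_on_pilot_trim:
            # Budget restored: the resetting behaviour described in the comment.
            self.applied_units = 0.0


def total_granted(authority: AggregateLimitedTrim,
                  activations: int, units_each: float) -> float:
    """Alternate automatic nose-down requests with pilot counter-trim."""
    total = 0.0
    for _ in range(activations):
        total += authority.request_nose_down(units_each)
        authority.pilot_counter_trim()
    return total
```

With a true aggregate limit, five requests of 1.0 unit against a budget of 2.5 are granted 2.5 units in total and then nothing more. With `reset_on_pilot_trim=True`, every request is granted in full, so the total keeps growing for as long as the cycle repeats.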

Colin TD and Tammy have the finger at the root of the problem. Chip’s last comment is also telling.

Ray put it all in clear words.

But there is nothing really wrong with the use of abbreviations that originate from older generations of aircraft systems and electronics.

The problem has a lot to do with a move away from basic piloting skills in favour of being an expert in electronics. (I am, or was, basically a “sixties” pilot, with updates that brought my skills up to date with late-nineties electronics.)

The transplantation, if you like to call it that, of older terms and abbreviations into the newer systems made the transition easier, if not possible in the first place, for the older generation.

Already then I noticed that the younger generation of pilots were way ahead of me when it came to working with the electronics, programming the FMS, setting up the navigation displays etc.

But there was also a clear move away from “seat-of-the-pants” skills to a near total reliance on computers.

Boeing attempted to hide a “fix” without imposing a procedure or system that would alert pilots to a fault in that fix and train them to deal with it. And worse, without a back-up.

When that happened, when MCAS failed, the crew were not prepared for it. It also is evident to me that the airline had lax standards and crew coordination did not appear to have been high on their agenda when it came to training. Communication between the captain and his F/O broke down. I was not in that situation and I do not want to make accusations or suggestions that put blame on them. In my opinion the primary cause was Boeing’s policy to install a fix (for commercial reasons) without a secondary back-up and worse: withhold vital information from the operators (read crew). This in turn meant that they were neither trained to cope with an MCAS failure, nor even aware of its presence.

An interestingly related quote from a completely different issue: “‘No one at Facebook is malevolent,’ Haugen said during the interview. ‘But the incentives are misaligned, right?’” There’s no direct way to provide performance-based incentives for accidents that don’t happen (there’s nothing to measure), but incentives for getting a new aircraft out the door, and getting it out in quantity by convincing airlines and the FAA that it’s not all that new and that pilots can trivially transition to the new aircraft (cf. Rudy’s “withhold vital information”), are easy. Ideally, there would be bonuses for individual contributors (and maybe low-level managers) who can show convincingly that an accident could happen in plausible circumstances, just as software companies pay bounties to freelancers who discover bugs. But that’s harder to do when you’re selling hundreds of units instead of hundreds of millions, and nobody has the time or access to just try to break one of those few units; aircraft companies (not just Boeing) would need to make time for engineers to try to break what other engineers have built, and that would affect the sacred bottom line.

In software companies, breaking things is a formal job (Quality Assurance); Boeing probably thinks they have one or more QA divisions, but I wonder how interconnected they are — are any of the SWQA engineers also pilots? Software products are relatively easy to test because the people who test the alpha releases are acting as end users; testing software by shaking down the hardware it controls becomes more difficult as the hardware becomes larger and more complex, which suggests that there is more need for people who know the software to have time to imagine what happens in edge cases. (Some of this comes from discussions with a former USAF electronics engineer who knows more about the software/hardware interface than I do and was scathing about Boeing’s failures of thought.)

This is a long way from the discussions of piloting that are more common here, but the process of improving performance has led to aircraft that may be as far from the first passenger jets as those were from the kites-with-motors that the Wright brothers flew. I’m not sure why raw piloting skills such as Rudy endorses have faded, given that the main channel to working for a major airline still seems to involve flying lots of smaller, simpler, and maybe more dangerous (crop-dusting? banner-towing?) planes to build hours; possibly training for large planes needs to balance teaching systems with maintaining pilot sense? (My guess is that would make the courses take longer and cost more, again affecting the SBL.) OTOH, I wonder how much those skills are useful versus having to be unlearned on a sufficiently large and indirectly-controlled aircraft, and how much of those skills were, at best, approaching superstitions-that-mostly-worked: cf. arguments from when I was learning about whether downwind turns were different, or some of the I-can-do-anything attitudes that previous columns have exposed in old pilots, or even outright strangeness like the plausible report I read of a mid-century captain who insisted on flying 100 feet above or below an assigned altitude to stay out of the “dirty air” of planes ahead of him.

A question about something I haven’t seen discussed: why was it possible for the sensor to be misaligned? Is that fixable, and has it been fixed? Would delivering sensors that couldn’t be adjusted or aligned prevent this issue? Or was the sensor installed incorrectly, in which case was the mount changed to prevent this?

Sylvia wrote about the faulty sensor last year, in February, at https://fearoflanding.com/accidents/accident-reports/lion-air-610-the-faulty-aoa-sensor/ . The sensor has an alignment pin and can only be mounted one way to the aircraft, but the Florida outfit that refurbished it rotated some of the internal parts when putting it back together, and the error then escaped detection because of one inadequate and one omitted test.

The problem with seat-of-the-pants flying of modern heavy jets is that they require different pants than the smaller aircraft on which pilots may have started their flying careers. If I understand this correctly, if you disable the MCAS on a 737 Max in level flight and then push the throttle forward, you wait a while for the engines to spool up, then the aircraft pitches up because of the underslung engines, and when you return the throttle to where it was, it may keep pitching up even after the engine rpm has dropped back down. I doubt that happens on many other aircraft; hence the need for MCAS in the first place.

A well-designed indirect control is a boon here, because it allows a more intuitive translation of pilot intent to jet performance; the problem then shifts to what happens when the automation throws in the towel (e.g. because the pitot tubes iced up) and the pilots have to exert more direct control again.

I believe that at the extreme end of this path are modern fighter planes such as the Typhoon, which are deliberately unstable to increase maneuverability. When flying, you effectively have a computer constantly fighting the tendency of the plane to snap away from level flight, and all control is mediated via that flight controller – effectively MCAS on steroids.

For war this might well be the right balance, but for domestic flight there is a lot to be said for a large failsafe flight envelope…

Perhaps there is a need to provide a checklist for when the pilot cannot make any sense of the overwhelming reports. Perhaps the first check would be “fly the aircraft”, then, in priority order, which systems are immediately required to keep the aircraft in the air safely. Trouble is that those who need to propose such a checklist are, in the main, no longer with us. So often the purpose and function of the aircraft, to stay in the air, appears lost in translation. If my computer malfunctions I move into “safe mode”, which allows operation, albeit limited, of the vitals. Manual investigations can then be done with minimised risk. I know this might attract some comment, but what is keeping the aircraft in the air safely? Where to start? With the outcome of what’s recorded on the flight recorders.