Lion Air flight 610: In the Cockpit

On the 29th of October 2018, Lion Air flight 610, a Boeing 737-8 (MAX), crashed at Tanjung Karawang, West Java, after departing from Jakarta. This crash was the first public sign that something was wrong with the Boeing 737 MAX: the initial domino in a cascading sequence of events that uncovered an unbelievable quagmire of corporate malfeasance and corruption. At the time, the crash was blamed on faulty maintenance and the incompetence of the pilots. It took a second crash of a passenger flight full of innocent people, just a few months later, to focus attention on Boeing, and even then, Boeing and the FAA furiously defended the aircraft. By now, everyone has heard of the worldwide grounding of the aircraft as a result of concerns about MCAS, the Maneuvering Characteristics Augmentation System specific to the Boeing 737 MAX.

Over the past year, we’ve gone over the events leading up to that fateful day. I have tried to keep the posts as stand-alone as possible but I’m afraid that to understand the background to the situation in the cockpit, you really need to have read both Lion Air 610: The Faulty AOA Sensor and Lion Air flight 610: The Previous Flight. The primary resource for this sequence of events is the final report released by the Komite Nasional Keselamatan Transportasi (KNKT).

The flight crew arrived at Soekarno-Hatta International Airport in Jakarta on the morning of the 29th of October to prepare for Lion Air flight 610 to Depati Amir Airport in Pangkal Pinang. The morning seemed routine as the flight crew did their pre-flight briefing. They discussed the taxi route, the runway in use, the intended cruising altitude and the fact that the Automatic Direction Finder (ADF) was unserviceable. No discussion was recorded about the previous flight or the issues entered in the Aircraft Flight and Maintenance Log (AFML). The previous flight crew had not reported the full extent of the issues they had faced, but even if they had, it would not have mattered, as it appears this morning’s flight crew didn’t bother to check.

As they completed the Before Taxi checklist, the digital flight data recorder recorded a pitch trim of 6.6 units. Because the configuration of the aircraft defines what “in trim” means, those units can’t tell us whether the aircraft is pitching up or down, but they do give us a relative understanding of how the trim changed during the flight. The FDR records the pitch trim units continuously: I won’t list every pitch trim change, but it is useful to look at the units at key points, so we can get a feeling for how the pitch trim, and thus the pitch, changed during the flight.

The ground controller issued a taxi clearance for flight 610 and told the crew to contact Jakarta Tower. Jakarta Tower told them to line up on runway 25 left, where the crew performed the Before Takeoff checklist. The captain was to be Pilot Flying with the first officer as Pilot Monitoring.

Jakarta Tower issued the take-off clearance and the first officer read it back. At 06:20 local time (23:20 UTC), the FDR recorded that the TO/GA (takeoff/go-around) button was pressed and the engines spooled up for take-off.

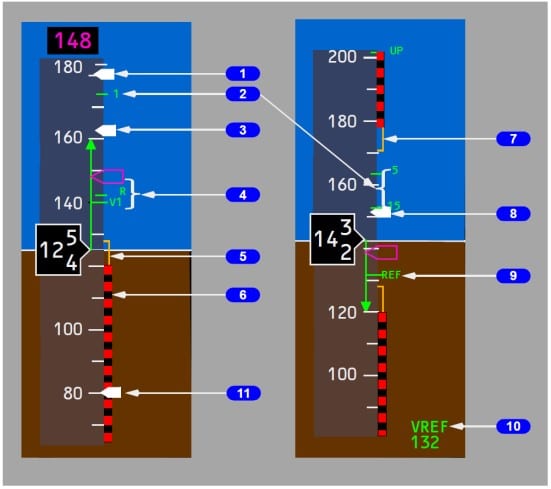

Fifteen seconds later, the first officer called out 80 knots. The crew did not know that a faulty AOA (Angle of Attack) sensor had been installed, but the problems it caused started immediately. The AOA sensors measure the angle between the wing’s mean aerodynamic chord and the direction of the relative wind. As they prepared to lift off, the flight data recorder recorded a difference between the left and right AOA sensor readings. The flight director (F/D) on the captain’s display showed 1° down, while on the first officer’s display it showed 13° up. The airspeeds also differed: the captain’s display showed 140 knots while the first officer’s showed 143 knots.

The first officer called “rotate” and the nose lifted ready to climb.

At that moment, the captain’s control column stick shaker activated.

A stick shaker consists of an electric motor and an unbalanced flywheel connected to the control column. An electrical current is sent when the AOA reaches unsafe values, which causes the stick shaker to vibrate and shake the control column. This alerts the pilot that the AOA urgently needs reducing. Once the AOA decreases, the electrical signal stops and the stick shaker stops.
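The on/off behaviour described above can be sketched as a simple threshold check. This is a minimal illustration, not the actual 737 stall-warning logic: the threshold value and the function names are hypothetical, real warning thresholds vary with flap setting and other factors, and the sketch assumes that each pilot's stick shaker follows the AOA sensor on that pilot's side, which is consistent with only the captain's column shaking on this flight.

```python
# Minimal sketch: each control column's stick shaker follows its own AOA sensor.
# STALL_WARNING_AOA_DEG is a hypothetical, fixed threshold; real systems vary
# the threshold with flap setting and other factors.
STALL_WARNING_AOA_DEG = 14.0


def stick_shaker_active(aoa_deg: float, threshold_deg: float = STALL_WARNING_AOA_DEG) -> bool:
    """Shake while the measured AOA is at or above the warning threshold."""
    return aoa_deg >= threshold_deg


def shaker_states(left_aoa_deg: float, right_aoa_deg: float) -> dict[str, bool]:
    """Assumption: captain's column uses the left sensor, first officer's the right."""
    return {
        "captain": stick_shaker_active(left_aoa_deg),
        "first_officer": stick_shaker_active(right_aoa_deg),
    }


if __name__ == "__main__":
    # Hypothetical readings: the left sensor reads far higher than the right.
    print(shaker_states(left_aoa_deg=25.0, right_aoa_deg=5.0))
    # {'captain': True, 'first_officer': False}
```

Once the measured AOA drops below the threshold, the function returns False and the shaker stops, matching the description above; with a sensor that reads too high, that may never happen.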

The stick shaker is meant to be a warning you can’t miss that something is wrong: effectively, it means that the aircraft is approaching a stall and does not have enough energy to maintain flight. It requires an immediate response: increasing the power, reducing the pitch (so the aircraft stops climbing and is able to increase its airspeed), or both.

So, as the aircraft reached take-off speed, the captain’s control column began vibrating violently. It would continue to shake for most of the flight.

Then the take-off configuration warning sounded. The first officer, in his role of Pilot Monitoring, called out to draw the captain’s attention to the warning: “Takeoff Config”. The captain muttered as he tried to work out what was going wrong. At that moment, the flight data recorder showed that the Boeing 737 MAX was pitched 7° nose-up.

The aircraft became airborne.

As they began to climb away from the runway, the first officer called out “Auto Brake Disarm” as a part of his take-off duties. Then he told the captain that they had an IAS (indicated airspeed) Disagree, which meant the aircraft had conflicting information about the speed at which it was travelling through the air. The captain’s display showed an indicated airspeed of 164 knots while the first officer’s display showed 173 knots. The IAS DISAGREE message remained displayed for the rest of the flight.

The first officer asked what the problem was and whether the captain intended to return to the airport. The captain did not acknowledge or respond to the first officer’s question. It was perhaps not the best moment to ask such a question, but the interaction gives us a clear first view of the crew resource management that day: the first officer doesn’t understand what is happening and looks to the captain for guidance, which the captain does not give.

The first officer called out “auto brake disarmed” again which this time was acknowledged by the captain. The landing gear lever moved to the UP position.

Jakarta Tower asked the flight crew to change frequency to the Terminal East controller.

The first officer advised the captain that the ALT DISAGREE message had appeared, meaning that the aircraft had conflicting information about its altitude. The altitude on the captain’s primary flight display indicated 340 feet and the first officer’s indicated 570 feet. The captain acknowledged this.
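Both DISAGREE messages come from comparing the captain's and the first officer's values for the same quantity. The sketch below shows the general idea with the readings quoted above. The thresholds (5 knots, 200 feet), the five-sample persistence and all names are my assumptions for illustration, not figures taken from the KNKT report or Boeing documentation.

```python
# Minimal sketch of a "disagree" alert: compare two independent readings and
# raise the alert only when they differ by more than a threshold for several
# consecutive samples. Thresholds and persistence are assumed values.
IAS_THRESHOLD_KT = 5.0
ALT_THRESHOLD_FT = 200.0
PERSISTENCE_SAMPLES = 5  # e.g. five one-second samples


def disagree(captain: list[float], first_officer: list[float],
             threshold: float, persistence: int = PERSISTENCE_SAMPLES) -> bool:
    """True once |captain - first_officer| > threshold for `persistence` samples in a row."""
    run = 0
    for cpt, fo in zip(captain, first_officer):
        if abs(cpt - fo) > threshold:
            run += 1
            if run >= persistence:
                return True
        else:
            run = 0  # the difference must persist without interruption
    return False


if __name__ == "__main__":
    # Readings from the text, held constant for five samples for illustration.
    ias = disagree([164.0] * 5, [173.0] * 5, IAS_THRESHOLD_KT)  # 9 kt apart
    alt = disagree([340.0] * 5, [570.0] * 5, ALT_THRESHOLD_FT)  # 230 ft apart
    print(ias, alt)  # True True
```

The persistence requirement is a common design choice to stop a brief glitch on one side from triggering an alert; a sustained mismatch like the one on this flight triggers it anyway.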

The first officer switched frequencies and contacted the Terminal East controller, who replied that the aircraft had been identified on the radar display and asked them to climb to flight level 270 (27,000 feet).

The first officer asked the Terminal East controller to confirm the aircraft’s altitude as shown on the radar display. The controller responded that the aircraft showed at 900 feet. The captain’s altimeter showed 790 feet and the first officer’s showed 1,040 feet. It was 90 seconds since they had become airborne.

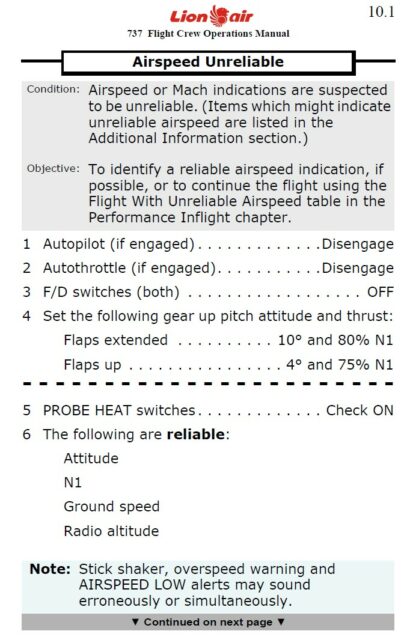

The captain asked the first officer to perform the memory items for airspeed unreliable.

The first officer didn’t respond. Instead, he asked the captain which altitude he should request from the Terminal East controller. He suggested that the captain should fly downwind, which the captain rejected.

The captain twiddled the heading bug to start a turn to the left and told the first officer to request clearance to any holding point.

The first officer contacted Terminal East to ask for clearance “to some holding point for our condition now”, explaining that they had a flight control problem.

The controller didn’t offer a clearance. When asked later, the controller only remembered that the flight crew had reported a flight control problem but not that they’d asked for a holding point. Communication seemed to be breaking down all around.

Everything was happening too quickly in the cockpit. Suddenly, the first officer realised they were still flying with the flaps extended and asked whether the captain wanted flaps 1, to which the captain agreed. The first officer moved the flaps from flaps 5 to flaps 1. About ten seconds later, the captain asked the first officer to take control. The first officer responded with “Standby,” presumably meaning “give me a second.”

The Terminal East controller watched the flight on the radar display and noticed that the aircraft had descended by about 100 feet. He called to ask their intended altitude, effectively checking whether they meant to be descending.

The captain’s altimeter indicated 1,600 feet and the first officer’s indicated 1,950 feet. The first officer asked whether he should continue the flap reconfiguration. When the captain agreed, he moved the flaps into the UP position. The captain’s indicated airspeed showed 238 knots while the first officer’s indicated airspeed was 251 knots. Realising that the controller was still waiting for an answer, the first officer asked the captain if 6,000 feet was the altitude they wanted. “5,000 feet,” said the captain.

The first officer made the call, and the controller responded that they should climb to 5,000 feet and turn left to a heading of 050°. The first officer acknowledged.

That was when the Extended Ground Proximity Warning System sounded with a call of BANK ANGLE, BANK ANGLE. The aircraft roll had reached 35° right as the flaps reached the fully retracted position.

In the midst of all this chaos, the automatic trim suddenly activated for about ten seconds, trimming the aircraft nose down. This was the MCAS activating, a system which neither the captain nor the first officer knew anything about. At the same time, the horizontal stabilizer pitch trim decreased from 6.1 units to 3.8 units.

The captain shouted “Flaps 1” and the flaps began extending. The main electric trim moved the stabilizer in the aircraft nose up (ANU) direction for five seconds, which would have been the captain correcting for the nose down movement and putting the aircraft back into the climb. The pitch trim increased to 4.7 units.

This is very interesting because the MCAS stability augmentation function, meant to improve the aircraft’s handling characteristics, would kick in under very specific circumstances:

- The aircraft’s AOA value (as measured by either AOA sensor) exceeded a specific threshold,

- the aircraft was in manual flight (autopilot not engaged),

- the flaps were fully retracted.

If all three of these were true, the MCAS responded to the AOA value by moving the stabiliser trim nose down. Because one of the AOA sensors was faulty, the MCAS was incorrectly moving the stabiliser trim nose down. The captain may not have understood exactly what was going on, but he had clearly concluded that the issue started when the flaps were fully retracted, and he responded by calling for flaps 1. It seems likely that he selected them himself, as the flaps started extending the moment he called out.
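The three activation conditions listed above can be written as a single predicate. This is a hedged sketch of the logic as described in this post, not Boeing's flight control software: the AOA threshold, the `FlightState` type and the function name are hypothetical. It reads one AOA value, so a single faulty sensor is enough to satisfy the first condition, and extending the flaps (as the captain did) makes the whole predicate false.

```python
from dataclasses import dataclass

# Hypothetical threshold, for illustration only.
MCAS_AOA_THRESHOLD_DEG = 10.0


@dataclass
class FlightState:
    aoa_deg: float           # AOA from the one sensor this sketch reads
    autopilot_engaged: bool
    flaps_retracted: bool    # True only when the flaps are fully UP


def mcas_should_trim_nose_down(state: FlightState,
                               threshold_deg: float = MCAS_AOA_THRESHOLD_DEG) -> bool:
    """All three conditions from the list above must hold at once."""
    return (
        state.aoa_deg > threshold_deg
        and not state.autopilot_engaged
        and state.flaps_retracted
    )


if __name__ == "__main__":
    # A sensor reading too high, manual flight, flaps UP: activation.
    print(mcas_should_trim_nose_down(FlightState(20.0, False, True)))   # True
    # Same faulty reading, but flaps extended to 1: no activation.
    print(mcas_should_trim_nose_down(FlightState(20.0, False, False)))  # False
```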

As they reached 5,000 feet, the first officer called out the altitude. The selected altitude on the Mode Control Panel had been set to 11,000 feet, and over the next six seconds it was decreased to 5,000 feet: one of the flight crew belatedly setting their desired altitude.

Suddenly, the aircraft descended at a rate of up to 3,570 feet per minute, immediately losing about 600 feet of altitude. The pitch trim was at 4.4 units. The flaps stopped extending as they reached position 1.
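To put "immediately" in perspective: even at the peak rate of 3,570 feet per minute, losing 600 feet takes about ten seconds. Because the rate was "up to" that figure, the actual loss took at least that long. A quick check, with a hypothetical helper name:

```python
def seconds_to_lose(altitude_ft: float, rate_fpm: float) -> float:
    """Time in seconds to lose `altitude_ft` at a constant `rate_fpm` (feet per minute)."""
    return altitude_ft / rate_fpm * 60.0


if __name__ == "__main__":
    # 600 ft at the peak recorded rate of 3,570 ft/min
    print(round(seconds_to_lose(600, 3570), 1))  # 10.1
```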

The captain’s control column stick shaker finally stopped. The left AOA sensor recorded 18° up and the right AOA sensor recorded 3° down. The aircraft continued to plummet. The barber pole appeared on the captain’s flight display.

The “barber pole” appears on the primary flight display as a quickly recognisable indication that the speed is too high or too low. The maximum operating speed, that is, the maximum speed at which the aircraft is safe to manoeuvre without fear of breaking up, is called VMO. This speed has to be calculated, as it changes with the aircraft configuration, atmospheric conditions and altitude. In most aircraft, the VMO needle which indicates the maximum operating speed has red and white stripes, hence the name.

The Boeing 737 MAX has a flat panel display which also uses the barber poles (in red and black instead of red and white) to show the minimum speed, with yellow bands in the middle to show where manoeuvring capability begins to decrease.

The low-speed barber pole appeared on the captain’s primary flight display, with the top of the pole at 285 knots. The stick shaker and the speed barber poles were both giving the flight crew false indications that their airspeed was too low, because they were being fed wrong AOA data from the faulty sensor. Two minutes had elapsed since take-off.

The first officer asked the controller to relay the speed indicated on his radar display. The automatic trim activated nose-down again, this time for eight seconds. The Extended Ground Proximity Warning System sounded again with an urgent warning: AIRSPEED LOW – AIRSPEED LOW.

The controller reported that the ground speed of the aircraft as shown on the radar display was 322 knots. The captain’s PFD indicated 306 knots and the first officer’s showed 318 knots. Their airspeed was not low.

Someone in the cockpit, almost certainly the captain, selected flaps 5, and the flaps began to extend again from position 1 to position 5. At the same time, the captain commanded nose-up trim for five seconds, with the pitch trim recorded at 4.8 units. The automatic nose-down trimming had ceased.
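Because the trim units only make sense relative to each other, it can help to line up the values quoted so far and look at the change between each pair. The values below come straight from this post; the labels and the helper name are mine, and the points are not evenly spaced in time.

```python
# Pitch trim values (units) quoted in this post, in the order they were mentioned.
trim_points = [
    ("Before Taxi checklist", 6.6),
    ("before first automatic nose-down trim", 6.1),
    ("after first automatic nose-down trim", 3.8),
    ("after captain's nose-up trim", 4.7),
    ("during the descent", 4.4),
    ("after second nose-up trim", 4.8),
]


def trim_changes(points: list[tuple[str, float]]) -> list[tuple[str, float]]:
    """Change in trim units from each point to the next (negative = nose-down direction)."""
    return [
        (label, round(value - prev_value, 1))
        for (_, prev_value), (label, value) in zip(points, points[1:])
    ]


if __name__ == "__main__":
    for label, delta in trim_changes(trim_points):
        print(f"{label}: {delta:+.1f}")
```

The largest single swing is the first MCAS activation (2.3 units nose-down), and the captain's manual inputs never fully recover it.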

I hate to split things into two without making them stand-alone but in this case, there wasn’t much of a choice. I’ll be back next week with the second half of this post in which we follow the situation in the cockpit of Lion Air 610 until the fatal crash of the flight.

There are 3 conditions for the MCAS to activate:

The mismounted AOA sensor directly caused condition 1).

In the “previous flight” blog post, Sylvia told us:

I don’t really understand why this happens, but as Sylvia explains in this post, it led directly to the autopilot getting disengaged (via the “unreliable airspeed” memory item), thus fulfilling condition 2).

This meant that it was enough for 1 thing to fail to get 2/3 of the way to disaster, even though it looks otherwise at first glance.

It’ll be interesting to hear next week why the aircraft started sinking. On the previous flight, the third pilot in the cockpit had drawn attention to the trim problem, which eventually led the captain to CUT OUT the STAB TRIM. I expect the imminent danger of the aircraft sinking will prevent this crew from following that same line of reasoning, though I wonder what’s going to happen with the flaps.

With so many conflicting indications, it is easy to see how the crew lost the overall “picture”, the overview of what was going on, which would have been essential to avoiding the crash.

Add to the conflicting indications the malfunctioning MCAS, which was a patch for an inherent aerodynamic problem. Then add that the pilots were neither aware of nor trained to respond to an MCAS malfunction, and it becomes clear that an accident was all but unavoidable. The cockpit crew coordination and communication in general seem to have been somewhat lacking, but that was probably not a contributory factor to the crash.

I think the software should make highly visible alerts with suggestions and condition reports, but not take over the handling of the plane. That seems like a very bad idea. Sensors fail, programs have bugs, the humans should be allowed to have control at all times that autopilot is disengaged.

Boeing generally seems to share your attitude, but in this case, to be able to claim there was no need to retrain 737 pilots, they decided to include MCAS in the control loop as an “invisible helper”. If the system had been meant to be troubleshot by the crew, retraining would have been obligatory, which would have cost Boeing $1 million per aircraft delivered to Southwest Airlines.

I get the feeling that with that FO, the captain was basically in the plane by himself. I think the FO was good for holding down papers, but not much else.

Am I wrong?

Correct me if I’m wrong here. There are two AOAs in the MAX but MCAS is configured to use only one. It is important to explicitly mention here that the MCAS was using the ‘faulty’ AOA and not the other one. That was a significant part of the whole problem.

Related to that, a couple of points worth noting:

Boeing tested two potential failures of MCAS: a high-speed maneuver in which the system doesn’t trigger, and a low-speed stall when it activates but then freezes. In both cases the pilots were able to easily fly the jet. In those flights they did not test what would happen if MCAS activated as a result of a faulty angle-of-attack sensor, the problem in both crashes (Lion Air and the Ethiopian one).

Found that mentioned in a New York Times article.

Another point:

Also, Mr. Forkner, who was the chief technical pilot overseeing the development of the MAX, did not actually fly the plane. The NY Times article mentioned the following: “‘Technical pilots at Boeing like him previously flew planes regularly. Then the company made a strategic change where they decided tech pilots would no longer be active pilots,’ Mr. Ludtke said. Mr. Forkner largely worked on flight simulators, which didn’t fully mimic MCAS.”

A few very interesting and valid comments have been made here.

Yes, it should be a “given” that pilots can assume control without their actions being countermanded by a faulty system.

The F/O was possibly not very experienced, but the captain was overwhelmed by the multiple and conflicting alerts. Cockpit crew coordination seemed to have broken down.

And Jay’s comments are telling. A malfunctioning sensor effectively taking control? That should never have been allowed to happen.

Part of the problem is that the 737 is an old “fly-by-cable” design, and the 737 MAX was retrofitted with “software control” in the form of MCAS so that pilots already type-rated on the 737 could fly it with no more training than watching a video; this was so that it would sell better.

The Airbus jets have had software control (“envelope protection”) designed into them from the start, and that design and the accompanying procedures appear to be quite safe.

The 737 MAX might have been as safe (and probably is, now that it’s flying again) with the proper cockpit displays (a working “AOA disagree” alert on every aircraft) and properly trained procedures to disable it (turning the electric trim off did it in the old version; I don’t know what the “new and approved” design looks like).

IIRC, “AOA disagree” would be on top of an option: having a 2nd AOA detector at all. I agree that having a warning would be a good thing, although having 3 sensors and cutting out the bad one might be even better. (The Space Shuttle had five computers, plus a mechanism to compare their results to exclude one if it disagreed with the others.) And Boeing’s schtick was that transitioning to the MAX was trivial; adding training would have involved letting engineering overrule marketing, where (from what I read) the marketroids took over the company some time ago.
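The "three sensors, cut out the bad one" idea is essentially mid-value selection: pass on the median of three readings, so one wildly wrong sensor cannot drive the output, and flag any sensor far from that median. A minimal sketch with hypothetical names and a hypothetical tolerance; it illustrates the commenter's suggestion, not how the 737 MAX works.

```python
from statistics import median

# Hypothetical tolerance for flagging a sensor as disagreeing with the voted value.
MAX_DEVIATION_DEG = 5.0


def vote_aoa(readings: list[float], max_dev: float = MAX_DEVIATION_DEG) -> tuple[float, list[int]]:
    """Mid-value select over three AOA readings.

    Returns the median (the value passed on to other systems) and the indices
    of any sensors that deviate from it by more than `max_dev`.
    """
    if len(readings) != 3:
        raise ValueError("expected exactly three readings")
    voted = median(readings)
    outliers = [i for i, r in enumerate(readings) if abs(r - voted) > max_dev]
    return voted, outliers


if __name__ == "__main__":
    # Hypothetical readings: sensor 0 reads about 20 degrees too high.
    print(vote_aoa([25.0, 5.0, 4.5]))  # (5.0, [0])
```

With only two sensors there is no median to fall back on: the system can detect a disagreement but cannot tell which sensor is wrong.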

I understand that the 737 MAX always had two AOA sensors fitted by design; this was not an option.

Aviation Today, May 6th 2019:

Thanks, Sylvia, for your hard work. You put us in the cockpit of this doomed flight (and now you leave us there for one full week :). As a software designer myself, I’ve seen things you wouldn’t believe :). For me, allowing the software to change the aircraft configuration when two inputs that should agree in fact disagree is a fatal flaw. I do not understand why anyone in their right mind would specify this: “The aircraft’s AOA value (as measured by either AOA sensor) exceeded a specific threshold,” it’s nonsense. If two signals that must be equal are different, then without a third one to settle it, the rule must be invalidated. And this brings me to a question (which also applies to AF447): shouldn’t all aircraft have a “reduced panel of instruments”, as mechanical as possible, with only airspeed, attitude, altimeter, turn coordinator, heading, VSI and engine settings (and, maybe, flaps and landing gear indicators), plus a switch to obscure all the other indicators (or better, the glass covering them would become opaque at the flip of a switch)? No stick shaker, no alarms, no ground altimeter, no overspeed horn… nothing. Pilots could then concentrate on flying the plane. And once the aircraft is stabilized (at least using mechanical inputs only), they switch everything (or maybe everything but the alarms) back on. And, of course, in the case of fly-by-wire, commands must respond exclusively to pilot inputs (coming from one of the pilots only).
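The rule argued for above, that two signals which should agree must block the decision when they don't, can be sketched as a gate in front of the activation logic. This is a hypothetical illustration of that argument only: it is neither the original MCAS (which, as discussed in these comments, read a single sensor) nor a description of Boeing's later fix, and the tolerance value and function names are assumptions.

```python
from typing import Optional

# Hypothetical maximum allowed difference between the two AOA sensors.
AOA_AGREE_TOLERANCE_DEG = 5.0


def validated_aoa(left_deg: float, right_deg: float,
                  tolerance_deg: float = AOA_AGREE_TOLERANCE_DEG) -> Optional[float]:
    """Return a usable AOA only when both sensors agree; otherwise None."""
    if abs(left_deg - right_deg) > tolerance_deg:
        return None  # disagreement: no automatic action should rely on AOA
    return (left_deg + right_deg) / 2.0


def may_trim_nose_down(left_deg: float, right_deg: float, threshold_deg: float) -> bool:
    """Allow automatic nose-down trim only on a validated AOA above threshold."""
    aoa = validated_aoa(left_deg, right_deg)
    return aoa is not None and aoa > threshold_deg


if __name__ == "__main__":
    # Readings quoted in the post: left 18 degrees up, right 3 degrees down.
    print(validated_aoa(18.0, -3.0))                            # None
    print(may_trim_nose_down(18.0, -3.0, threshold_deg=10.0))   # False
```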

The software was using input from only ONE sensor and not both. So it never had another value to compare it to, and it didn’t even know it was a faulty sensor. Originally MCAS did use two sensors, but somewhere down the line it was changed to use only one. I haven’t yet found an article describing why, but I’m sure it’s out there.

Here’s a blurb from one of the articles describing this (link to the article at the bottom):

“At first MCAS wasn’t a very risky piece of software. The system would trigger only in rare conditions, nudging down the nose of the aircraft to make the Max handle more smoothly during high-speed moves. And it relied on data from multiple sensors measuring the plane’s acceleration and its angle to the wind, helping to ensure that the software didn’t activate erroneously.

Then Boeing engineers reconceived the system, expanding its role to avoid stalls in all types of situations. They allowed the software to operate throughout much more of the flight. They enabled it to aggressively push down the nose of the plane. And they used only data about the plane’s angle, removing some of the safeguards.

A test pilot who originally advocated for the expansion of the system didn’t understand how the changes affected its safety. Safety analysts said they would have acted differently if they had known it used just one sensor. Regulators didn’t conduct a formal safety assessment of the new version of MCAS.

Single sensor

The current and former employees, many of whom spoke on the condition of anonymity, said that after the first crash they were stunned to discover MCAS relied on a single sensor.”

https://www.irishtimes.com/business/manufacturing/fatal-flaw-in-boeing-737-max-traceable-to-one-key-late-decision-1.3912491

As a long-time (now retired) software engineer, I mostly agree with the above comments about proper design — but I also note that software design is subject to GIGO (garbage in, garbage out). If you give someone a very limited set of parameters and tell them “solve this problem”, they may not have the authority, the time, or the imagination to deal with problems outside that envelope.

This assumes that the coders are suitably cautious. I couldn’t tell you how many bugs fell into my lap (because I owned a couple of functionalities that touched on a lot of others) that turned out to be somebody giving somebody else memory to write an answer in without telling them how big it was, and somebody-else assuming they could write anything in that memory and overrunning into somebody-else^2’s memory. This particular error is probably less common in post-1980s programming languages (some of which have something similar to the overrun zones now mandated at large airports), but I suspect those languages leave room for even harder-to-find errors.

Thanks, Chip. The B737 MAX issue was not a case of software error (which may go undetected for a long while), but a specification error, clearly visible to anyone who knows what are broadly called “Good Engineering Practices”. There is no need to understand the subtleties of a “stack overflow” to see that if a system has two different inputs that must agree, and also a subsystem to raise a caution flag when they differ, making a decision using only one is equivalent to overriding the dual input. It was clearly a case of Garbage In. Boeing is of course at fault: not the programmers, but the management. And, of course, the FAA. This raises an interesting discussion (although probably not here) about what the FAA’s level of inspection should be, and not only for software (it makes no sense for them to rely on the manufacturer’s tests; this, again, is against Good Engineering Practice). For instance, should the FAA approve only the tyres’ performance (tread, slippage, temperature), or should it also approve their internal structure and materials?

My thought here is that this “error” might have been by design; obviously that’s a speculation at this point, but we do know that Boeing management was very keen to sell the 737 MAX as an upgrade that required no retraining.

The FAA would have noticed that the MCAS can fail if the documentation had specified the use of two sensors and a failure mode; there would need to have been a process/checklist for pilots to follow when that happened, and they’d have to be trained in it. It seems that the FAA overlooked this. And it’s certainly helpful if no FAA agent ever sees the “AOA disagree” alert in any of the test flights, because then it would be obvious that it, too, would need to trigger a process. So by keeping the MCAS restricted to one sensor, and the alert “accidentally” turned off, the FAA never noticed that MCAS really does require pilot training when they certified the type. Which happens to have been very convenient for Boeing and its main goal.

So, incompetence or malice? I haven’t made up my mind yet.

I am in agreement with Mendel, but I do not want to call it “malice”. More like a victory of the bean-counters over engineering and good practice.

Malice would mean some kind of intent, and that is just one step too far.

But Boeing certainly seems to have been able to pull the wool over the FAA’s eyes during the certification process. And it would seem obvious that Boeing abused its, until then very good, relationship with the FAA for its own commercial interest. The FAA seems to have sleepwalked into a trap set by this cosy arrangement, too cosy as it turned out.

The company will overcome its financial loss. The families who lost loved ones will never overcome theirs.