Lion Air 610: The Faulty AOA Sensor

Previously, I stepped through the maintenance of the aircraft registered PK-LQP, which was destroyed in the crash of Lion Air flight 610 on the 29th of October 2018.

From that post, it is clear that the aircraft was released back into service with a faulty AOA sensor on the left (Captain’s) side. Now I’d like to look at that sensor and piece together what happened.

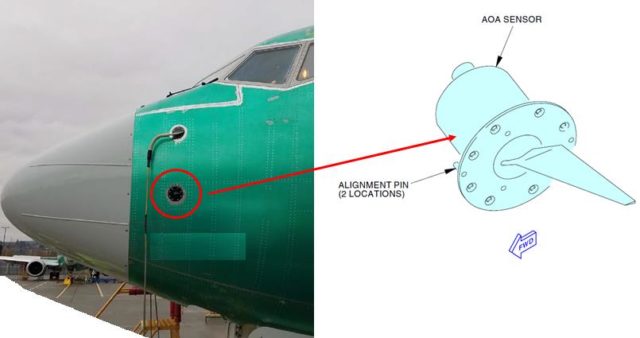

The Boeing 737-8 (MAX) has two independent angle of attack (AOA) sensors which are installed just behind the nose on either side of the aircraft.

In basic terms, the angle of attack allows us to understand the amount of lift the wing is generating. The key external component of the AOA sensor installed on the Boeing 737 MAX is a vane which rotates to align with the airflow. The vane is connected to two internal resolvers, each of which independently measures the rotation angle. The vanes then provide the measured angle of the direction of the airflow relative to the fuselage.

One resolver is connected to the Air Data Inertial Reference Unit (ADIRU) computer for its side (left or right) and the second resolver is connected to the respective Stall Management Yaw Damper (SMYD).

The Air Data Inertial Reference Unit combines and measures various information to provide inertial position and track data, as well as attitude, altitude and airspeed data, to the flight deck displays. So the data from the left-side AOA sensor is processed and passed on by the left side ADIRU to the captain’s flight displays.

The Stall Management Yaw Damper (SMYD) uses the information from the other resolver to calculate and send commands to the Stall Warning System. The left SMYD, with information provided from the left AOA sensor, will activate the captain’s stick shaker based on a number of factors, including an excessive angle of attack as measured by the AOA sensor.

SPD Flag (amber) means the computed airspeed indication is inoperative.

In the post about the maintenance history of the aircraft, we saw that the left-side AOA sensor was replaced on the 28th of October 2018 after multiple reports of speed and altitude flags appearing on the Captain’s primary flight display which had been connected to maintenance messages of faults related to the SMYD.

The sensor which was removed was returned to Batam Aero Technik (part of the Lion Air Group), who handed it over to the investigation after the crash.

The Component Maintenance Manual required that the test be done using the output from Resolver 1. Resolver 1 was fine and the AOA sensor passed testing when its output was used. However, when Resolver 2 was tested, the recording instrument could not interpret the output.

A further resolver accuracy test was performed with the internal heaters operating, to determine if unintended electrical coupling was occurring between the heaters and the resolvers.

The first two measurements taken on Resolver 2 showed that the values were unstable similar to values observed in previous resolver accuracy testing. Once the unit warmed up with the heater operation the unit resolver 2 output stabilized and was within the CMM performance requirements. The remaining Resolver 2 values were found within limits. The first two measurements were re-taken and were found within limits. The vane and case heaters were turned off and the values for Resolver 2 went unstable after 12 minutes and 51 seconds. The sine and cosine signals (observed on an oscilloscope) were also being observed and went to zero when the API output went unstable.

That is, the output from Resolver 2 was correct when the internal heaters were operating but stopped working again when the temperature dropped.

The investigation discovered that a loose loop of the very fine magnet wire from the primary rotor coil was trapped in the epoxy which was meant to hold the end cap insulator in place. The trapped magnet wire thus adhered to both the end cap insulator and the rotor shaft insulator which had very different coefficients of thermal expansion (CTE). As the wire expanded and contracted to the two different environments, the wire became fatigued and showed multiple ridges and cracks before breaking. The wire failure created an intermittent open circuit, dependent on temperature. The sensor worked fine at temperatures above 60°C (140°F) but failed at temperatures below that.
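To make the temperature dependence concrete, here is a minimal sketch of the fault as described above. It is not taken from the investigation: the function names and the temperature trace are invented for illustration, and the only figure from the text is the 60°C (140°F) threshold.

```python
# Threshold reported in the text: the broken wire made contact above
# roughly 60 degrees C and opened below it.
OPEN_CIRCUIT_BELOW_C = 60.0


def c_to_f(temp_c: float) -> float:
    """Convert Celsius to Fahrenheit."""
    return temp_c * 9.0 / 5.0 + 32.0


def resolver_signal_present(temp_c: float) -> bool:
    """Toy model: the resolver output exists only while the unit is warm.

    How the real unit behaved at exactly the threshold is not stated,
    so the strict comparison here is an assumption.
    """
    return temp_c > OPEN_CIRCUIT_BELOW_C


# Hypothetical temperatures: cold unit, heaters on, heaters off again.
trace_c = [20.0, 45.0, 65.0, 75.0, 55.0]
status = [resolver_signal_present(t) for t in trace_c]
threshold_f = c_to_f(OPEN_CIRCUIT_BELOW_C)
```

In this toy trace the signal is missing on the cold bench, present once the heaters have warmed the unit, and missing again after cooling, which matches the pattern the bench test found.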

This explains the ongoing issues in the weeks before the crash. However, a new AOA sensor was installed on the left side in Denpasar. This sensor could not be tested in the same way, as it was completely destroyed in the crash. The only course open to the investigators was to track the maintenance history of the sensor.

The sensor had previously been installed on the right side of a Boeing 737-900ER operated by Malindo Air. Malindo Air’s maintenance had removed the sensor on the 19th of August 2017 after maintenance reported that the SPD and ALT flags appeared on the first officer’s primary flight display during a pre-flight check. Analysis of the aircraft data showed that fifteen flights had recorded “no computed data” for the right flight director parameter, so presumably the SPD and ALT flags had appeared fifteen times on the first officer’s display before the sensor was replaced.

The sensor was returned to Batam Teknik who sent it to Xtra Aerospace in Florida to be repaired.

The Work Order at Xtra Aerospace noted that the sensor had been removed as the speed and altitude flags had displayed and the speed and altitude indications had not appeared. The preliminary inspection showed that the unit was in “fair but dirty” condition. It failed the operational test which was recorded as caused by an eroded vane causing erroneous readings.

They disassembled the unit, replaced the vane, calibrated the unit and put it back into testing. The work order notes that the required tests were satisfactory. Xtra Aerospace approved the unit for return to service in November 2017 and returned it to Malindo Air who returned it to Batam Teknik in December. That unit was then sent to Denpasar on the 28th of October 2018.

The investigators went to Xtra Aerospace to review and document the maintenance records, the test equipment and the procedures used during the repair process. The interesting thing they discovered was the process of the vane-slinger-shaft assembly removal and replacement, as per the Component Maintenance Manual.

During this process, the resolver gears and damper gear are disengaged from the main gear. This allows them to rotate independently, as the main gear is fixed to the vane shaft. The main gear is then removed from the vane-slinger-shaft.

So there is nothing to keep the resolvers in their original position and they can independently rotate to a new position.

The angle is verified in an Alignment Accuracy test which is measured using an Angle Position Indicator. The Component Maintenance Manual specifies a specific Angle Position Indicator but notes that equivalent substitutes may be used. The Angle Position Indicator used by Xtra Aerospace offered a level of accuracy equal to or better than the recommended unit and the FAA had approved the equipment as in conformance with the Component Maintenance Manual.

However, that Angle Position Indicator had an extra function, which was a relative mode for testing. The equipment in the manual only allowed for absolute testing and so there was no reference to relative mode. Xtra Aerospace did not develop any specific instructions for the use of their Angle Position Indicator, which would have drawn attention to the existence of the two modes. The FAA should have required this to be documented but had overlooked the lack of a written procedure.

As a test, the technicians developed a procedure to test whether a 25° bias could inadvertently be introduced into both resolvers as part of the vane-slinger-shaft replacement.

Resolvers are calibrated to output 45° when the vane is at its zero position. If the Angle Position Indicator is set to absolute, then the resolver output reads as 45°. However, if the switch is then moved to the relative position, the Angle Position Indicator reads as 0°, as the 45° offset has been established. If you move the AOA vane to a new position, the Angle Position Indicator will display the actual angle minus 45°. The 45° offset is constant through the full range of the vane rotation as long as the REL/ABS switch remains in the relative position.
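The arithmetic of the two modes can be sketched in a few lines. This is a toy model, not the real instrument: the class and function names are hypothetical, and it assumes the resolver angle tracks the vane angle one-to-one with the 45° calibration offset added.

```python
from dataclasses import dataclass

RESOLVER_ZERO_DEG = 45.0  # resolver output at vane zero, per the text


@dataclass
class AnglePositionIndicator:
    """Toy indicator with an ABS/REL switch (hypothetical model)."""

    relative: bool = False
    reference_deg: float = 0.0

    def select_relative(self, current_input_deg: float) -> None:
        # Moving the switch to REL captures the current input as the zero point.
        self.relative = True
        self.reference_deg = current_input_deg

    def select_absolute(self) -> None:
        self.relative = False

    def display(self, input_deg: float) -> float:
        if self.relative:
            return input_deg - self.reference_deg
        return input_deg


def resolver_output(vane_deg: float) -> float:
    """Assumed 1:1 relationship between vane angle and resolver angle."""
    return vane_deg + RESOLVER_ZERO_DEG


api = AnglePositionIndicator()
abs_at_zero = api.display(resolver_output(0.0))   # absolute mode, vane at zero
api.select_relative(resolver_output(0.0))         # switch moved to REL at vane zero
rel_at_zero = api.display(resolver_output(0.0))   # relative mode, vane at zero
rel_at_ten = api.display(resolver_output(10.0))   # relative mode, vane at +10
```

In absolute mode the display shows the resolver angle itself (45° at vane zero). After switching to relative at vane zero, every reading is the resolver angle minus 45°, so the display shows 0° at vane zero and 10° with the vane at +10°.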

In the test in which the technicians introduced a 25° error, they found that if the REL/ABS toggle switch (relative/absolute) in the Angle Position Indicator was selected to the REL position, then the unit would pass testing, even though the angles recorded after the testing showed a 25° bias over the full range of vane travel.

It is impossible to say for certain whether this is what happened to the replacement AOA sensor. However, the investigators were able to demonstrate that in this case, an AOA sensor which was calibrated and tested could result in an equal bias introduced into both resolvers. This bias was not visible during AOA sensor calibration and was not detected in the return-to-service testing as required by the Component Maintenance Manual (CMM).
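A rough sketch of why such a bias could slip through follows. It rests on assumptions that are mine, not the report's: that the reference captured in relative mode happens to equal the bias set into the resolvers, that the resolver follows the vane one-to-one, and that the tolerance and test points are the placeholder values shown.

```python
RESOLVER_ZERO_DEG = 45.0   # resolver output at vane zero, per the text
TOLERANCE_DEG = 0.5        # hypothetical pass/fail tolerance
VANE_POINTS_DEG = (-30.0, -15.0, 0.0, 15.0, 30.0)  # hypothetical test points


def resolver_output(vane_deg: float, bias_deg: float) -> float:
    """Resolver angle for a vane angle, including any calibration bias."""
    return vane_deg + RESOLVER_ZERO_DEG + bias_deg


def indicator_reading(input_deg: float, relative: bool, reference_deg: float) -> float:
    """Displayed value: the input itself in ABS, input minus reference in REL."""
    return input_deg - reference_deg if relative else input_deg


def passes_accuracy_test(bias_deg: float, relative: bool, reference_deg: float) -> bool:
    """Compare the displayed angle with the expected angle at each test point."""
    for vane in VANE_POINTS_DEG:
        expected = vane + RESOLVER_ZERO_DEG
        shown = indicator_reading(resolver_output(vane, bias_deg), relative, reference_deg)
        if abs(shown - expected) > TOLERANCE_DEG:
            return False
    return True


bias = 25.0
masked = passes_accuracy_test(bias, relative=True, reference_deg=bias)   # REL hides the bias
caught = passes_accuracy_test(bias, relative=False, reference_deg=bias)  # ABS reveals it
healthy = passes_accuracy_test(0.0, relative=False, reference_deg=0.0)   # unbiased unit
```

Under these assumptions the biased unit passes every test point while the indicator stays in relative mode and fails as soon as the same points are read in absolute mode.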

In February 2019, Collins Aerospace repeated the Peak API offset demonstration at its facility. The test was repeated for the benefit of NTSB and FAA personnel who did not witness the original demonstration at Xtra Aerospace. The procedures followed during the February 2019 demonstration were fundamentally the same as those performed at Xtra Aerospace in December 2018. The conclusions were identical. First, that an equal offset could inadvertently be introduced to both resolvers. Second, that the magnitude of the offset is essentially random. And third, that the offset could go undetected through the CMM return-to-service tests.

All it took was for someone to accidentally toggle (or forget to reset) a simple switch from absolute mode to relative.

When the sensor arrived in Denpasar, the Batam engineer replaced the left AOA sensor. He did not have the equipment for the recommended installation test and so had to use an alternative method, which involved deflecting the vane to the fully-up, centre and fully-down positions while checking the SMYD computer for each position. The engineer said that the values displayed for each position were correct, however, contrary to company policy, he did not record them.

After the crash, he submitted photographs of the SMYD display to prove that the AOA sensor passed the installation test. The timestamp on the photographs was earlier than the arrival of the spare part at the premises, and investigators were able to show that the images showed a different aircraft. Thus, there’s no record at all of the results of the installation test.

If the AOA sensor had been shipped with the resolvers offset, then this should have been obvious from the SMYD display, which would show the misalignment angle or, at its maximum lower stop, AOA SENS INVALID. Investigators tested the offset at 33°, 21° and 20°. In every case, the sensor failed the installation test.
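As an illustration of why an offset of that size is hard to miss, here is a sketch of a three-position check. The expected readings and the tolerance are placeholders I made up, not values from the maintenance manual, and the AOA SENS INVALID case is not modelled; only the three offsets come from the investigators' test.

```python
# Placeholder expected readings at the three vane positions (degrees).
# These are NOT the real figures from the maintenance manual.
EXPECTED_DEG = {"fully_up": 40.0, "centre": 0.0, "fully_down": -40.0}
TOLERANCE_DEG = 5.0  # hypothetical acceptance band


def installation_check(offset_deg: float) -> list[str]:
    """Return the vane positions at which the displayed value is out of tolerance."""
    failures = []
    for position, expected in EXPECTED_DEG.items():
        shown = expected + offset_deg  # a resolver offset shifts every reading
        if abs(shown - expected) > TOLERANCE_DEG:
            failures.append(position)
    return failures


# Offsets tested by the investigators, plus a correctly aligned unit.
results = {offset: installation_check(offset) for offset in (33.0, 21.0, 20.0, 0.0)}
```

With any plausible tolerance, a constant offset of 20° or more puts every one of the three readings out of limits, while a correctly aligned sensor produces no failures.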

The flight recorder recovered from the wreckage showed that the left-side AOA sensor showed a value approximately 21° higher than the value given by the AOA sensor on the right.

It’s hard to believe that the engineer conducted the installation test and checked the values from the SMYD. It seems much more likely that he never conducted the AOA sensor installation test and released the aircraft into service with the misaligned AOA sensor.

I must admit that I am getting out of my depth here. AOA indicators were installed in the later aircraft that I flew, but they were not nearly as essential for the operation of the aircraft as for the management of subsequent models of aircraft with more complex automation and associated electronics. The Citations of those times were equipped with a sort of cone that protruded from the side of the nose section. Two slits in the cone were exposed to the airflow. The difference between the pressure in the slits was measured, causing the cone to rotate. The state of equilibrium was presented to the pilots on the flight director as the angle of attack, and it was always compensated for the actual weight of the aircraft.

This was particularly useful during final approach: with the AOA centered, the aircraft would be at Vref. An electronic bias was fed in, corresponding to the flap setting, so the indication would be correct at any flap selection. The indications were only related to “below”, “above” and of course “at Vref”. During climb, cruise or descent the AOA was not used.

If the AOA did not work – which happened only once to me, during an approach in a snowstorm and gusty winds: the vane heater had failed – the crew would use the Vref as extracted from a simple graph that would be carried in the cockpit, usually a hard copy as part of the checklist. That was the standard procedure, the Vref as computed by the AOA and displayed on the F/D was just a little extra.

I already admitted that in this case I am a full generation (of pilots) behind. So I can only speculate in the hope that someone (Mendel?) will be able to correct me if I got it wrong (again).

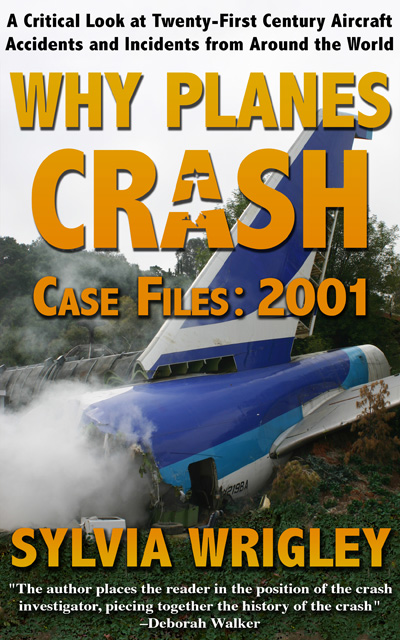

What strikes me is that Sylvia obviously has made a very clear and professional analysis of the AOA system and the role it played in two crashes.

She also explained how maintenance procedures were not properly followed, how vanes were released to service after tests could not be carried out because the overhaul base did not have the means to carry out complete testing, and how maintenance staff in the field either were unable to carry out a proper test, or were insufficiently trained.

It also seems to me that with increasing reliance on electronics, pilot training is putting more and more emphasis on the management of the electronic systems, maybe at the expense of “stick-and-rudder” skills.

Training of a pilot on a sophisticated modern airliner is a very expensive business indeed. Airlines are in the business of transporting passengers and goods by air. It is a very competitive industry, so if a pilot wants to enhance his or her basic flying skills, (s)he can join a flying club, do recreational flying in their own free time and, of course, pay for it themselves.

But the big grey beastie in the room, the one with a trumpet in its long nose, is not mentioned:

If the problem is caused by a faulty AOA system, or not properly understood maintenance procedures, why is the 737 Max still not back in the air?

Manufacturing faults in the AOA vane assembly? Get back to the manufacturer and sort it out. Sue them if necessary.

Maintenance at fault? Improve procedures, issue airworthiness directives and get the FAA to back it. The CAA of other countries will fall in line.

Modification? Installation of a third vane system as a back-up? Not a small task, but it could probably have been accomplished by now.

Training? Cockpit- and maintenance crew could have been trained to recognise the problem, avoid a recurrence. Maintenance by being far more cautious when releasing a unit back to service.

Boeing must have lost a colossal fortune by now. Airlines are suffering too: some had to curtail routes or delay planned expansions, and the situation surrounding the coronavirus is making things even more difficult.

Crew: Hiring suspended, lay-offs. Misery.

So WHY IS THERE STILL NO SIGN of the MAX returning to service?

Who is hiding what and what is it they are hiding?

I think it’s because regulators have discovered Boeing is now a complete disaster, and that they have to check everything that was previously self-regulated by Boeing.

An example is the recent Commercial Crew capsule flight fiasco. First, it flies with the wrong timer setting, which was investigated, resulting in finding a host of other previously-unknown issues, including one which might have caused the service module to thrust back into the capsule after separation. That would be really bad since damaging the heat shield could kill the crew.

Quote:

“The real problem is that we had numerous process escapes in the design, development and test cycle for software,” said Doug Loverro, NASA associate administrator for human exploration and operation.

So NASA is saying Boeing apparently doesn’t know what the hell it’s doing.

I assume the FAA is experiencing a similar situation.

Gene has touched on the scope of the problem; I’ll expand.

Perhaps the FAA (and similar authorities in other countries) feels that Boeing should demonstrate that the failure of a part the manufacturer added to compensate for a bad design should not make the design behave worse than it would without this part? It’s one thing to acknowledge that a new design requires additional skills, even stick-and-rudder skills — although Boeing declined to do this; “fixing” that design with something that turns an airplane into a bucking bronco, with no clear indication of what’s going on, is a failure that can’t be coped with simply by promising that the sensor that triggers the behavior will always be correct (a promise that nobody should believe — that’s why backup systems exist). Boeing seems to have tried to cheat — or cheap out — the certification process; now that they’ve been caught, every stone the FAA turns over seems to reveal another can of worms.

There was an article some months ago arguing that when Boeing (which it saw as being driven by engineers) bought McDonnell-Douglas (which it saw as being driven by modern-practice business people), the M-D practices ended up overriding what Boeing had been doing. It’s all very well to argue that some engineers are so focused on perfection that they become the enemies of good-enough — but who decides what is good enough? I occasionally approached this issue in 20 years as a software engineer, but the code I was responsible for generally just failed under edge conditions rather than putting lies in documents or telling a physical engineer that a part would fit or be strong enough when it wouldn’t, and there were trail files that usually recovered work lost in a crash; there’s no way to re-run the clock on an airplane crash and redirect at the point where something started to go south.

I wouldn’t be surprised if \somebody/ were covering up \something/ at Boeing, but I doubt that’s a major issue. I see two major issues with any large corporation: there’s so much to know that nobody knows more than a fraction of it, and there’s so much to do that people can rely on other people not only to do their defined bit but to deal with difficulties from other areas. There’s a joke that goes back at least as far as my start in computer work 40 years ago; the part I still remember is ” ‘How many hardware engineers does it take to change a light bulb?’ ‘None; it’ll be fixed in software.’ ‘How many software engineers does it take to change a light bulb?’ ‘None; it will be explained in documentation.’ “. ISTM that the hardware was dicey, the software was not sufficient to handle unexpected conditions(*), and the documentation was inadequate and/or was not presented to airline management as something critical for every pilot to know; in a large corporation, the process of passing off responsibility can have so many leaks that finding all of them — or even enough to make sure that the remaining ones are drips rather than torrents — takes time.

(*) this step especially irritates me as a software engineer; I can’t tell you how many problems I had to fix because somebody’s code gave somebody else’s code a space to write an answer in without saying how much space there was, causing something else to be trashed when the answer was too long for the space. Given that most of the old code was written by mathematicians to very primitive standards instead of to even vaguely current ones (using practices from ~1970 that were known not to be rigorous before my employer shipped its first release in the late 1980’s) rather than by trained software engineers, this is not surprising — but Boeing’s engineers should have done better even if their issues were more subtle; handling exceptions gracefully (if not elegantly), or at least failing cleanly instead of going into spasms, is responsible design&coding rather than an indication of genius.

Some of the stories Sylvia brings us have a point failure (e.g., putting a trainee on a busy flight control desk without proper backup), but most have a long sequence of failures that too often end up with bent metal if not bodies. In this case, failures that killed hundreds took place over months and years rather than minutes and hours, and involved orders of magnitude more people; finding and fixing enough of the failures to get reasonable assurance that the MAX is flyable is going to take time.

” Analysis of the aircraft data showed that fifteen flights had recorded “no computed data” for the right flight director parameter, so presumably the SPD and ALT flags had appeared fifteen times on the first officer’s display before the sensor was replaced.”

Um, that’s not good.

“However, that Angle Position Indicator had an extra function, which was a relative mode for testing. The equipment in the manual only allowed for absolute testing and so there was no reference to relative mode. Xtra Aerospace did not develop any specific instructions for the use of their Angle Position Indicator, which would have drawn attention to the existence of the two modes. The FAA should have required this to be documented but had overlooked the lack of a written procedure.”

Um, that’s not good either, that’s egregious.

I think gamers should be consulted regarding how to create a simple display that alerts the flight crew to the fact that key switches are set in positions that should be reviewed. Games are very sophisticated nowadays, as modern aircraft are, and the amount of information available to gamers in the graphics is truly impressive in some games.

What concerns me is the increasing complexity of aircraft, computerized devices of all sorts and the complexity of life in these times. Aircraft are, by nature, complex far beyond driving an earthbound vehicle. Even a simple aircraft can get away from its pilot if there’s a lapse of attention. The systems of a transport category aircraft are much more complex than the average single pilot General Aviation aircraft and that complexity affects the pilots and the technicians working on the planes.

As complexity increases, so does the need for specialization. I could perform most of the tasks involved in working on a 100 Series Cessna with little more than a printed maintenance manual and a way to research ADs, but in the airlines, it required a lot more paperwork, much more comprehensive manuals and there was a lot more specialization. The avionics techs and the NDT guys were probably the most specialized of us all, and that was in the days of 727s and MD-80s.

Towards the end of a C check, the avionics crew would usually be doing their work, checking calibrations, etc. It was fascinating to see, but even on those pterodactyls, it was a specialized task that I wouldn’t have wanted to do unless I had specialized training on those specific tasks and knew my way around the equipment.

But how about the Line mechanics that work under continuous pressure to stay on schedule? I wonder if some of these very specialized tasks are done under pressure to stay on schedule and, if things appear to be ok, the plane is returned to service, even though the testing is not as thorough as it should be. One could conclude that is possibly what happened in this case.

It sounds like a fairly complicated procedure and, while the guys that live and breathe avionics might be very comfortable with it, some Line Tech working under pressure could easily miss some vital detail. I’m not stating that is absolutely what happened in this case, but it seems a reasonable possibility.

Rudy makes an interesting point: if everything is so cut and dried, why does the Max 8 remain on the ground? Is Boeing in a disheveled state? It’s a big question. I have concerns that Boeing has reordered its priorities and perhaps has not kept safety in its rightful position. A lot of American aviation manufacturers are no longer with us; I’d hate to see Boeing end up just a memory. I don’t think that will happen, but I think they need to bat 1,000 from now on, or they could end up in serious trouble.

Beyond that, I think that the entire industry needs to examine its priorities and realize that as complexity grows, so does the potential for unforeseen problems. The 737 Max 8 used a computer to compensate for physical design changes that had an impact on flight characteristics. It worked great, except for the times that the computers were apparently getting inaccurate information. The pilots apparently didn’t know why things were happening as they were and there were tragic results. Computers are magnificent tools, but the added complexity of the MCAS system apparently left pilots in a mystifying situation. In hindsight, it seems obvious, but in real time it must have been terrifying and even the tech that replaced the AoA sensor probably had no idea that a problem with calibration could have such dramatic effects, which feeds back into my initial point about complexity. There comes a point where it becomes very difficult for any one person to understand the complex interactions of a system. It is my opinion that we need to keep technology as our servant and not allow it to become our master.

” I have concerns that Boeing has reordered its priorities and perhaps has not kept safety in its rightful position.” It’s pretty clear that happened; there were headlines some months ago about emails referring deprecatingly to who was in charge. The question now is whether Boeing can fix itself.

Yes Mark, as the old saying goes: “To err is human, to really screw up you need a computer”.

I don’t know enough about the ins and outs, but I did read about changing work ethics and silencing whistleblowers. When the unions started to flex their muscles, Boeing simply opened another factory in a very different part of the USA and hired new staff. This compromised the standards as more and more work was transferred to people who did not really meet the same standards of knowledge and experience of the old workers.

Worse, the same management that managed to degrade the standards and level of supervision also wormed their way into the FAA and persuaded them to allow Boeing to take on a lot of the oversight and supervision and largely regulate themselves.

Money became the overriding factor, more so as Boeing had to face off the increasing competition of Airbus.

The aircraft manufacturing industry has become a very murky playing field with strategic alliances (witness Bombardier), again more aimed to maintain or increase market share, rather than quality and safety.

And yes Mark, we must keep technology as our servant. The later Citations that I flew, like the 550 Bravo, already had more electronics than an airliner of only 10 years before. It included a computerised GPS based navigation system. Airlines, with a fixed route system, will have the routes commonly flown by a particular type of aircraft stored in the database. A corporate jet will operate on the basis of “we go to where the boss happens to need to be”. My F/O often was a younger recruit, far more adept at programming the nav system than I was. But I often had to call them to order: he (or she) would be busy entering the entire route into the computer on the way to the runway. As the Citation has no APU, it had to be done after the engines had been started.

It often took a long time, my priority was to get airborne, not sit at the holding point letting other aircraft pass us by. It was surprising to see how much focus went into programming the aircraft computer system.

Often I had to tell them: “Enter the SID, maybe one or two waypoints and the destination, and we will sort the rest out in the air.”

Simple, but the current computer generation seems to concentrate on the programming, not on the priorities of “getting there”.

Maybe I should take a bit more time, rather than dive straight into the deep end. But, sorry, I enjoy writing “off the cuff” and see where it gets me.

Chip made a very good argument; I made a vague reference to the change in corporate culture, Chip gives a comprehensive summary of the bigger picture, including an insight in how software engineers work. I agree totally with him, so there is no need to repeat.

Just one thing that may warrant a comment: Chip refers to the “fix” that “…turns an aircraft into a bucking bronco”.

Here he is on to something that I think (here I modestly turn and wave to the crowd!) I mentioned in one of the early discussions:

When the Fokker 50 was released, it was initially designated as a “F27-50”, in other words: a common type rating and a lot of sundry savings of money. But the Dutch RLD (the CAA of the Netherlands), probably in consultation with counterparts in other countries, abandoned that idea. It became the Fokker 50, a new type designation, this in spite of a large number of commonalities. The differences were deemed to be too great to allow a common rating, even if the flight characteristics were not really different. OK, the cockpit had been comprehensively redesigned, different engines (RR Darts were replaced with P&W 125, the pneumatic system became hydraulic, a more effective de-icing system was installed, etc.) and so it was a different type.

With the -Max, Boeing decided to stick to a common type. And the comparison with the F27/F50 is in reality not valid.

Whereas Fokker developed a good aircraft into an even better one, with excellent flight characteristics, modern (for the time) avionics and greatly improved economy, not to mention the disappearance of the characteristic high-pitched compressor whine, Boeing made a “hash”, a pig’s ear, out of the 737 when they developed the “Max”.

The fatal mistake was not to develop a new type. Possibly because Boeing (and the airlines) needed a “quick fix”, an intermediate type that would not require much in the way of introduction. Boeing already had its hands full with the assimilation of the 787 Dreamliner.

So they stuck with the same cabin section, the same basic design of the retractable stairs, a very similar wing and landing gear. But that required a repositioning of the new much larger and much more powerful engines. For sufficient ground clearance they had to be moved forward.

The “bucking bronco” was born: at high power settings the engines gave a “nose-up” impetus. Another factor was that the engine nacelles in climb created lift, causing an additional, uncommanded “nose-up”.

In order to control the aircraft the engineers resorted to the MCAS, a system that has been proven to be dangerously unreliable.

The big problem is, of course, that without MCAS the Max will be very difficult to control, even if the pilots are aware of the problem.

Back to Chip and Mark, they explained it all brilliantly !

Oh, Chip, Mark and to whomever this may apply:

The “Max” was introduced by Boeing as just an upgrade of the old and trusted 737. Okay, bigger and with more powerful engines but essentially the same aircraft. The certification process would have been fairly simple and the FAA would have taken Boeing’s word for it. The lax oversight gave Boeing the “soft option”. To compensate for aerodynamic peculiarities in the climb, Boeing opted for the installation of an electronic system that can fail, and HAS failed. With catastrophic consequences.

BUT – and I am now coming to the five-hundred-million-dollar question:

Suppose that the Max was introduced as a new type, even with the lax oversight from the FAA surely this would have led to a much more stringent certification process, the certification would have been subject to much more scrutiny, agree?

Suppose that it emerges that the Max, a new type of aircraft, has the engines placed so far forward that during the climb they tend to push the nose up and thus increase the angle of attack,

Suppose that it emerges that the increased angle of attack at certain speeds causes the engine nacelles to start providing additional lift, causing an uncommanded further increase in the nose-up tendency,

Suppose that this situation can get out of control so rapidly that a new system, called MCAS, has to be installed to prevent the crew from losing control…

Under those circumstances, would the FAA issue a type certification for this aircraft, being a new type?

Or, assuming that they still are willing and able to act responsibly, would they demand a very rigorous re-design of the entire aircraft?

Involving, probably, new stairs, or abandoning the retractable unit altogether; but this self-sufficiency was a sales argument for airlines operating from small airports with limited service infrastructure.

It also would involve the re-design of the landing gear to allow for increased ground clearance and, with the aircraft sitting higher, most importantly moving the engines rearwards to eliminate the nose-up tendency. MCAS still has proven to be useful, e.g. in the KC-46 tanker. Boeing could use this as an argument that MCAS was existing technology, thus bypassing close scrutiny from the FAA.

CAVEAT

The solution that I put here on the forum is purely the result of what I read and is entirely of my own making. I may be totally wrong, the redesign that I suggest here has not been put forward by anyone. But I would look forward to reactions.

There certainly would have been a lot more work to get certified, possibly even an outright refusal, even from the too-tied-to-the-industry FAA. The added time and costs would have affected profits, which would have been a problem for the types apparently now in charge at Boeing. There would also have been much less interest from the airlines (per Wikipedia, almost 5000 737 MAX were ordered); many wanted an aircraft that didn’t require additional pilot training, and Southwest (possibly owner of more active 737s than any other airline), which flies only 737s (IIUC as a way of keeping fares down by increasing flexibility), probably wouldn’t have bought any. (Ryanair works the same way, but Wikipedia says their fleet is half the size of Southwest’s.)

But yes, at some point somebody has to say “This airplane has mission-crept beyond all reason.” Expanding the cute little small-field plane of my youth to carry almost as many people and fly almost as far as the continent-spanning big planes of those times is pushing that line. I wonder whether Boeing investigated whether they could lengthen the gear (so the engines wouldn’t have to be out in front, causing instability) and still keep the 737 label. I also wonder about how useful a rear stair is on a plane that large; how many airports are still reasonable markets for that size plane (138 passengers minimum, says Wikipedia) AND don’t have stairs or jetways of their own? (This might be another cheap-out; Googling “737-max stair” gets a claim that Ryanair would have to pay 5 euros per passenger if it used ground-based stairs instead of built-ins.)

“The investigation discovered that a loose loop of the very fine magnet wire from the primary rotor coil was trapped in the epoxy which was meant to hold the end cap insulator in place.”

I am not a pilot, but I do motion control for a living, and I have to say I’ve never seen a resolver with that sort of failure. I believe it can happen, but even half-assed quality control should have instantly ash-canned that one.

It should never have been installed on an airplane. J.

I hadn’t actually seen the interesting information that Sylvia presents here; it’s in the back of the final accident report. This sensor failed when the airplane had 895 hours and 443 cycles (which presumably translates to 443 cycles of heating and cooling the sensor). A rigorous QA program at Collins that tests these sensors (and should re-test them whenever the production method changes) could have caught this, but didn’t; this is assuming that the fault is present in more sensors than just this one. And the Collins test procedure that checks only one of the two resolvers is inexplicable!

Poor QA at the manufacturer percolates all the way down the chain. The TV reports I have previously linked make that clear. It feels like this is another symptom of the same, and the other issues with the 737max that have come to light since then tell a similar tale.

The FAA problem is this: this plane required MCAS to be certified. If the AoA sensor does not work, MCAS does not work. And each of these planes now grounded could have a sensor that passed factory tests, but could break at any time. I expect that from this one problem alone, Boeing needs to demonstrate that Collins or another supplier is now producing AoA sensors that are tested to reliably sustain rated hours and cycles, and then install these in the aircraft.

With the 777x, Boeing has another airplane due that adds sweeping new changes to an old design while requiring minimal retraining for the pilots. I wonder how that’s going to turn out.

It still does not explain why the 737 evolved to such an extent that without all these gizmos the aircraft can become uncontrollable.

The “classic” 737 was a beauty to handle. Without the need for any electronic stability augmentation. I have flown it, unofficially. One of my original instructors on the Cessna 310 was a 737 skipper (with a company called Transavia). On a cargo flight – I believe it was a delivery of sheep to the Middle East – I went along in the cockpit. As it happened, the F/O was an old friend of mine and I was allowed to fly the aircraft, including during climb, fairly shortly after departure and quite far into the initial approach. It was probably a 737-100; in passenger configuration it would have seated about 70. Its handling was viceless. What went wrong between the first model and the Max?

I’m starting to think, Mr. Jakma, that it might be quicker to make a list of the aircraft that you have NOT flown… :) I’ll start: The SR-71 Sled, the B-17 Flying Fortress, the Goodyear Blimp, and the Tupolev TU-144… ;-P Carry on! Great stuff. J.

Jon,

It seems that you have had a peek at my old logbooks?

No it was not that bad but in the “good ole” days we just flew every aircraft that we could lay hands on.

TU144? I was at Le Bourget and saw it crash, not nice!

The biggest machine that I was qualified on was the BAC 1-11 500. It could carry 114 passengers.

The types that I have logged most hours on were: Fokker F27, about 4500, Cessna Citation, probably about 3900 and the PA 18 Super Cub, about 3500 hours. Unofficially I have flown the 737 (it was a 200 series), the Douglas DC 4, Beech D18 and Curtiss C46 Commando. Although, the captains actually signed my logbook for the DC4.

The smallest one I have flown was a Rollason Turbulent. The microlight had not yet been “invented”, but it was a tiny single seater powered by a modified VW Beetle engine. Actually, “powered” was not the correct word, it should be “underpowered”.

In the ‘seventies I was an active overseas member of the Tiger Club at Redhill; the chairman, Michael Jones, remarked that I flew more than many local members and I should pay full membership.

My employer did a lot of business in the Gatwick (we nicknamed it “Gatport Airwick”) area and I bought a Ford Cortina which a ramp controller, John Hazelgrove, was kind enough to keep for me. Later I managed to persuade my boss that it would be a good idea to position the Cessna 310 which I was flying (PH-STR, later N444ST) to Redhill after customs clearance. It was only a 5 minute “hop” and saved a lot in parking fees at Gatwick Airport. Of course, I then spent the time flying the club aircraft: Tiger Moth, Stampe, Fournier, Turbulent. Can you imagine, I paid £ 7 (yes: seven Pounds !) per hour for a Tiger Moth or a Stampe SV 4. Those were the days!

Enough types, Jon? There are plenty more but I have to call it a stop.

Thanks for your comments.

Wow. You successfully operated a Ford Cortina. ;-)

Now back to the nitty-gritty: The “Max”.

If I understand all that has been written by Sylvia, Chip, Mendel and some other people with more technical knowledge in their little finger than I had in my brain (remember, I only flew them. If anything broke, the technical experts were there to “unbreak” them): It all seems to boil down to

The Max will behave very badly if the MCAS system fails.

There is no back-up in the event that MCAS fails.

Essential components (the AoA sensors) have a manufacturing quality problem that is difficult to identify.

There is little or no way of determining how many of those AoA vanes are defective, tests may be inconclusive.

And so, it seems to me, the entire fleet will remain grounded until Collins can prove beyond reasonable doubt that they can supply AoA vanes that do not have these manufacturing faults.

And also, now that the FAA has been woken up, more or less in the same manner as if a bucket of ice water is thrown over a sleeping dog (forget about the quick brown fox!), it may be necessary to redesign the whole system first before the Max can be returned to the skies.

Any of our experts with a reply? Please !

That’s probably a good summary, thank you!

What I am still uninformed about is how “badly” the airplane handles with MCAS off. Aerodynamic stability by design means that the aircraft should want to pitch down at the onset of stall, and without MCAS, the 737max wouldn’t do that?

Mendel,

As I mentioned, repeatedly: I am merely a “stick handler”, and a long retired one at that.

In my days, the handling of any commercial aircraft was determined by its presumed aerodynamic stability. Not even the A320 was different; only the way the inputs were processed and analysed by computers before being turned into commands to the controls was different.

The first military aircraft had come onstream that were deliberately unstable. This allowed the designers to give them a previously impossibly high manoeuvrability. At the expense of the need for “fly-by-wire”, computerised systems that could compensate for the limitations of the human brain. Quite a few commercial aircraft also have some form of “artificial feel” built in to overcome a pilot’s natural instincts and reaction time when a different configuration and a different speed resulted in (perceived) different forces needed to operate the controls.

Even the Fokker 50 had something like “artificial feel”: without it, just during the landing flare, the control force would suddenly feel very light and could lead to overcontrol. A simple spring solved it, although if the pilot was aware of a detached or broken spring, there was no problem.

Now, with my limited and rusty knowledge, about the Max:

The engines, much more powerful, also are bigger than previously installed powerplants.

To achieve required minimum ground clearance, this led to the need to place the engines more forward of the wing.

The result will of course be a nose-up moment at high power settings. To a large extent this can be compensated for by an artificial feel, or a system that automatically compensates by trimming the aircraft. I assume that this is one of the tasks of MCAS. NO, I do not get this from reading up. I am, as is my sometimes unfortunate habit, trying to reason this out for myself. Instead of “thinking aloud” I am “thinking whilst typing”, so beware, I could get this one wrong!

So far so good. Now, I refer to something that I read and that stuck in my mind: I read somewhere that in a climb (speed probably in the order of 250 kts IAS), at of course a still high power setting, the engine nacelles themselves create lift. This is independent of the power setting.

The engines being relatively far forward, that would increase the nose-up tendency of the aircraft. All harmless, the pilots are unaware of this. Or, at least, supposed to be unaware. MCAS is taking care of this all and masking the symptoms.

UNLESS and until, of course, a faulty AoA sensor makes the MCAS go totally unreliable, confused, resulting in different contradicting indications and MCAS reacting by giving uncommanded and actually undesired trim inputs.

If the pilots (without full knowledge of what is going on because the manufacturer has decided not to make it mandatory to train them in the way MCAS works and how to recognise a malfunctioning MCAS) do hit on the correct solution, it may already be too late.

My guess still is that by that time the aircraft could have gone into “Mach tuck” where it exceeded its maximum Mach number (MMO) and suffered reversal of controls, as well as rapidly increasing control forces. This would have led to the pilots’ inability to take control. The aircraft may have suffered structural damage even before impact. This may not necessarily have resulted in parts detaching in mid-air. If so, components found a distance from the accident scene certainly would have been a tell-tale for the investigators.

OK technical experts, what do you make of this?

I may be totally on the wrong track and will bow to superior knowledge!

I was a software engineer rather than an aeronautic engineer, and I haven’t asked the nephew who did get a degree in aeronautic engineering. But I think I can make a couple of useful comments.

Mendel: the issue was not that the aircraft wouldn’t pitch down when it stalled, but that it was more likely to pitch up and go into a stall, for reasons described above by Rudy. A stalling aircraft

* would at least be uncomfortable for passengers, costing sales once the pattern became obvious; an alert FAA would say there’s too great a chance of injuring passengers (even if they’re \supposed/ to be wearing seatbelts and have loose objects secured).

* could even be hazardous because a high angle-of-attack is most common during the initial climb, in order to comply with noise regulations against flying low over populated areas. (Rudy — does this match your experience? I noticed a much gentler climb the one time I rode an eastward takeoff from Boston’s seaside airport on a transatlantic flight; since the plane wasn’t going to turn back over land it didn’t have to climb so steeply.) A plane that stalled at a low-enough altitude might not be able to recover.

Note that both crashes happened during the initial climb; the angle of attack is also high during landing, but IIUC not nearly as much so.

Rudy — all of that seems correct to me, including the IIRC-documented decision not to require extra training that would teach pilots both to recognize a malfunction and to handle it correctly (e.g., disable the MCAS, and probably return to the departure airport since the plane was no longer as certified). The normal answer to a critical-path device is to provide backup, AND some way to know when the backup is needed — in time to shift control to the backup — but recognizing such a situation is difficult without training. (I’ve read that some critical systems have three matching paths with circuitry to ignore one if it disagrees with the other two, but I don’t know how common this is even on spacecraft — which have even less margin than the pre-MCAS MAX.)
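To make the "three matching paths" idea concrete, here is a minimal Python sketch of a two-out-of-three voter. It is only an illustration of the general technique, not how any aircraft system is actually built; the function name `vote`, the `tolerance` parameter and the sample readings are all hypothetical.

```python
from statistics import median


def vote(readings, tolerance):
    """Two-out-of-three voting sketch (hypothetical helper, not a real avionics API).

    Takes three redundant readings of the same quantity, returns the median
    as the agreed value, plus the indices of any readings that differ from
    that median by more than `tolerance`.
    """
    if len(readings) != 3:
        raise ValueError("expected exactly three readings")
    agreed = median(readings)
    # A reading far from the median is outvoted by the other two.
    suspects = [i for i, r in enumerate(readings) if abs(r - agreed) > tolerance]
    return agreed, suspects


# Made-up values in degrees: the third channel is far off and gets flagged.
value, suspects = vote([5.0, 5.2, 26.0], tolerance=2.0)
print(value, suspects)
```

With only two paths, a disagreement can be detected but the system cannot tell which path is wrong; the third path is what lets the odd one out be ignored.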

I do wonder whether offering the training would have been a red flag to the FAA (cf your previous discussion) rather than being treated as just a routine extension of fly-by-wire. I also wonder whether training for mechanics should also have been offered (or even required); the problems there would include making sure that only trained mechanics worked on the sensor and that they were given time to do the job correctly.

A broader response: I think the discussion about sensor QC (or even QA) misses the point; everything is subject to failure, and unseeable failures are more likely in small parts. The issue is that there was not enough planning and action about how to deal with such a failure; there was no training, there were inadequate backups, the software didn’t handle edge cases, and on and on.

Now that the FAA is looking closely, they’re finding more problems, e.g. vulnerability to shutdowns from lightning strikes: https://www.npr.org/2020/02/25/809399855/regulators-issue-another-safety-fix-for-boeings-troubled-737-max-plane

Chip,

I will study your reaction a bit more closely, but for starters: In a climb the aircraft usually accelerates to 250 kts IAS until passing thru FL 100 (10,000 feet) when the speed restriction no longer applies.

During descent the aircraft will go through the opposite: below FL 100 reduce to 250 kts and gradually, as instructed by ATC, through further speed reductions. During the final segments the AoA is actually high, but then the configuration will change: flaps, gear.

The big difference is that, even with the same speed, the nose will be down and, most important, the power setting will be less. So even assuming an amount of lift generated by the nacelles (as I mentioned before, I read that somewhere, it was news to me), at least there would not be the same amount of pitch-up moment generated by high power.

A stall would be worse than just a bit of discomfort; it can – if at a low altitude – be life-threatening.

When the prototype of the BAC 1-11 went into a deep stall – whereby the elevator section of the T-tail came into the air that was disturbed by the stalled wing – the remedy was the stick shaker and subsequently the stick pusher. The idea was to prevent a stall altogether.

The AoA is of course directly related to stall prevention systems. Did I read that in the Max the shaker operated? It would have presented many conflicting inputs to the unfortunate crew (and the passengers).

Obviously, under the circumstances the stall prevention would not have operated correctly. It is quite possible that a stall followed a high speed dive and resulted in exceeding the MMO.

Sorry, typo: at the end: …a stall would be followed by a high speed dive of course.

I found a blog called “Bjorn’s Corner” on leehamnews.com with two series on “Pitch Stability” and “Fly by steel or electrical wire” that deal with pitch augmentation systems in general and specifically MCAS. What I understand is that, with a clean wing, once a 737max reaches ~13° AoA, without augmentation the airplane would keep pitching up with no additional stick pressure, potentially pulling itself into a stall. At this point, the bare airplane is still “controllable”, as the pilot can push the stick to counteract this movement, but it’s certainly unexpected: we want to have to pull more on the stick for the plane to pitch up more. With behaviour like this, if it is too pronounced, the FAA won’t certify the aircraft.

The Airbus has the same issue, but the computers deal with it by design: the sidestick commands translate into fixed amounts of g or pitch rate no matter what flight regime the aircraft is in, and the computers make that happen. On the 737, the yoke is still connected to the elevators by cables, so that’s not so easy, which is why the solution was to trim the plane nose down if the plane goes to that AoA: the pilot has to pull the yoke more to pitch up more, counteracting the trim and not the aerodynamics, which is artificial but feels natural.

Now this whole system is not needed when the slats are out, because then airflow on the wing changes substantially, which already rules out the high-AoA situations like a takeoff climb or a landing flare. Bjorn proposes that if an airliner circles at 30° bank and then encounters some turbulence on top of that, that crucial angle that triggers the MCAS could be reached, and most airline pilots would never see that happen. (What they overlooked is that any cybernetic system is only as good as its data, and with a sensor fault…)

So if you flew a 737max, I expect you’d think it handled just as well as the older models did, unless you were taking it to more than 13° AoA with no flaps and no MCAS!
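As a rough illustration of the "only as good as its data" point, the Python sketch below contrasts a trigger that trusts a single AoA value with one that first cross-checks two sensors. This is not Boeing's logic: the function names and the disagreement limit are hypothetical, and the 13° threshold is simply the approximate figure quoted above.

```python
# Illustrative constants only; neither is taken from any Boeing document.
AOA_THRESHOLD_DEG = 13.0  # the ~13 degree figure mentioned in this thread
MAX_DISAGREE_DEG = 5.0    # hypothetical limit for the two-sensor cross-check


def single_sensor_trim(aoa_deg, flaps_up):
    """Request nose-down trim based on one sensor, with no sanity check."""
    return flaps_up and aoa_deg > AOA_THRESHOLD_DEG


def cross_checked_trim(aoa_left_deg, aoa_right_deg, flaps_up):
    """Request nose-down trim only if both sensors agree and both are high."""
    if abs(aoa_left_deg - aoa_right_deg) > MAX_DISAGREE_DEG:
        # The sensors disagree, so neither can be trusted: do nothing.
        return False
    return flaps_up and min(aoa_left_deg, aoa_right_deg) > AOA_THRESHOLD_DEG


# Made-up fault case: left vane reads far too high, right vane is plausible.
print(single_sensor_trim(25.0, flaps_up=True))        # trusts the bad value
print(cross_checked_trim(25.0, 4.0, flaps_up=True))   # inhibited by the check
```

The cross-check costs nothing when both sensors are healthy, but in the fault case it turns an unwanted trim command into no command at all.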

@Chip: I seem to remember the lightning safety issue from one of the documentaries I saw; they reported that Boeing solved the issue by firing the engineer who brought it to their attention.

Very interesting stuff, thanks to all. A good exchange, thank you Sylvia.

And yes, a good way to solve a problem is “shoot the messenger”.

The House Transportation Committee has published a preliminary report on the 737max issues; I found their press release, which has a link to the full document: https://transportation.house.gov/news/press-releases/nearly-one-year-after-launching-its-boeing-737-max-investigation-house-transportation-committee-issues-preliminary-investigative-findings-